Robbyant Open Sources LingBot World: A Real-Time World Model for Interactive Simulation and Integrated AI

Robbyant, the integrated AI unit within the Ant Group, has open-sourced LingBot-World, a global model that transforms video production into an interactive simulator for integrated agents, automated driving and games. The system is designed to provide controllable environments with high visual fidelity, dynamic flexibility and long time horizons, while remaining responsive enough for real-time control.

From text to video to text to world

Most text to video models produce short clips that look realistic but behave like passive movies. They do not show how actions change the environment over time. LingBot-World is built instead as a world model with action mode. It learns the evolution of the virtual world, so that keyboard and mouse inputs, as well as camera movements, can trigger the evolution of future frames.

Formally, the model learns the conditional distribution of future video tokens, given past frames, language information and different actions. During training, it predicts sequences in up to 60 seconds. During prediction, it can automatically extract a coherent video stream that extends to about 10 minutes, while keeping the scene composition stable.

A data engine, from web video to interactive trajectories

The core design in LingBot-World is the integrated data engine. It provides a rich, interactive overview of how actions change the world while incorporating a variety of real-world scenes.

The data acquisition pipeline includes 3 sources:

- Large-scale web videos of people, animals and vehicles, from both first-person and third-person views

- Game data, where RGB frames are tightly coupled with user controls such as W, A, S, D and camera parameters

- Synthetic trajectories are rendered in the Unreal Engine, where clean frames, camera intrinsics and extrinsics and object properties are all known.

After collection, the profiling stage measures this diverse corpus. It filters resolution and duration, splits videos into clips and estimates missing camera parameters using geometry and pose models. The visual language model finds clips of quality, motion magnitude and view type, and selects a selected subset.

On top of this, the section captions module creates 3 levels of text control:

- Narrative captions for all trajectories, including camera movements

- Static scene captions describe the structure of a scene without motion

- Caption The temporal density of the short time windows focused on the local dynamics

This separation allows the model to separate stationary structures from moving patterns, which is important for long-horizon simulations.

Architecture, the core of the MoE video and action environment

LingBot-World starts from Wan2.2, 14B parameter image to video streaming converter. This core already captures powerful open source videos. The Robbyant team is expanding into a mix of DiT specialists, and 2 specialists. Each expert has about 14B parameters, so the total number of parameters is 28B, but only 1 expert is active in each step of noise generation. This keeps the projection cost the same as the dense 14B model while expanding capacity.

The curriculum extends the training sequence from 5 seconds to 60 seconds. The scheduler increases the proportion of high-noise time steps, which stabilizes world structures over long content and reduces mode folding in long releases.

To make the model interactive, actions are injected directly into the transformer blocks. The camera rotation is coded for Plücker embedding. Keyboard actions are represented as multiple thermal vectors over keys such as W, A, S, D. This encoding is combined and passed through standard dynamic layer modules, which model hidden states in DiT. Only the action adapter layers are fine-tuned, the main video core remains frozen, so the model maintains the visual quality from the previous training while learning the action response from a small interactive dataset.

The training uses both picture-to-video and video-to-video activities. Given a single image, the model can combine future frames. Given a small clip, we can extend the sequence. This results in an internal transition function that can start from arbitrary time points.

LingBot World Fast, distillation for real-time use

The centrally trained model, LingBot-World Base, still relies on multi-step propagation and full-time attention, which is expensive for real-time interactions. The Robbyant team presents LingBot-World-Fast as an accelerated variant.

The fast model starts from a high-noise expert and replaces full-temporal attention with block-causal attention. Within each temporal block, attention is bidirectional. In every block, it is the cause. This design supports key-value caching, so the model can stream frames automatically at low cost.

Distillation uses a forced diffusion technique. The learner is trained on a small set of target time steps, including time step 0, to detect both noisy and clean maskers. Analog Distillation Distillation is combined with a discriminating head. The loss of the opponent only revives the racist. The learner network is updated with the loss of distillation, which stabilizes the training while maintaining subsequent action and temporal coherence.

In tests, LingBot World Fast achieves 16 frames per second when processing 480p videos on a 1-node GPU system, and, keeps end-to-end processing latency under 1 second for real-time control.

Immediate memory and long-horizon behavior

One of the most interesting features of LingBot-World is the emergency memory. The model maintains global consistency except for discrete 3D representations such as Gaussian splatting. If the camera moves away from a historic site like Stonehenge and returns after about 60 seconds, the structure appears and has a fixed geometry. If the vehicle leaves the frame and later re-enters, it appears in a static state, not frozen or reset.

The model can also store very long sequences. The research team demonstrates the production of coherent videos of up to 10 minutes, with a stable structure and narrative structure.]

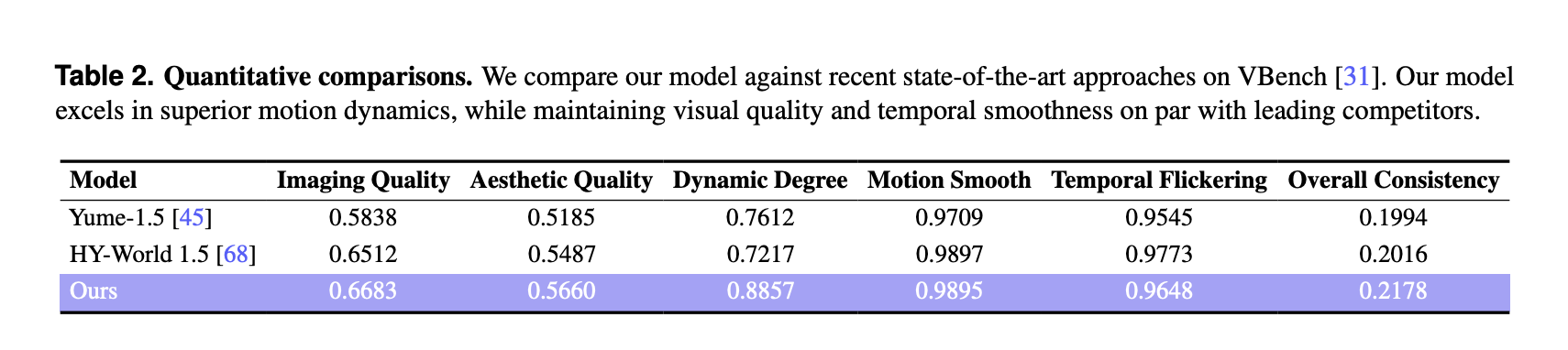

VBench results and comparison with other global models

To evaluate the value, the research team used VBench on a selected set of 100 generated videos, each longer than 30 seconds. LingBot-World is compared to 2 recent world versions, Yume-1.5 and HY-World-1.5.

On VBench, LingBot World reports:

These high scores are based on both photographic quality, aesthetic quality and dynamic quality. The dynamic degree margin is large, 0.8857 compared to 0.7612 and 0.7217, which shows rich scene transitions and complex movements that respond to user input. Motion smoothness and temporal flicker are compared to the best baseline, and the method achieves the best agreement metric among the 3 models.

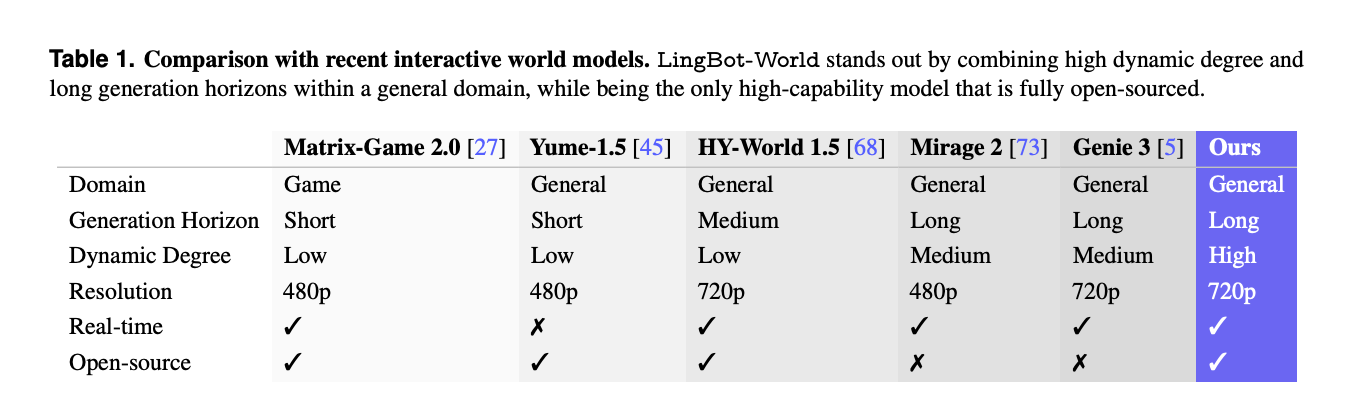

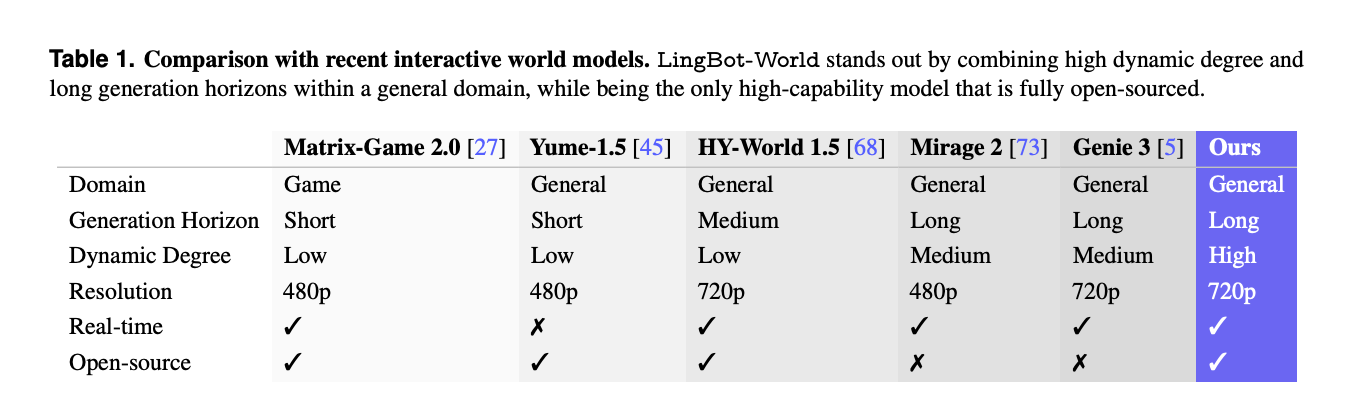

A separate comparison with other interactive systems such as Matrix-Game-2.0, Mirage-2 and Genie-3 highlights that LingBot-World is one of the few fully open world models that includes standard background coverage, long generation horizon, high power rating, 720p resolution and real-time capabilities.

Applications, fast worlds, agents and 3D reconstruction

Besides video integration, LingBot-World is positioned as an integrated AI testing ground. The model supports instant world events, where text commands change the weather, lighting, style or inject local events such as fireworks or animals moving over time, while preserving the local structure.

It can also train downstream action agents, for example with a small action model of the language of vision such as Qwen3-VL-2B predictive control policies from images. Because the generated video stream is geometrically consistent, it can be used as input to 3D reconstruction pipelines, which generate stable point clouds for indoor, outdoor and artificial scenes.

Key Takeaways

- LingBot-World is an action-oriented world model that extends text-to-video to text-to-world simulation, where keyboard actions and camera movements directly control video output over a long horizon of up to 10 minutes.

- The system is trained on a composite data engine that includes web videos, game logs with action labels and Unreal Engine trajectories, as well as sequential narration, static scene and dense temporal captions to distinguish structure from movement.

- The core backbone is a combination of 28B parameters of diffusion transformer specialists, built from Wan2.2, with 2 specialists of 14B each, and fine-tuned action adapters while the optical backbone remains frozen.

- LingBot-World-Fast is a hybrid that uses block causal attention, diffusion forcing and parallel distillation to achieve up to 16 frames per second at 480p on 1 GPU node, with a reported end-to-end time of less than 1 second for collaborative use.

- In VBench with 100 generated videos longer than 30 seconds, LingBot-World reports the highest capture quality, aesthetic quality and variable degree between Yume-1.5 and HY-World-1.5, and the model shows emerging memory and stable long-range structure suitable for embedded agents and 3D reconstruction.

Check it out Paper, Repo, project page and model weights. Also, feel free to follow us Twitter and don't forget to join our 100k+ ML SubReddit and Subscribe to Our newspaper. Wait! are you on telegram? now you can join us on telegram too.