AI2 Releases SERA, Soft Verified Coding Agents Built With Supervised Training Only for Repository-Level Automation Workflows

Researchers at the Allen Institute for AI (AI2) introduce SERA, Soft-Verified Efficient Repository Agents, a family of coding agents that aims to match larger closed systems using only supervised training on synthetic trajectories.

What is SERA?

SERA is the first release in AI2's Open Coding Agents series. The flagship model, SERA-32B, is built on the Qwen 3 32B architecture and is trained as a repository-level coding agent.

On SWE-bench Verified at 32K context, SERA-32B reaches a resolve rate of 49.5 percent. At 64K context it reaches 54.2 percent. These numbers place it in the same performance band as open-weight systems such as Devstral-Small-2 (24B parameters) and GLM-4.5 Air (110B parameters), while SERA remains fully open in code, data, and weights.

The series currently includes four models: SERA-8B, SERA-8B GA, SERA-32B, and SERA-32B GA. All are released on Hugging Face under the Apache 2.0 license.

Soft Verified Generation

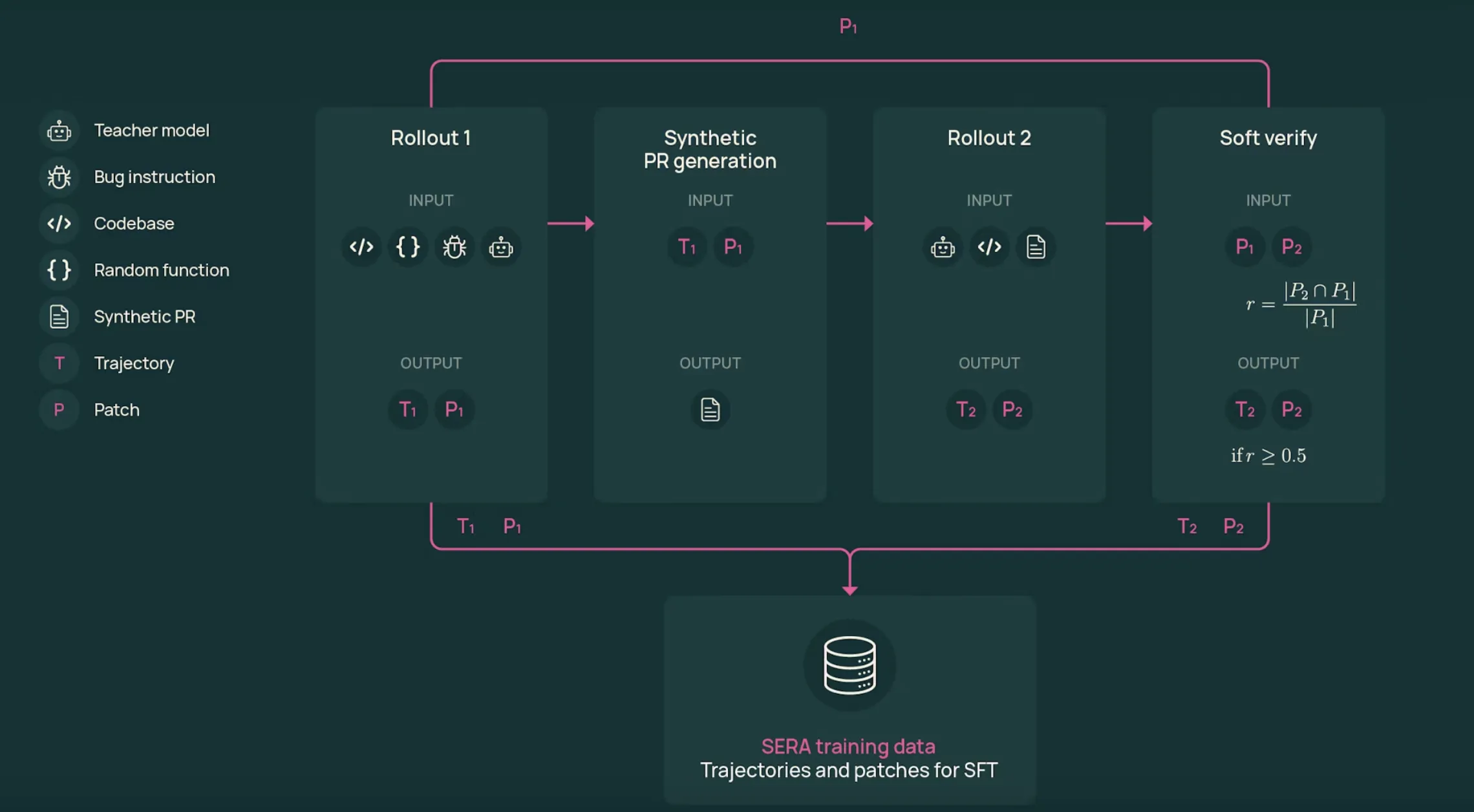

The training pipeline relies on Soft Verified Generation (SVG). SVG produces agent trajectories that resemble realistic developer workflows, then uses the patch agreement between two rollouts as a soft signal of correctness.

The procedure is:

- First rollout: A function is sampled from a real repository. The teacher model, GLM-4.6 in the SERA-32B setup, receives a bug-style or change description and uses tools to view files, edit code, and run commands. It produces a trajectory T1 and a patch P1.

- Synthetic pull request: The system converts the trajectory into a pull-request-style description. This text summarizes the intent and key edits in a format similar to real pull requests.

- Second rollout: The teacher starts again from the original repository, but now it sees only the pull request description and the tools. It produces a new trajectory T2 and a patch P2 that attempt to implement the described change.

- Soft verification: Patches P1 and P2 are compared line by line. A recall score r is computed as the fraction of lines modified in P1 that also appear in P2. If r equals 1, the trajectory is hard verified; for intermediate values, the sample is soft verified.
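The recall score in the last step can be sketched as a multiset overlap of changed lines. This is a minimal illustration, not AI2's implementation: it assumes both patches are unified diffs, treats each added ("+") or removed ("-") line as one unit including its sign, ignores context lines, and returns 0.0 for an empty P1 by convention. The function names are hypothetical.

```python
from collections import Counter


def changed_lines(patch: str) -> Counter:
    """Multiset of added ("+") and removed ("-") lines in a unified diff."""
    lines: Counter = Counter()
    for raw in patch.splitlines():
        # Skip file headers ("--- a/x", "+++ b/x") and hunk headers ("@@ ... @@").
        if raw.startswith(("--- ", "+++ ", "@@")):
            continue
        # Keep the +/- sign so an added line never matches a removed one.
        # Context lines (leading space) are ignored.
        if raw.startswith(("+", "-")):
            lines[raw] += 1
    return lines


def soft_verification_recall(p1: str, p2: str) -> float:
    """r = (changed lines of P1 also present in P2) / (changed lines of P1)."""
    reference = changed_lines(p1)
    total = sum(reference.values())
    if total == 0:
        return 0.0  # empty P1: nothing to recall (a convention chosen here)
    candidate = changed_lines(p2)
    # Counter "&" keeps the minimum count per line, i.e. a multiset intersection.
    matched = sum((reference & candidate).values())
    return matched / total


P1 = """\
--- a/m.py
+++ b/m.py
@@ -1,2 +1,2 @@
 def f(x):
-    return x
+    return x + 1
"""
# A second rollout that removes the same line but writes the addition differently.
P2 = P1.replace("return x + 1", "return x+1")

print(soft_verification_recall(P1, P1))  # 1.0 -> hard verified
print(soft_verification_recall(P1, P2))  # 0.5 -> soft verified
```

Exact string matching is the strictest reasonable choice; a real pipeline might normalize whitespace before comparing, which would raise r for formatting-only differences.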

The key result from the ablation study is that strict verification is not required. When models are trained on T2 trajectories filtered at different thresholds on r, even a threshold of r equal to 0, performance on SWE-bench Verified is comparable at a fixed sample count. This suggests that realistic multi-step traces, even if noisy, are valuable supervision for coding agents.
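The threshold comparison can be pictured as filtering a pool by r and then drawing the same number of samples for every threshold. This is a hypothetical sketch: the `Sample` record, the "unverified" label for r equal to 0, and uniform random sampling with a fixed seed are assumptions for illustration, not details reported for SERA.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    trajectory_id: str
    recall: float  # soft-verification score r in [0, 1]


def verification_label(r: float) -> str:
    """r == 1 -> hard verified, 0 < r < 1 -> soft verified, r == 0 -> unverified."""
    if r >= 1.0:
        return "hard"
    if r > 0.0:
        return "soft"
    return "unverified"


def build_training_set(samples, threshold: float, budget: int, seed: int = 0):
    """Keep samples with r >= threshold, then draw a fixed-size subset.

    Holding `budget` constant across thresholds mirrors a comparison at a
    fixed sample count; threshold=0.0 keeps every trajectory, verified or not.
    """
    pool = [s for s in samples if s.recall >= threshold]
    if len(pool) < budget:
        raise ValueError(f"only {len(pool)} samples pass r >= {threshold}")
    return random.Random(seed).sample(pool, budget)


samples = [Sample(f"traj-{i}", r) for i, r in enumerate([1.0, 0.5, 0.0, 0.8, 0.0, 1.0])]
for t in (0.0, 0.5, 1.0):
    subset = build_training_set(samples, t, budget=2)
    print(t, [verification_label(s.recall) for s in subset])
```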

Data scale, training, and costs

SVG is applied to 121 Python repositories derived from the SWE-smith corpus. Across the GLM-4.5 Air and GLM-4.6 teacher runs, the full SERA datasets contain more than 200,000 trajectories from both rollouts, making them among the largest open agent datasets.

SERA-32B is trained on a subset of 25,000 T2 trajectories from the Sera-4.6-Lite T2 dataset. Training uses a standard supervised fine-tuning configuration with Axolotl on Qwen-3-32B for 3 epochs, with a learning rate of 1e-5, weight decay of 0.01, and a maximum sequence length of 32,768 tokens.
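For reference, the reported hyperparameters can be collected in one place. This sketch uses key names from Axolotl's YAML configs (`base_model`, `num_epochs`, `learning_rate`, `weight_decay`, `sequence_len`); the Hugging Face model id is an assumption, and settings the article does not report (batch size, optimizer, schedule, dataset path) are deliberately omitted rather than guessed.

```python
# Hyperparameters reported for SERA-32B's supervised fine-tuning run.
# Key names follow Axolotl's config conventions; the model id is an assumption.
SERA_32B_SFT = {
    "base_model": "Qwen/Qwen3-32B",
    "num_epochs": 3,
    "learning_rate": 1e-5,
    "weight_decay": 0.01,
    "sequence_len": 32_768,
}

NUM_T2_TRAJECTORIES = 25_000  # subset drawn from the Sera-4.6-Lite T2 dataset


def sample_passes(num_samples: int, cfg: dict) -> int:
    """Total trajectory presentations over the run (samples x epochs)."""
    return num_samples * cfg["num_epochs"]


def fits_context(num_tokens: int, cfg: dict) -> bool:
    """True if a tokenized trajectory fits in the configured sequence length."""
    return num_tokens <= cfg["sequence_len"]


print(sample_passes(NUM_T2_TRAJECTORIES, SERA_32B_SFT))  # 75000
```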

Many trajectories are longer than the context limit. The research team defines a truncation ratio, the fraction of a trajectory's steps that fit within 32K tokens. They prefer trajectories that already fit entirely, and for the rest they select slices with a high truncation ratio. This ordered truncation strategy clearly outperforms random truncation on SWE-bench Verified scores.
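A minimal sketch of ordered truncation, assuming each trajectory is represented by its per-step token counts and that a "slice" is the longest prefix of steps that fits the budget. Both assumptions are for illustration; the paper's exact slicing rule may differ, and the function names are hypothetical.

```python
CONTEXT_LIMIT = 32_768  # tokens


def fitting_prefix(step_tokens: list[int], limit: int = CONTEXT_LIMIT) -> int:
    """Number of leading steps whose cumulative token count stays within limit."""
    total = 0
    for i, n in enumerate(step_tokens):
        total += n
        if total > limit:
            return i
    return len(step_tokens)


def truncation_ratio(step_tokens: list[int], limit: int = CONTEXT_LIMIT) -> float:
    """Fraction of steps that fit; 1.0 means the whole trajectory fits."""
    if not step_tokens:
        return 0.0  # empty trajectory: treat as useless
    return fitting_prefix(step_tokens, limit) / len(step_tokens)


def ordered_truncation(trajectories, budget: int, limit: int = CONTEXT_LIMIT):
    """Pick `budget` trajectories by descending truncation ratio, then cut each
    to its fitting prefix. Ratio 1.0 (already fits) naturally sorts first;
    Python's sort is stable, so ties keep their input order."""
    ranked = sorted(trajectories, key=lambda t: truncation_ratio(t, limit), reverse=True)
    return [t[: fitting_prefix(t, limit)] for t in ranked[:budget]]


# Toy example with a 100-token limit instead of 32K.
pool = [[90, 20, 20, 20, 20], [50, 40, 40, 40], [30, 30, 30], [60, 30, 20]]
print(ordered_truncation(pool, budget=3, limit=100))
# [[30, 30, 30], [60, 30], [50, 40]]
```

A random-truncation baseline would instead pick trajectories and cut points uniformly, which more often keeps short fragments of long trajectories.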

The reported compute budget for SERA-32B, including data generation and training, is about 40 GPU days. Using a scaling law fit over dataset size and performance, the research team estimates that SVG is roughly 26 times cheaper than reinforcement learning-based systems such as SkyRL-Agent and 57 times cheaper than earlier synthetic data pipelines such as SWE-smith at reaching the same SWE-bench score.
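As a back-of-envelope reading only, and assuming the 26x and 57x ratios apply at SERA-32B's own operating point (the article reports the ratios, not these absolute figures), the implied equivalent budgets for the same score would be:

```latex
\begin{aligned}
C_{\text{SkyRL-Agent}} &\approx 26 \times 40\ \text{GPU-days} = 1040\ \text{GPU-days},\\
C_{\text{SWE-smith}}   &\approx 57 \times 40\ \text{GPU-days} = 2280\ \text{GPU-days}.
\end{aligned}
```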

Repository specialization

A central use case is adapting an agent to a specific repository. The research team studies this on three major SWE-bench Verified projects: Django, SymPy, and Sphinx.

For each repository, SVG generates on the order of 46,000 to 54,000 trajectories. Due to compute limits, the specialization experiments train on 8,000 trajectories per repository, mixing 3,000 verified T2 trajectories with 5,000 filtered T1 trajectories.
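The per-repository mix can be sketched as two fixed-size draws followed by a shuffle. This is a hypothetical illustration: the article does not describe the T1 filtering criterion, so both pools are assumed to be already verified or filtered upstream, and uniform sampling with a seed and the pool sizes below are assumptions.

```python
import random


def mix_specialization_set(t2_verified, t1_filtered, n_t2=3_000, n_t1=5_000, seed=0):
    """Draw a fixed mix of T2 and T1 trajectories for one repository.

    Inputs are assumed to be verified / filtered upstream; the filtering rules
    themselves are not modeled here.
    """
    if len(t2_verified) < n_t2 or len(t1_filtered) < n_t1:
        raise ValueError("not enough trajectories for the requested mix")
    rng = random.Random(seed)
    mix = rng.sample(t2_verified, n_t2) + rng.sample(t1_filtered, n_t1)
    rng.shuffle(mix)  # interleave sources so batches are not single-source
    return mix


# Hypothetical pools totaling 50,000, inside the reported 46,000 to 54,000 range.
t2_pool = [f"t2-{i}" for i in range(20_000)]
t1_pool = [f"t1-{i}" for i in range(30_000)]
django_mix = mix_specialization_set(t2_pool, t1_pool)
print(len(django_mix))  # 8000
```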

At 32K context, these specialized students match or slightly exceed the GLM-4.5-Air teacher and also compare well with Devstral-Small-2 on those repository subsets. On Django, the specialized student reaches a resolve rate of 52.23 percent versus 51.20 percent for GLM-4.5-Air. On SymPy, the specialized model reaches 51.11 percent versus 48.89 percent for GLM-4.5-Air.

Key Takeaways

- SERA turns coding-agent training into a supervised learning problem: SERA-32B is trained with standard supervised fine-tuning on synthetic trajectories from GLM-4.6, with no reinforcement learning loop and no reliance on repository test suites.

- Soft Verified Generation removes the need for tests: SVG uses two rollouts and the patch overlap between P1 and P2 to compute a soft verification score, and the research team shows that even unverified or partially verified trajectories can train effective coding agents.

- A large, realistic agent dataset from real repositories: The pipeline runs SVG on 121 Python projects from the SWE-smith corpus, generating more than 200,000 trajectories and one of the largest open datasets for coding agents.

- Efficient training with transparent cost and scaling analysis: SERA-32B trains on 25,000 T2 trajectories, and the scaling study shows that SVG is about 26 times cheaper than SkyRL-Agent and 57 times cheaper than SWE-smith at the same SWE-bench Verified performance.
