Microsoft AI Releases Fara-7B: An Efficient Agentic Model for Computer Use

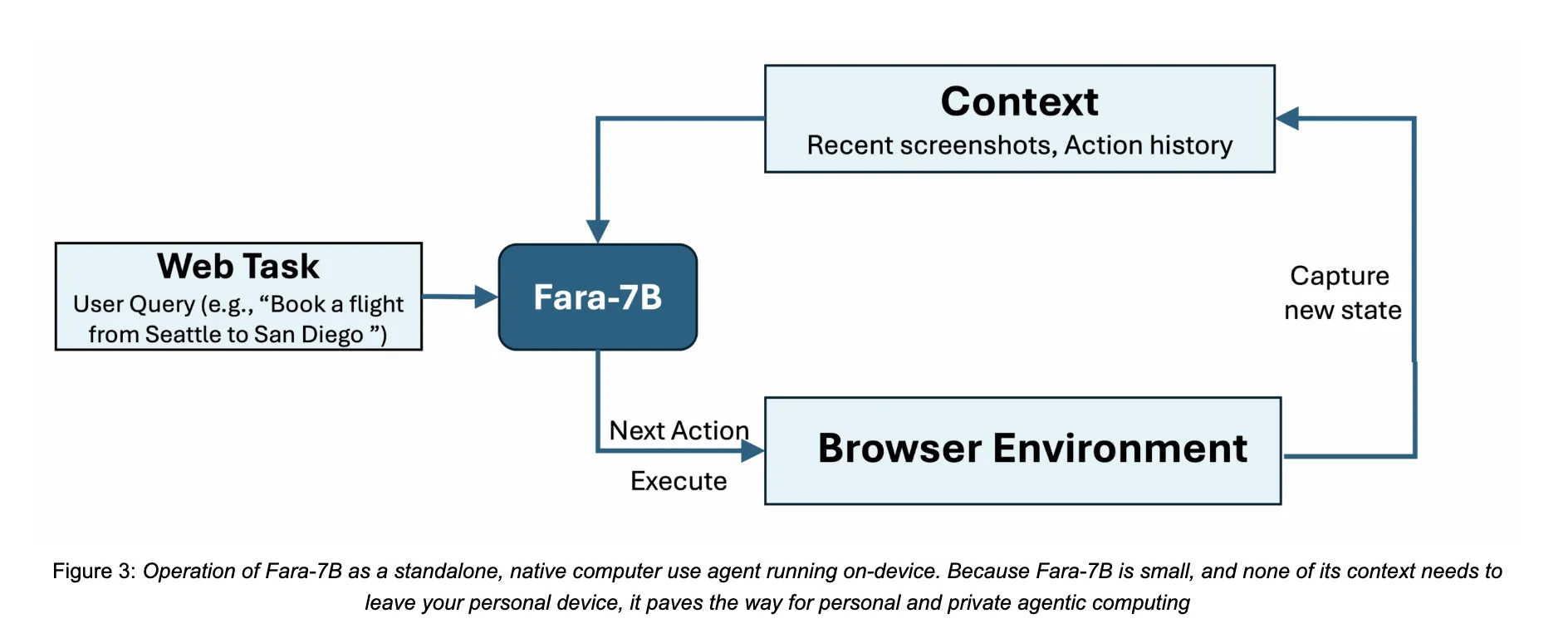

How do we allow an AI agent to handle real web tasks such as booking, searching, and filling out forms directly on our own devices, without sending everything to the cloud? Microsoft Research has released Fara-7B, a 7-billion-parameter agentic small language model designed for computer use. It is an open-weight Computer Use Agent that works from screenshots, predicts mouse and keyboard actions, and is small enough to run on a single user device, which keeps browsing data local.

From Chatbots to Computer Use Agents

Standard chat LLMs return text. Computer Use Agents such as Fara-7B instead operate a browser or desktop user interface to complete tasks such as filling out forms, navigating travel bookings, or comparing prices. They perceive the screen, reason about the page layout, and then execute low-level actions such as click, scroll, type, web_search, or visit_url.

Most existing systems rely on large multimodal models wrapped in complex scaffolds that parse accessibility trees and orchestrate many tools. This increases latency and often requires server-side deployment. Fara-7B compresses the behavior of such multi-agent systems into a single multimodal decoder-only model built on Qwen2.5-VL-7B. It consumes browser screenshots and text context, then directly outputs its reasoning as text followed by a tool call with grounded arguments such as coordinates, text, or URLs.
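
Because each step yields reasoning text followed by a tool call, a harness around the model has to split the two before executing anything. The sketch below assumes a hypothetical output format (free-form thought, then a JSON object wrapped in `<tool_call>` tags); it is not Fara-7B's documented format, and the names `Step` and `parse_step` are illustrative.

```python
import json
import re
from dataclasses import dataclass
from typing import Any

# Hypothetical output format (an assumption, not Fara-7B's documented one):
# free-form thought text followed by a JSON tool call wrapped in <tool_call> tags.
TOOL_CALL_RE = re.compile(r"<tool_call>\s*(\{.*?\})\s*</tool_call>", re.DOTALL)


@dataclass
class Step:
    thought: str
    action: str
    arguments: dict[str, Any]


def parse_step(model_output: str) -> Step:
    """Split one model response into its thought and its grounded tool call."""
    match = TOOL_CALL_RE.search(model_output)
    if match is None:
        raise ValueError("no tool call found in model output")
    call = json.loads(match.group(1))
    # Everything before the tool call is treated as the model's reasoning.
    thought = model_output[: match.start()].strip()
    return Step(thought=thought, action=call["name"], arguments=call.get("arguments", {}))


if __name__ == "__main__":
    output = (
        "The search box is near the top of the page; I will click it first.\n"
        '<tool_call>{"name": "left_click", "arguments": {"coordinate": [640, 112]}}</tool_call>'
    )
    step = parse_step(output)
    print(step.action, step.arguments["coordinate"])
```

Keeping the thought separate from the action lets a harness log the reasoning while executing only the structured call.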

FaraGen, synthetic trajectories for web interaction

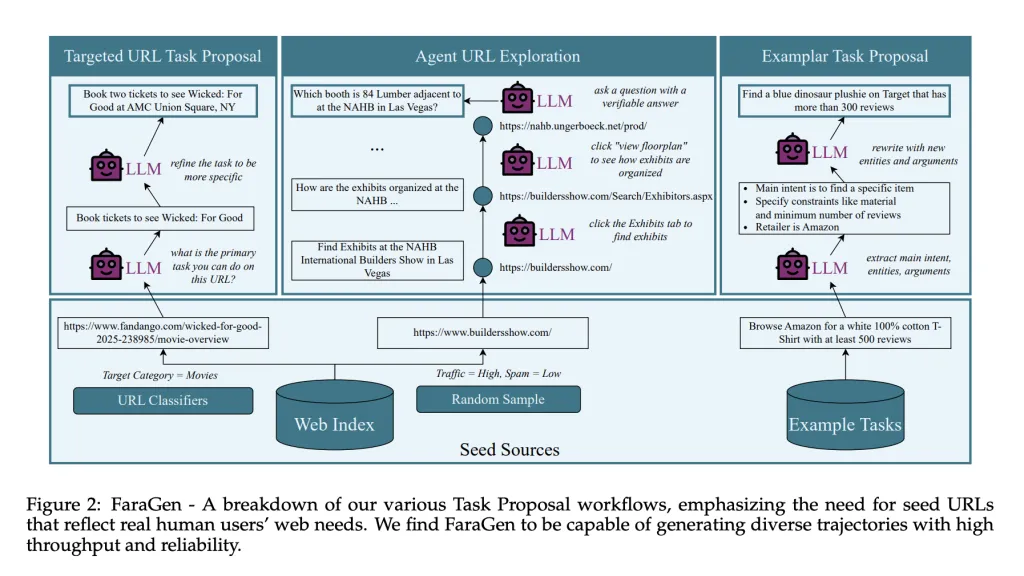

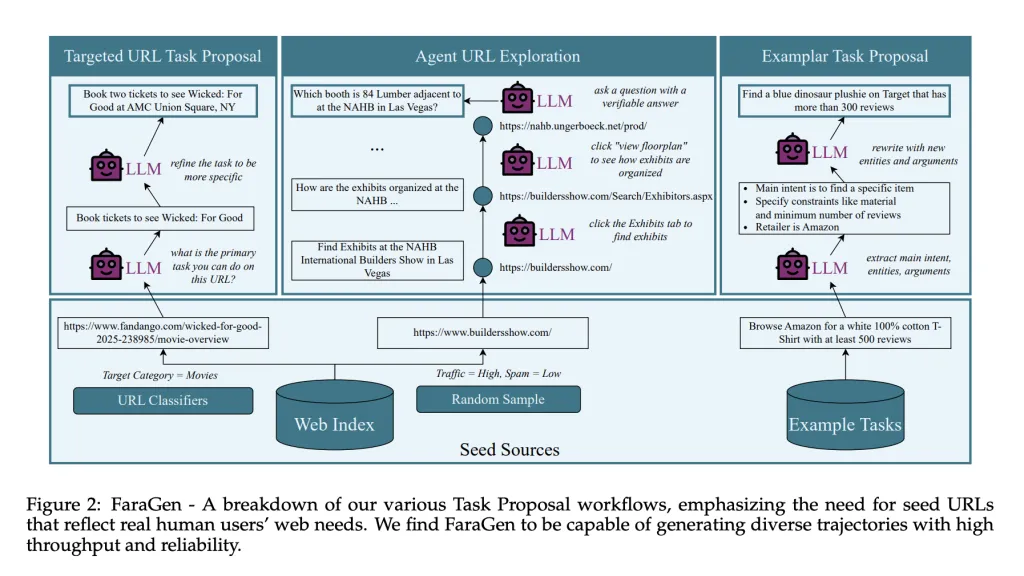

The key bottleneck for computer use agents is data. High-quality logs of human web interaction with multi-step actions are rare and expensive to collect. The Fara project introduces FaraGen, a synthetic data engine that generates and filters web trajectories on live sites.

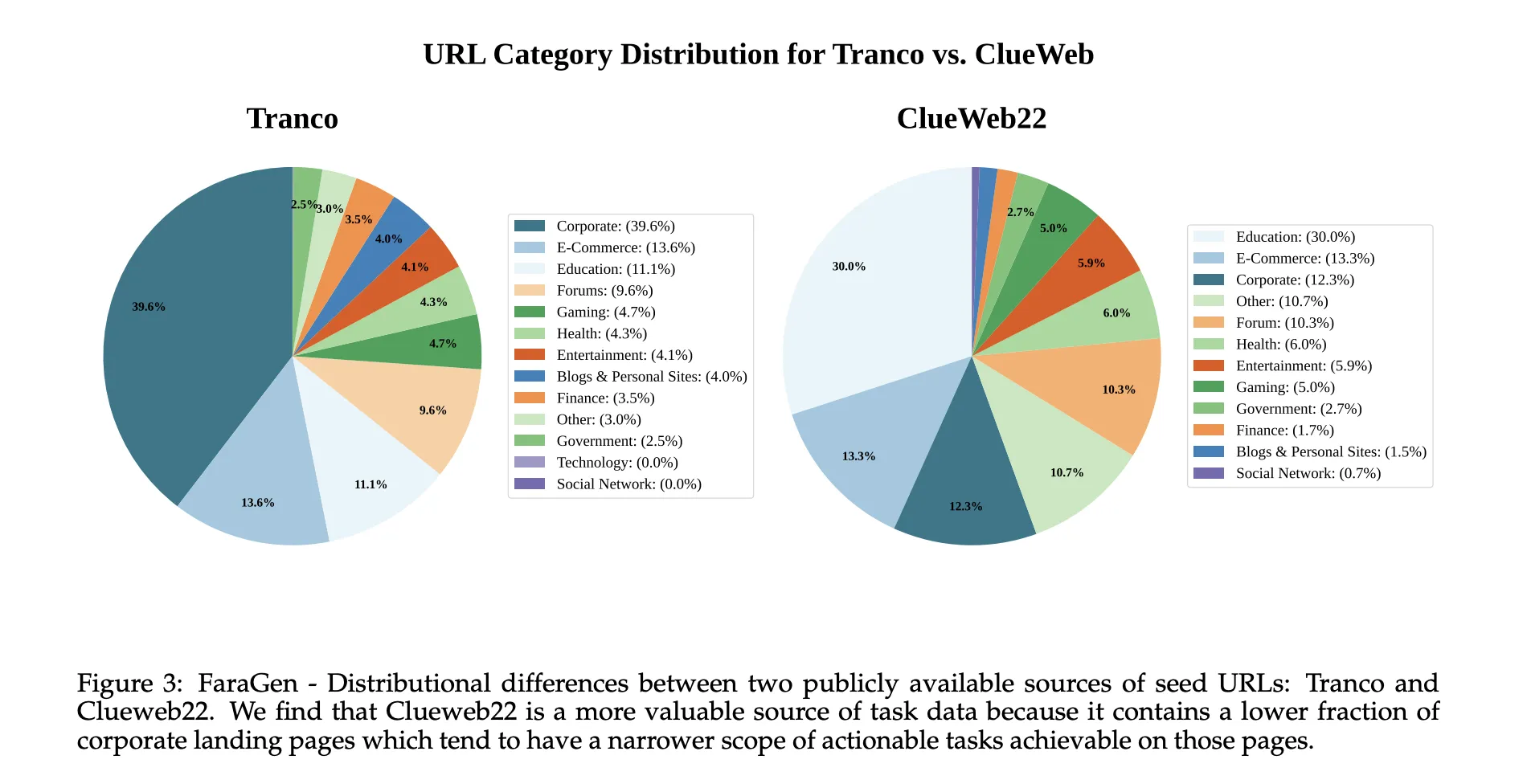

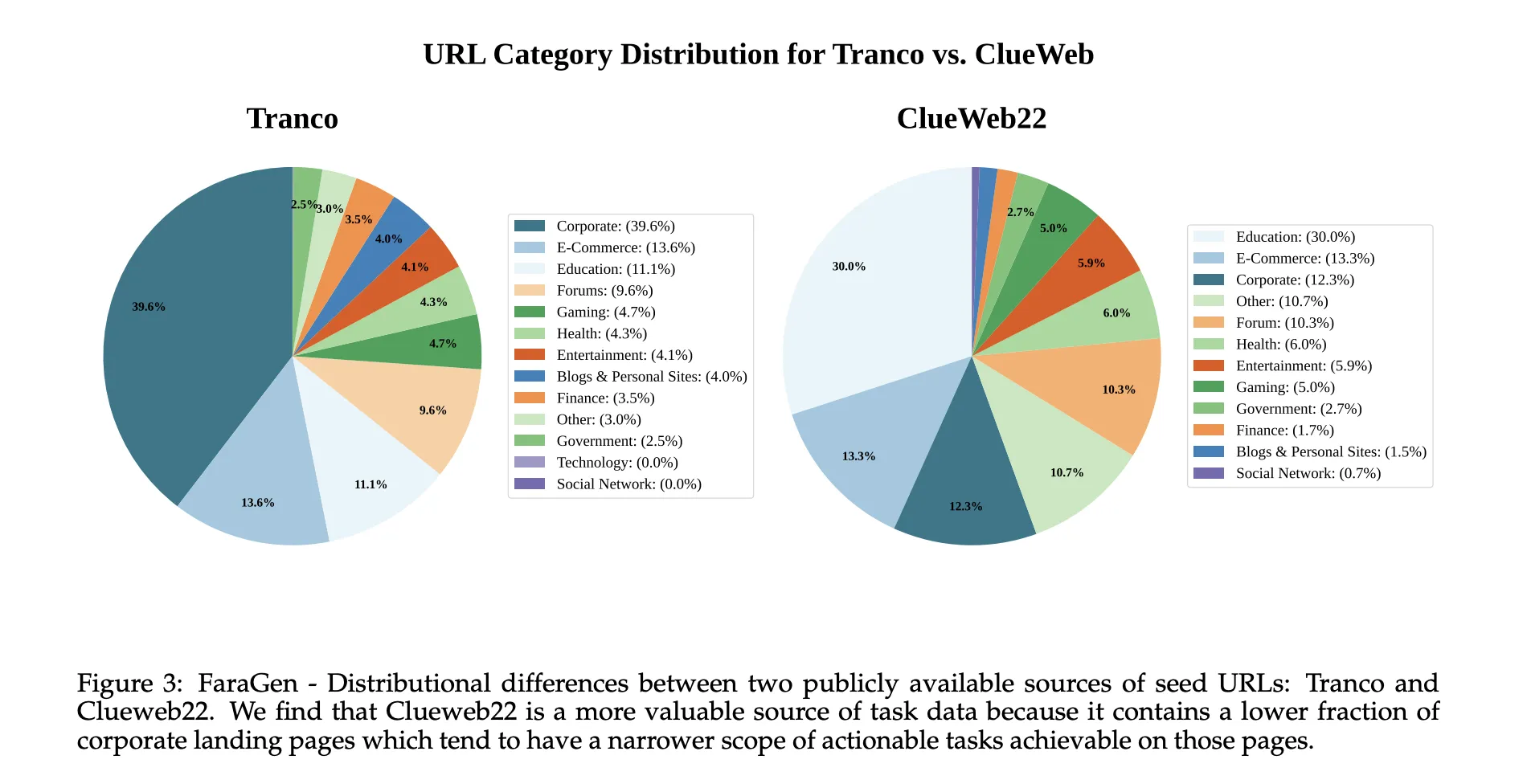

FaraGen uses a three-stage pipeline. Task Proposal starts from seed URLs drawn from public web corpora such as ClueWeb22 and Tranco, grouped into domains such as commerce, travel, entertainment, or forums. Large language models turn each URL into realistic tasks that users might attempt on that page, for example booking specific tickets or creating a shopping list with constraints on reviews and materials. Tasks must be achievable without a login or paywall, fully specified, useful, and automatically verifiable.
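
The acceptance criteria for proposed tasks amount to a conjunction of checks. The sketch below assumes each criterion has already been judged (for example by an LLM) and stored as a boolean flag; the `ProposedTask` schema and function names are hypothetical, not FaraGen's code.

```python
from dataclasses import dataclass


@dataclass
class ProposedTask:
    """A candidate task proposed for a seed URL (hypothetical schema)."""
    seed_url: str
    category: str          # e.g. "commerce", "travel", "entertainment", "forums"
    instruction: str
    requires_login: bool
    behind_paywall: bool
    fully_specified: bool  # all details needed to finish the task are given
    useful: bool           # something a real user might plausibly want
    auto_verifiable: bool  # success can be checked automatically


def passes_task_filter(task: ProposedTask) -> bool:
    """Keep a task only if it meets every acceptance criterion."""
    accessible = not (task.requires_login or task.behind_paywall)
    return accessible and task.fully_specified and task.useful and task.auto_verifiable


def filter_tasks(tasks: list[ProposedTask]) -> list[ProposedTask]:
    return [t for t in tasks if passes_task_filter(t)]


if __name__ == "__main__":
    candidates = [
        ProposedTask("https://example.com/cinema", "entertainment",
                     "Book two tickets for the 7 pm showing of a specific film",
                     False, False, True, True, True),
        ProposedTask("https://example.com/account", "commerce",
                     "Change the saved shipping address",
                     True, False, True, True, True),
    ]
    for task in filter_tasks(candidates):
        print(task.instruction)
```

In this toy run only the ticket-booking task survives, because the second task needs a login.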

Task Solving runs a multi-agent system based on Magentic-One and Magentic-UI. An Orchestrator agent plans the high-level strategy and maintains a ledger of task state. A WebSurfer agent observes accessibility trees and Set-of-Marks screenshots, then executes browser actions through Playwright, such as click, type, scroll, and visit_url. A user simulator agent supplies follow-up instructions when a task needs clarification.
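
The division of labor between the orchestrator, its ledger, the web-browsing agent, and the user agent can be pictured as a simple dispatch loop. This is a heavily simplified sketch, not Magentic-One's implementation: the agents are plain callables, and `Ledger`, `run_orchestrator`, and the `NEEDS_CLARIFICATION` sentinel are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable

NEEDS_CLARIFICATION = "NEEDS_CLARIFICATION"  # hypothetical sentinel from the web agent


@dataclass
class Ledger:
    """Task state tracked by the orchestrator (simplified, hypothetical)."""
    task: str
    plan: list[str]
    completed: list[str] = field(default_factory=list)
    clarifications: list[str] = field(default_factory=list)

    @property
    def done(self) -> bool:
        return len(self.completed) == len(self.plan)


def run_orchestrator(
    ledger: Ledger,
    web_surfer: Callable[[str], str],
    user_simulator: Callable[[str], str],
    max_turns: int = 20,
) -> Ledger:
    """Dispatch plan steps to the web agent, asking the user agent when it is stuck."""
    for _ in range(max_turns):
        if ledger.done:
            break
        subgoal = ledger.plan[len(ledger.completed)]
        result = web_surfer(subgoal)
        if result == NEEDS_CLARIFICATION:
            # Record the simulated user's answer and retry the same subgoal.
            ledger.clarifications.append(user_simulator(subgoal))
            continue
        ledger.completed.append(f"{subgoal}: {result}")
    return ledger


if __name__ == "__main__":
    asked: list[str] = []

    def toy_surfer(subgoal: str) -> str:
        # Toy stand-in: asks for clarification the first time it sees the date step.
        if "date" in subgoal and subgoal not in asked:
            asked.append(subgoal)
            return NEEDS_CLARIFICATION
        return "ok"

    ledger = Ledger("Book a table", ["open site", "pick date", "confirm"])
    run_orchestrator(ledger, toy_surfer, lambda s: "Any evening next week")
    print(ledger.completed, ledger.clarifications)
```

The `max_turns` bound keeps a stuck trajectory from looping forever, which matters when trajectories are generated at scale.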

Trajectory Verification uses three LLM-based verifiers. An Alignment Verifier checks whether the actions and the final answer align with the task intent. A Rubric Verifier generates a rubric for the task and assigns partial completion scores. A Multimodal Verifier inspects the screenshots and the final answer to catch hallucinations and confirm that the visual evidence supports success. These verifiers agree with human labels in 83.3 percent of cases, with reported false positive and false negative rates of around 17 to 18 percent.
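
Two small pieces make the verification step concrete: combining the three verdicts into a keep-or-drop decision, and measuring that decision against human labels. The combination rule (all verifiers must pass, rubric score at least 0.8) is an assumption for illustration; the article does not say how FaraGen combines them. The agreement and error rates use the standard confusion-matrix definitions.

```python
from dataclasses import dataclass


@dataclass
class Verdicts:
    """Outputs of the three verifiers for one trajectory (hypothetical schema)."""
    alignment_ok: bool    # actions and final answer match the task intent
    rubric_score: float   # partial-completion score in [0, 1]
    multimodal_ok: bool   # screenshots support the claimed outcome


def accept(v: Verdicts, rubric_threshold: float = 0.8) -> bool:
    # Assumption: keep a trajectory only if every verifier judges it a success.
    # The 0.8 threshold is illustrative, not a value reported for FaraGen.
    return v.alignment_ok and v.multimodal_ok and v.rubric_score >= rubric_threshold


def agreement_stats(predicted: list[bool], human: list[bool]) -> dict[str, float]:
    """Compare verifier decisions against human success labels."""
    if len(predicted) != len(human) or not human:
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(predicted, human))
    tp = sum(p and h for p, h in pairs)
    tn = sum(not p and not h for p, h in pairs)
    fp = sum(p and not h for p, h in pairs)
    fn = sum(not p and h for p, h in pairs)
    return {
        "agreement": (tp + tn) / len(pairs),
        # Share of human-labeled failures that the verifiers accepted.
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        # Share of human-labeled successes that the verifiers rejected.
        "false_negative_rate": fn / (fn + tp) if fn + tp else 0.0,
    }
```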

After filtering, FaraGen yields 145,603 trajectories with 1,010,797 steps over 70,117 unique domains. Trajectories range from 3 to 84 steps, with an average of 6.9 steps and about 0.5 unique domains per trajectory, indicating that many tasks involve sites not seen elsewhere in the dataset. Generating the data with premium models such as GPT-5 and o3 costs about 1 dollar per verified trajectory.
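
The per-trajectory averages follow directly from the reported totals. This back-of-envelope check uses only the numbers above; the total-cost line takes the "about 1 dollar" figure literally, so treat it as an order-of-magnitude estimate.

```python
def dataset_stats(trajectories: int, steps: int, unique_domains: int,
                  cost_per_trajectory_usd: float) -> dict[str, float]:
    """Derive per-trajectory averages from the reported dataset totals."""
    return {
        "avg_steps_per_trajectory": steps / trajectories,
        "unique_domains_per_trajectory": unique_domains / trajectories,
        "approx_total_cost_usd": trajectories * cost_per_trajectory_usd,
    }


if __name__ == "__main__":
    stats = dataset_stats(145_603, 1_010_797, 70_117, cost_per_trajectory_usd=1.0)
    for name, value in stats.items():
        print(f"{name}: {value:,.2f}")
```

This gives about 6.94 steps and 0.48 domains per trajectory, consistent with the rounded 6.9 and 0.5, and a generation budget on the order of 145,000 dollars.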

Model architecture

Fara-7B is a single multimodal decoder-only model that uses Qwen2.5-VL-7B as its base. It takes as input the user goal, the most recent screenshots from the browser, and the complete history of previous thoughts and actions. The context window is 128,000 tokens. At each step the model first generates a chain of thought that describes the current state and plan, and then emits a tool call that specifies the next action and its arguments.
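
One way to assemble that per-step input is to keep a bounded window of screenshots alongside an unbounded text history. In this sketch the class name, the `build_input` layout, and the default of three screenshots are all assumptions; the article only says "recent screenshots" and "complete history".

```python
from collections import deque


class AgentContext:
    """Per-episode input for a screenshot-driven agent (hypothetical sketch)."""

    def __init__(self, goal: str, max_screenshots: int = 3):
        # Assumption: only the N most recent screenshots are kept, while the
        # full text history of thoughts and actions is retained.
        self.goal = goal
        self.screenshots: deque[bytes] = deque(maxlen=max_screenshots)
        self.history: list[tuple[str, str]] = []

    def observe(self, screenshot: bytes) -> None:
        # Appending beyond maxlen silently drops the oldest screenshot.
        self.screenshots.append(screenshot)

    def record(self, thought: str, tool_call: str) -> None:
        self.history.append((thought, tool_call))

    def build_input(self) -> dict:
        return {
            "goal": self.goal,
            "screenshots": list(self.screenshots),
            "history": list(self.history),
        }
```

Bounding the images while keeping the text history is one plausible way to stay within a fixed context window, since screenshots cost far more tokens than short thoughts and tool calls.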

The tool space mirrors the Magentic-UI computer_use interface. It includes key, type, mouse_move, left_click, scroll, visit_url, web_search, history_back, pause_and_memorize_fact, wait, and terminate. Coordinates are predicted directly as pixel positions on the screenshot, which lets the model operate without access to the accessibility tree at inference time.
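
Since coordinates are raw pixel positions, a harness can cheaply reject malformed calls before handing them to a browser. The sketch covers only a subset of the actions (key, type, mouse_move, left_click, scroll, visit_url), and the argument names `coordinate`, `url`, and `text` are assumptions, not the official computer_use schema.

```python
# Hypothetical validator for a subset of the browser tool calls.
# Argument names ("coordinate", "url", "text") are assumptions.
ACTIONS = {"key", "type", "mouse_move", "left_click", "scroll", "visit_url"}
POINTER_ACTIONS = {"mouse_move", "left_click"}


def validate_action(name: str, args: dict, width: int, height: int) -> None:
    """Raise ValueError if a tool call is malformed for a width x height screenshot."""
    if name not in ACTIONS:
        raise ValueError(f"unknown action: {name}")
    if name in POINTER_ACTIONS:
        coord = args.get("coordinate")
        if not (isinstance(coord, (list, tuple)) and len(coord) == 2):
            raise ValueError(f"{name} needs an (x, y) coordinate")
        x, y = coord  # pixel position predicted directly by the model
        if not (0 <= x < width and 0 <= y < height):
            raise ValueError(f"coordinate {(x, y)} is outside the {width}x{height} screen")
    if name == "visit_url" and not str(args.get("url", "")).startswith(("http://", "https://")):
        raise ValueError("visit_url needs an absolute http(s) URL")
    if name == "type" and not isinstance(args.get("text"), str):
        raise ValueError("type needs a text argument")
```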

Training uses supervised fine-tuning over nearly 18 million samples that mix multiple data sources. These include FaraGen trajectories broken into step-level action examples, grounding and UI localization tasks on screenshots, screenshot-based question answering, and safety data.
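
Mixing several sources during fine-tuning is often implemented as weighted sampling over source types. The weights below are placeholders invented for illustration, since the article does not report the actual proportions, and `sample_sources` is a hypothetical helper.

```python
import random
from collections import Counter

# Hypothetical mixture weights: the article does not report the actual proportions.
MIXTURE = {
    "trajectory_steps": 0.6,
    "grounding": 0.2,
    "screenshot_qa": 0.15,
    "safety": 0.05,
}


def sample_sources(n: int, weights: dict[str, float], seed: int = 0) -> list[str]:
    """Draw the source of each of n training examples in proportion to its weight."""
    rng = random.Random(seed)  # seeded for reproducible mixtures
    names = list(weights)
    return rng.choices(names, weights=[weights[k] for k in names], k=n)


if __name__ == "__main__":
    print(Counter(sample_sources(10_000, MIXTURE)))
```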

Benchmarks and efficiency

Microsoft evaluates Fara-7B on four live web benchmarks: WebVoyager, Online-Mind2Web, DeepShop, and the new WebTailBench, which focuses on under-represented categories such as restaurants, job applications, comparison shopping, and compositional tasks.

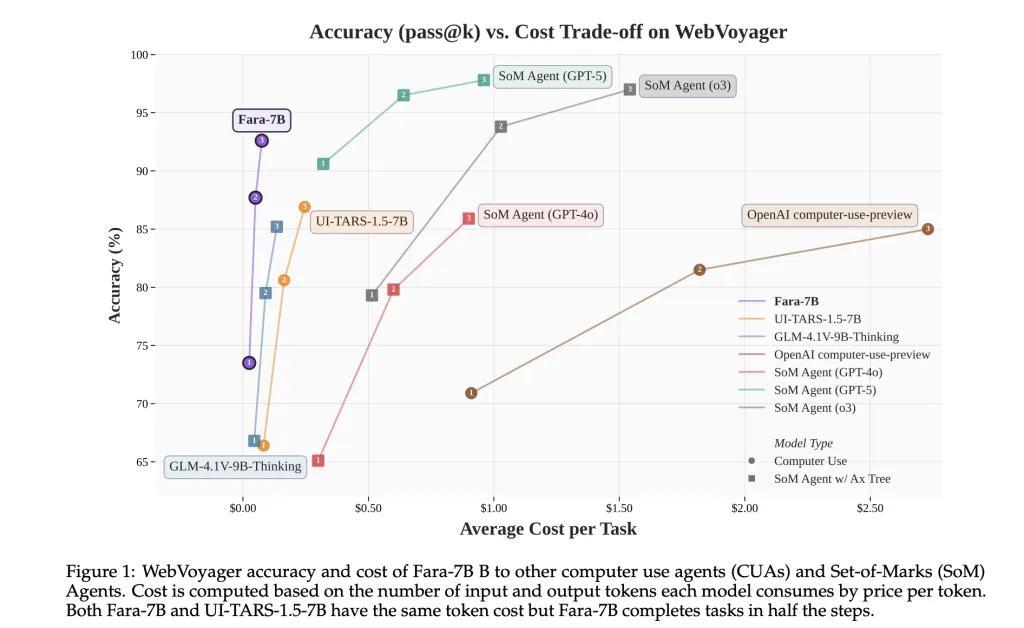

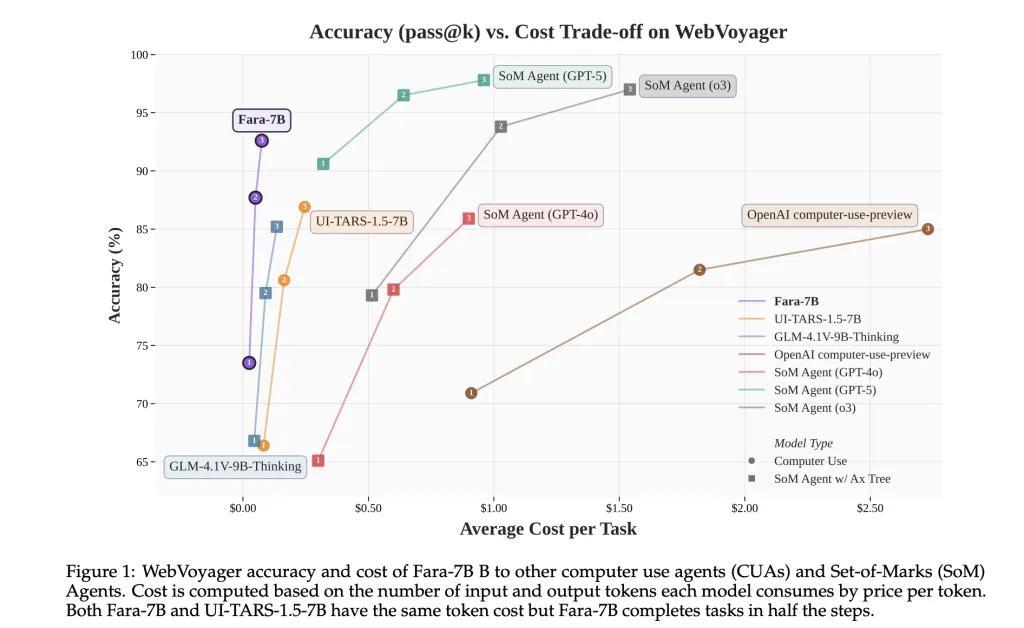

On these benchmarks, Fara-7B achieves success rates of 73.5 percent on WebVoyager, 34.1 percent on Online-Mind2Web, 26.2 percent on DeepShop, and 38.4 percent on WebTailBench. This outperforms the 7B Computer Use Agent baseline UI-TARS-1.5-7B, which scores 66.4, 31.3, 11.6, and 19.5, and compares well with larger systems such as OpenAI's computer-use-preview.
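
The size of the improvement differs a lot by benchmark. This short calculation uses only the success rates quoted above to report the absolute gain in percentage points and the ratio over the UI-TARS-1.5-7B baseline.

```python
FARA_7B = {"WebVoyager": 73.5, "Online-Mind2Web": 34.1, "DeepShop": 26.2, "WebTailBench": 38.4}
UI_TARS_1_5_7B = {"WebVoyager": 66.4, "Online-Mind2Web": 31.3, "DeepShop": 11.6, "WebTailBench": 19.5}


def compare(model: dict[str, float], baseline: dict[str, float]) -> dict[str, tuple[float, float]]:
    """Return (gain in percentage points, success-rate ratio) per benchmark."""
    return {b: (model[b] - baseline[b], model[b] / baseline[b]) for b in model}


if __name__ == "__main__":
    for bench, (gain, ratio) in compare(FARA_7B, UI_TARS_1_5_7B).items():
        print(f"{bench}: +{gain:.1f} points ({ratio:.2f}x)")
```

The largest gains are on DeepShop and WebTailBench, where the success rate roughly doubles, while WebVoyager and Online-Mind2Web improve by smaller margins.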

On WebVoyager, Fara-7B uses on average 124,000 input tokens and 1,100 output tokens per task, with about 16.5 actions. Using market token prices, the research team estimates a cost of about 0.025 dollars per task, compared with around 0.30 dollars for SoM agents backed by proprietary reasoning models such as GPT-5 and o3. Fara-7B uses a similar number of input tokens but about one tenth the output tokens of these agents.
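
Per-task cost is simply token counts times per-token prices. The sketch below uses the reported WebVoyager averages; the prices passed to `task_cost_usd` in the usage block are placeholders, not the rates the research team used.

```python
def task_cost_usd(input_tokens: int, output_tokens: int,
                  usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Token cost of one task given per-million-token prices."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


if __name__ == "__main__":
    # Per-task WebVoyager averages reported for Fara-7B.
    inp, out, actions = 124_000, 1_100, 16.5
    print(f"input tokens per action: {inp / actions:,.0f}")
    print(f"input share of tokens: {inp / (inp + out):.1%}")
    # Placeholder prices only; substitute real rates for a given provider.
    print(f"cost at placeholder prices: ${task_cost_usd(inp, out, 0.2, 0.6):.4f}")
    print(f"reported cost ratio: {0.30 / 0.025:.0f}x")
```

Input tokens make up about 99 percent of Fara-7B's per-task token count, so its cost is driven mostly by input pricing, and the reported figures imply roughly a 12x cost advantage per task.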

Key takeaways

- Fara-7B is a 7B-parameter, open-weight Computer Use Agent built on Qwen2.5-VL-7B that works directly from screenshots and predicts typing and mouse actions, without depending on accessibility trees at inference time.

- The model is trained on 145,603 verified browser trajectories, totaling 1,010,797 steps across 70,117 domains, generated by the FaraGen pipeline.

- Fara-7B achieves success rates of 73.5 percent on WebVoyager, 34.1 percent on Online-Mind2Web, 26.2 percent on DeepShop, and 38.4 percent on WebTailBench, improving substantially over the 7B UI-TARS-1.5 baseline on all four benchmarks.

- On WebVoyager, Fara-7B uses about 124,000 input tokens and 1,100 output tokens per task, for an estimated cost of about 0.025 dollars per task, compared with around 0.30 dollars for SoM agents backed by GPT-5 and o3 class reasoning models.

Editorial notes

Fara-7B is a practical step toward computer use agents that run on local hardware at low inference cost while preserving privacy. The combination of Qwen2.5-VL-7B, FaraGen synthetic trajectories, and WebTailBench offers a clear, well-documented path from multi-agent data generation to a single compact model that matches larger systems on key benchmarks while enforcing Critical Points and refusal safeguards.

Check out the paper, model weights, and technical details.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has more than two million monthly views, illustrating its popularity among readers.
