Meet 'kvcached': A Machine Learning Library to Enable a Virtualized, Elastic KV Cache for LLM Serving on Shared GPUs

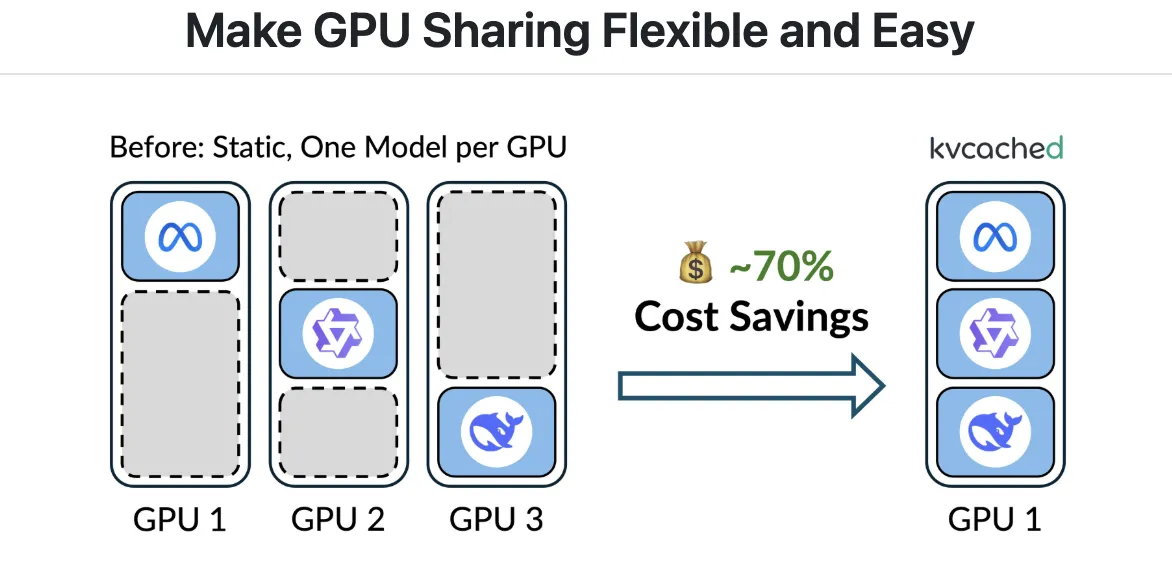

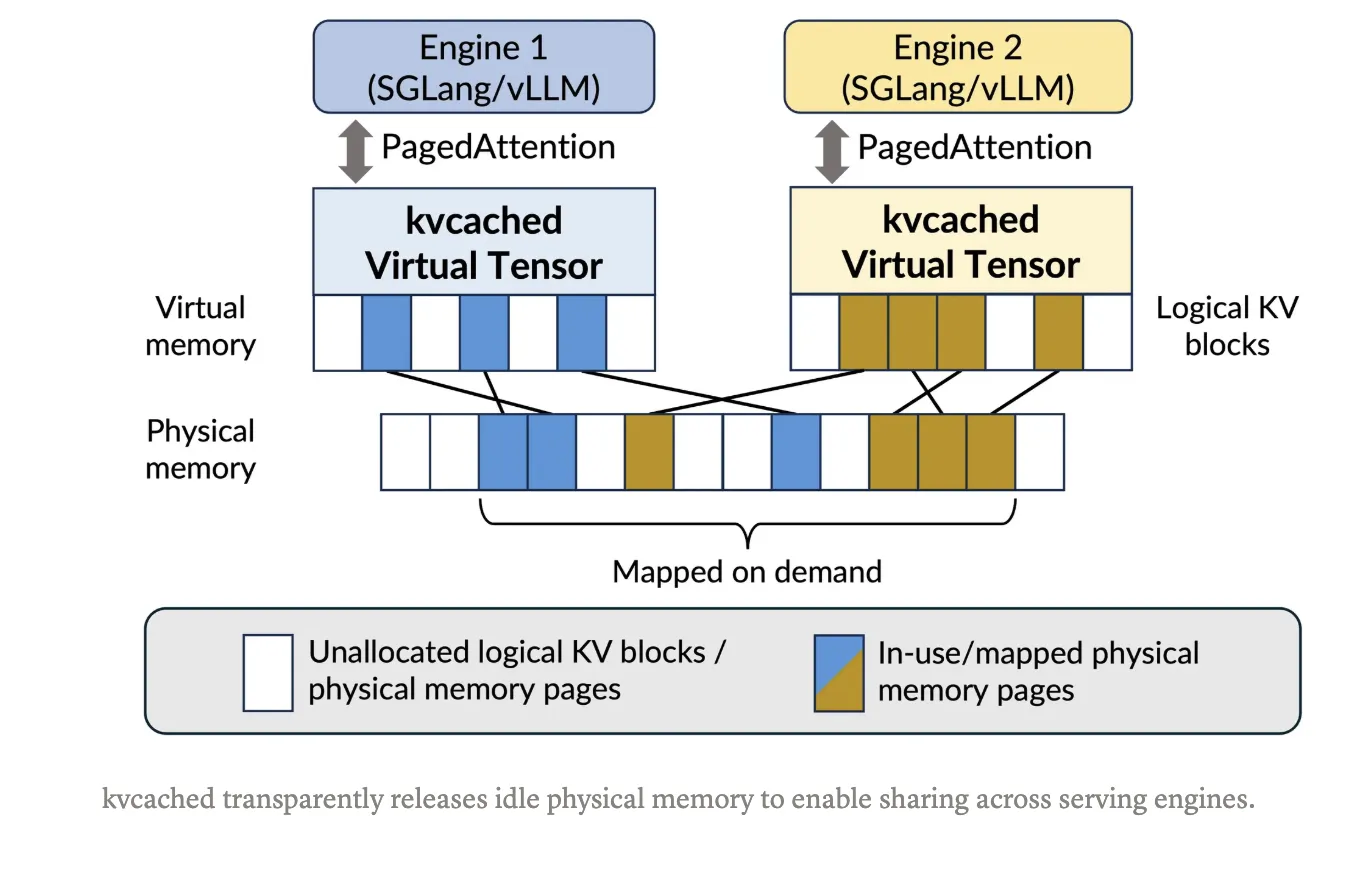

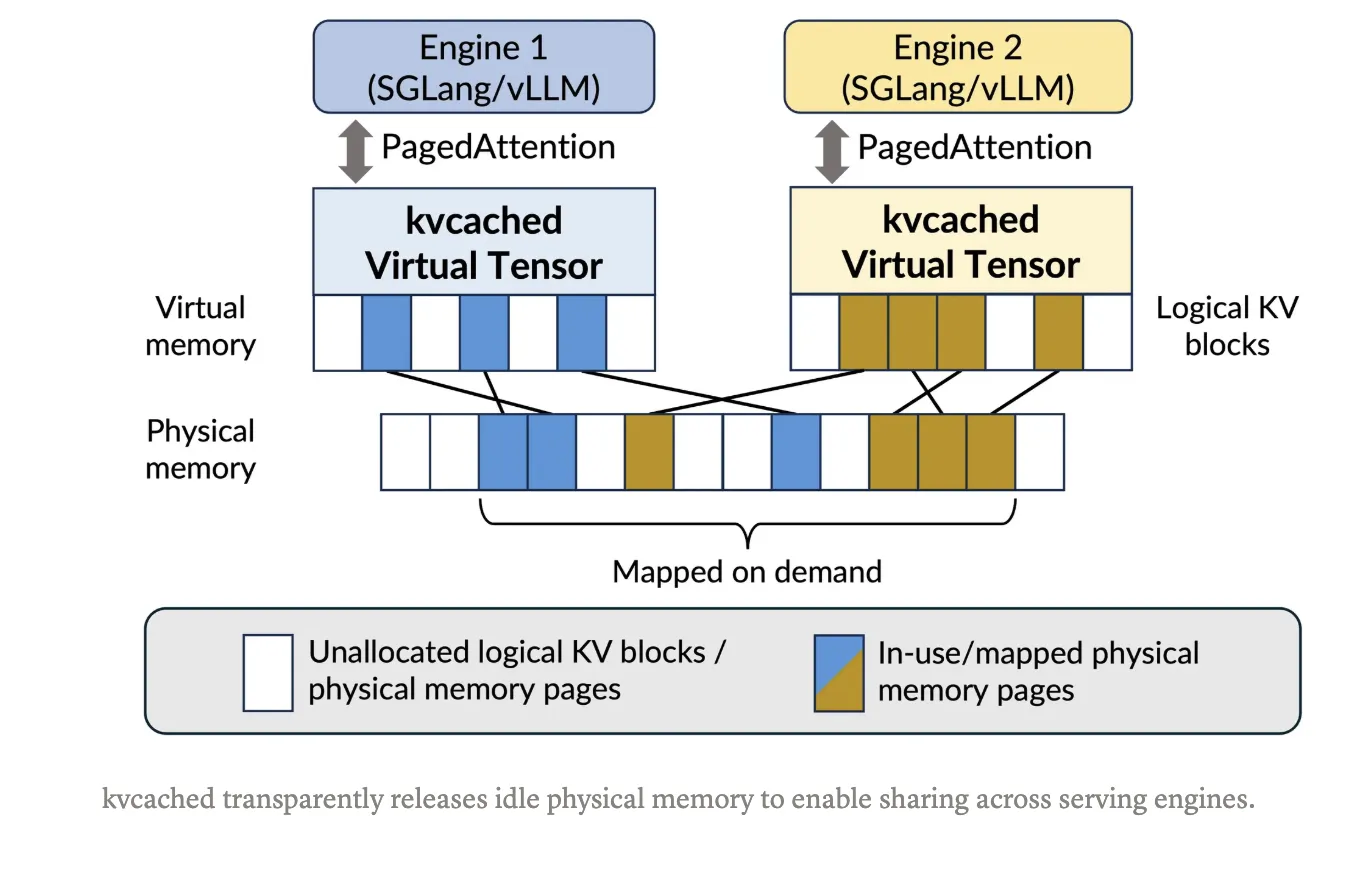

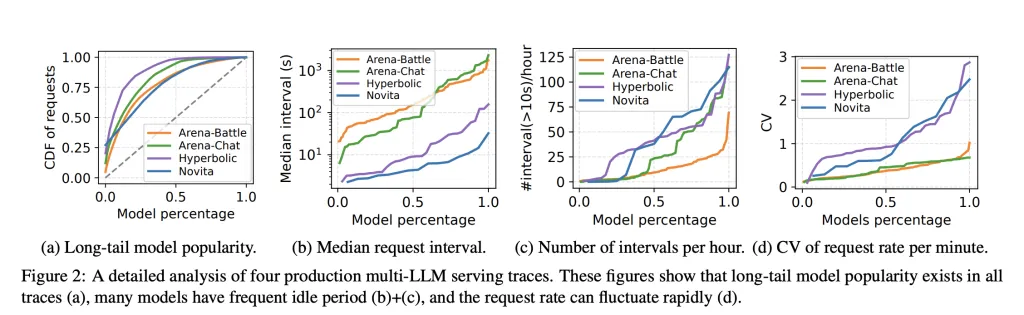

Large language model serving often wastes GPU memory because engines pre-reserve large, static KV cache regions for each model, whether its requests are bursty or idle. Meet 'kvcached', a library that enables a virtualized KV cache for LLM serving on shared GPUs. kvcached was developed through research at Berkeley's Sky Computing Lab (University of California, Berkeley) in collaboration with Rice University and UCLA, with significant input from collaborators and colleagues at Stanford University. It introduces an OS-style virtual memory abstraction for the KV cache: serving engines reserve contiguous virtual space first, then back only the active portions with physical GPU pages on demand. This decoupling raises memory utilization, reduces cold starts, and lets multiple models time-share and space-share a device without heavy engine rewrites.

What does kvcached change?

With kvcached, an engine creates a KV cache pool that is contiguous in the virtual address space. As tokens arrive, the library lazily maps physical GPU pages into that range using the CUDA virtual memory APIs. When requests complete or models go idle, the pages are unmapped and returned to a shared pool, which other colocated models can immediately reuse. This preserves simple pointer arithmetic in the kernels and removes the need for each engine to implement its own paging. The project targets SGLang and vLLM integration and is released under the Apache 2.0 license. Installation and a single quick-start command are documented in the Git repository.
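The reserve-then-map lifecycle can be modeled in a few lines. This is a minimal, CPU-only simulation under stated assumptions, not kvcached's API: the class names `PhysicalPagePool` and `VirtualKVCache`, their methods, and the 16-tokens-per-page granularity are all hypothetical. On a real GPU the mapping would go through CUDA driver virtual memory calls such as `cuMemAddressReserve`, `cuMemCreate` and `cuMemMap` rather than Python lists.

```python
from dataclasses import dataclass, field

TOKENS_PER_PAGE = 16  # hypothetical: tokens of KV that fit in one physical page


class PhysicalPagePool:
    """Shared free list of physical page IDs, standing in for GPU memory."""

    def __init__(self, num_pages: int) -> None:
        self.free = list(range(num_pages))

    def alloc(self) -> int:
        return self.free.pop()

    def release(self, page: int) -> None:
        self.free.append(page)


@dataclass
class VirtualKVCache:
    """One model's KV cache: a large virtual reservation, backed lazily."""

    pool: PhysicalPagePool
    virtual_pages: int  # size of the up-front virtual reservation, in pages
    page_table: dict = field(default_factory=dict)  # virtual page -> physical page
    tokens: int = 0

    @property
    def mapped_pages(self) -> int:
        return len(self.page_table)

    def append_tokens(self, n: int) -> None:
        """Map only as many physical pages as the new token count requires."""
        needed = -(-(self.tokens + n) // TOKENS_PER_PAGE)  # ceiling division
        if needed > self.virtual_pages:
            raise MemoryError("virtual reservation exhausted")
        if needed - self.mapped_pages > len(self.pool.free):
            raise MemoryError("shared physical pool exhausted")
        for vpage in range(self.mapped_pages, needed):
            self.page_table[vpage] = self.pool.alloc()
        self.tokens += n

    def release_all(self) -> None:
        """Unmap every page and return it to the shared pool for reuse."""
        for page in self.page_table.values():
            self.pool.release(page)
        self.page_table.clear()
        self.tokens = 0


pool = PhysicalPagePool(num_pages=8)
model_a = VirtualKVCache(pool, virtual_pages=1024)  # reserves far more than is backed
model_b = VirtualKVCache(pool, virtual_pages=1024)
model_a.append_tokens(20)                    # 20 tokens -> 2 pages mapped
print(model_a.mapped_pages, len(pool.free))  # 2 6
model_a.release_all()                        # model A goes idle
model_b.append_tokens(128)                   # model B grows into all 8 pages
print(model_b.mapped_pages, len(pool.free))  # 8 0
```

The virtual reservation is cheap because it holds no physical pages. Only `append_tokens` consumes the shared pool, which is why an idle model can hand its memory to a neighbor without being torn down.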

Why does it matter at scale?

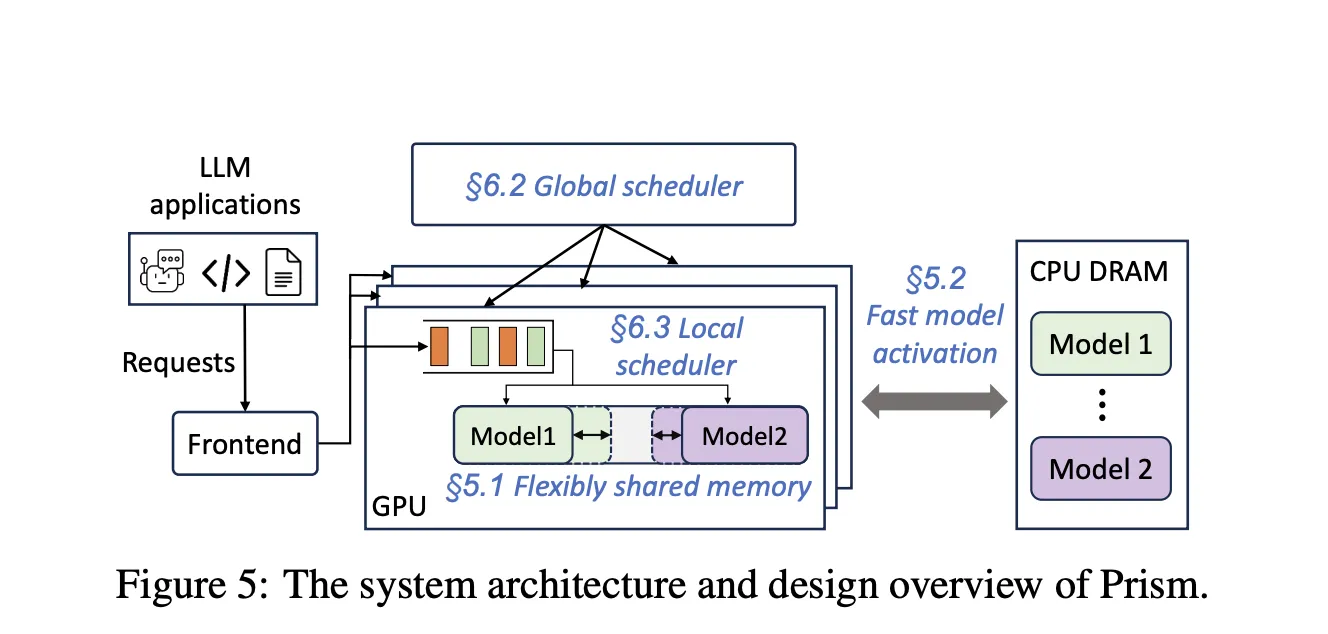

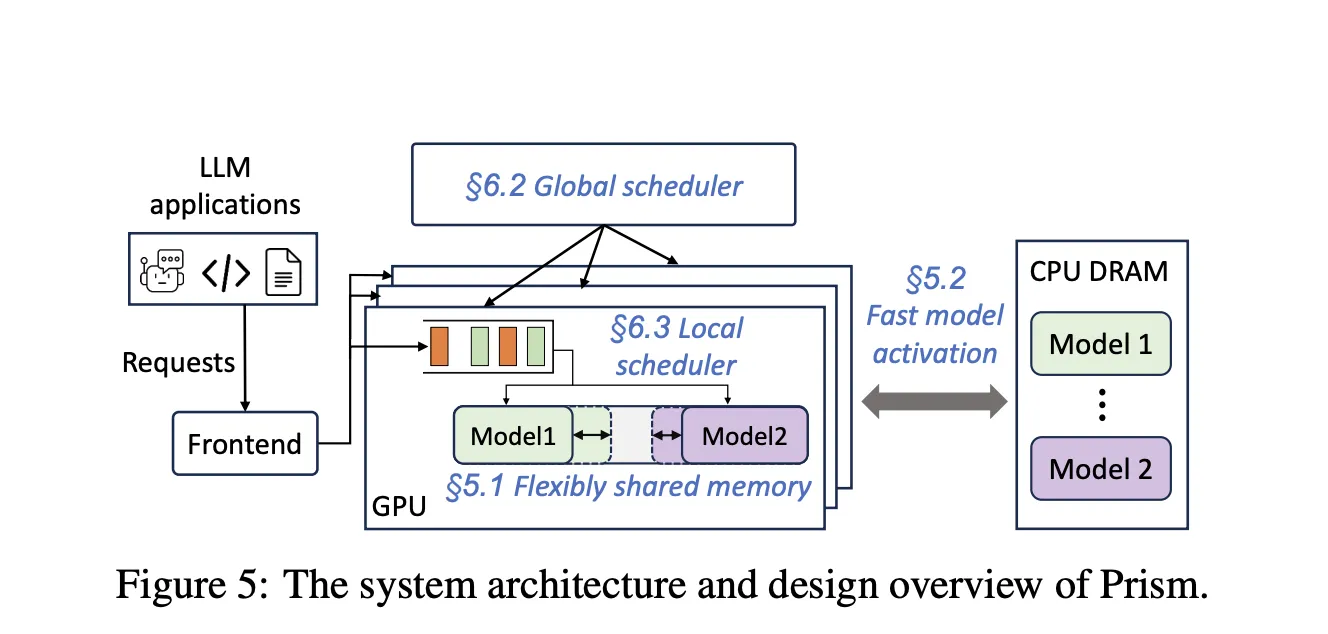

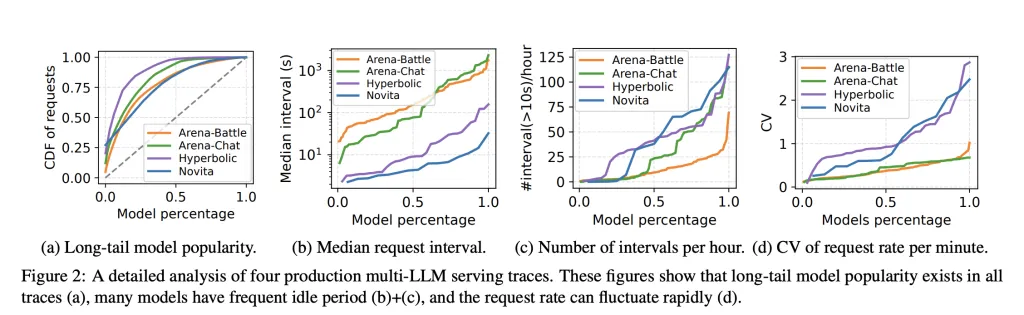

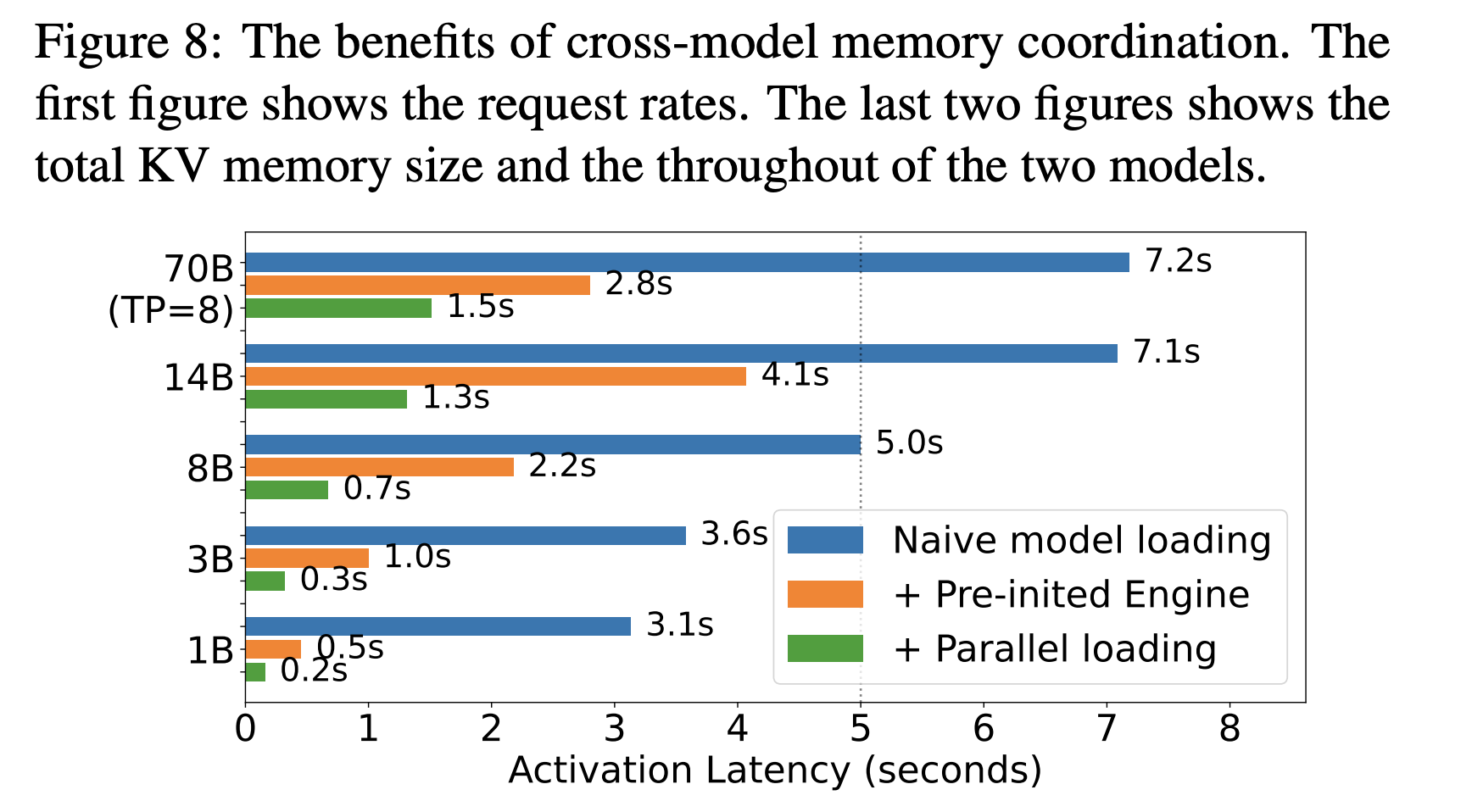

Production workloads host many models with long-tail traffic and spiky bursts. Static reservations leave memory stranded and slow down time to first token (TTFT) when models must be activated or swapped. The Prism research paper shows that multi-LLM serving needs cross-model memory coordination at runtime, not just compute scheduling. Prism implements on-demand mapping of physical pages to virtual pages together with a two-level scheduler, and reports more than 2 times cost savings and 3.3 times higher TTFT SLO attainment versus prior systems on real traces. kvcached focuses on the memory coordination primitive and provides a reusable component that brings this capability to mainstream engines.
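The stranded-memory argument can be made concrete with a toy calculation. Static reservation must provision every model for its own peak, so it needs the sum of the per-model peaks; a shared elastic pool only needs the peak of the combined demand at any instant. The traces below are made-up numbers for illustration, not data from Prism or kvcached.

```python
def static_pages(traces: dict[str, list[int]]) -> int:
    """Pages needed if every model reserves its own peak up front."""
    return sum(max(trace) for trace in traces.values())


def elastic_pages(traces: dict[str, list[int]]) -> int:
    """Pages needed if all models draw from one pool as demand arrives."""
    combined = [sum(step) for step in zip(*traces.values())]
    return max(combined)


# Hypothetical per-timestep KV page demand for three bursty models.
traces = {
    "model_a": [40, 2, 0, 0, 5, 0],
    "model_b": [0, 0, 35, 3, 0, 0],
    "model_c": [1, 0, 0, 0, 0, 30],
}
print(static_pages(traces))   # 105
print(elastic_pages(traces))  # 41
```

The gap between the two numbers is memory a static scheme strands. How large it is in practice depends on how correlated the models' bursts are: if every model peaks at the same moment, the two numbers converge.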

Performance Signals

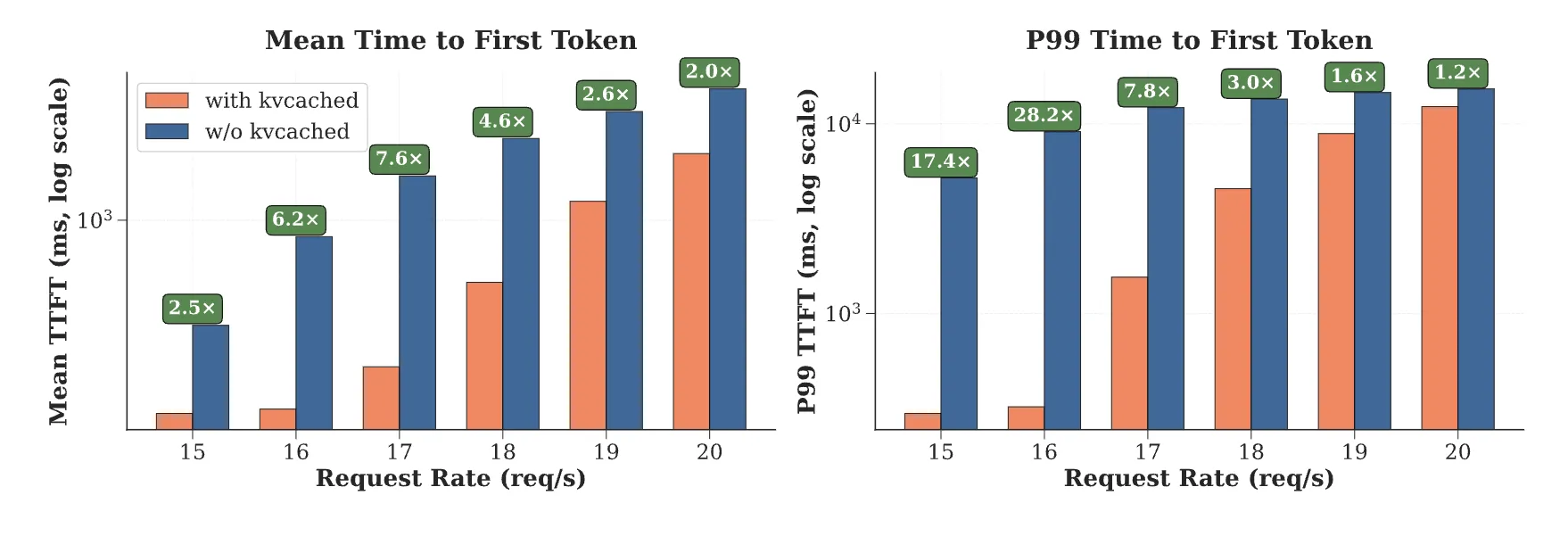

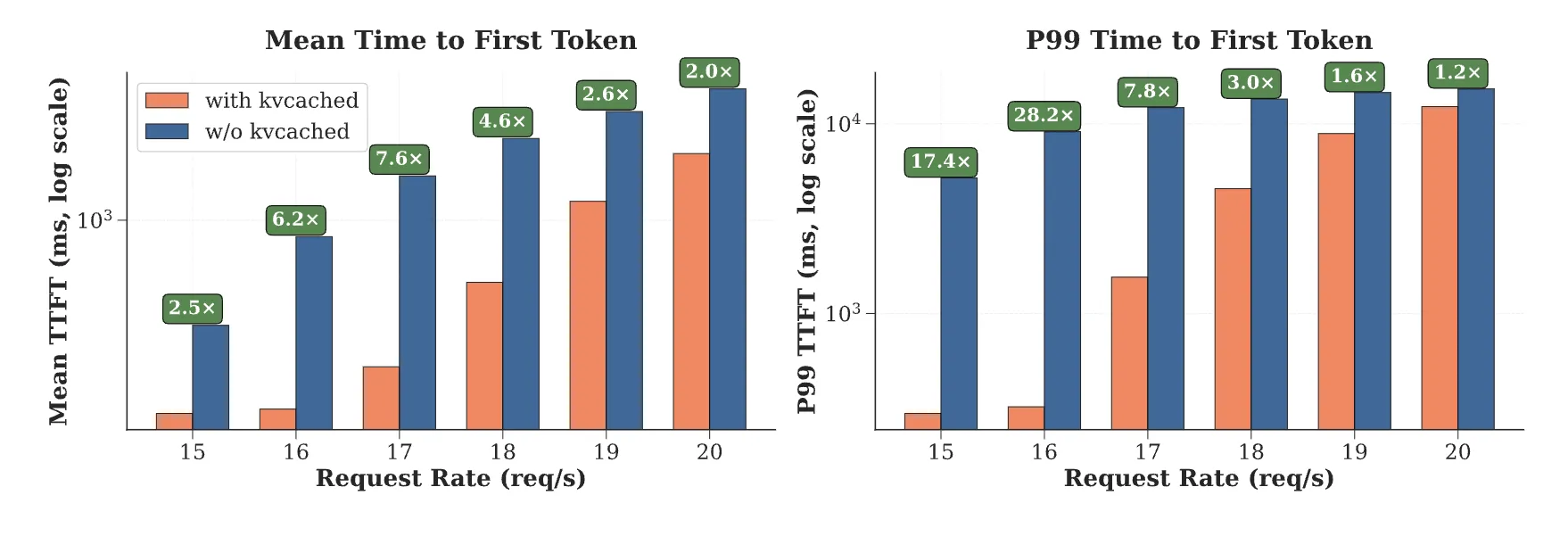

The kvcached team reports 1.2 times to 28 times faster time to first token in multi-model serving, due to immediate reuse of freed pages and the removal of large static allocations. These numbers come from multi-LLM scenarios in which activation latency and memory headroom dominate tail latency. The research team notes kvcached's compatibility with SGLang and vLLM.

How does it relate to recent research?

Recent work has moved from fixed partitioning to virtual memory based methods for KV management. Prism extends VMM-based allocation to multi-LLM settings with cross-model coordination and scheduling. Prior efforts such as vAttention explore CUDA VMM for single-model serving to avoid fragmentation without PagedAttention. The arc is clear: use virtual memory to keep the KV cache contiguous in virtual space, then map physical pages elastically as the workload evolves. kvcached turns this idea into a library, which makes it easier to adopt inside existing engines.
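Keeping the KV cache contiguous in virtual space means an attention kernel only does plain offset arithmetic; splitting that offset into a physical page and an in-page offset happens below the kernel, in the GPU's memory management unit. The sketch below emulates that translation step in software so it is visible. The 2 MiB page size, the per-token KV size and the function names are hypothetical values chosen for illustration.

```python
PAGE_BYTES = 2 * 1024 * 1024   # hypothetical 2 MiB physical page
BYTES_PER_TOKEN = 128 * 1024   # hypothetical per-token KV size for one layer


def virtual_offset(token_index: int) -> int:
    """What the kernel computes: contiguous pointer math, no block table."""
    return token_index * BYTES_PER_TOKEN


def translate(offset: int, page_table: dict[int, int]) -> tuple[int, int]:
    """What the MMU does: map a virtual offset to (physical page, offset in page)."""
    vpage, in_page = divmod(offset, PAGE_BYTES)
    if vpage not in page_table:
        raise LookupError(f"virtual page {vpage} is reserved but not mapped")
    return page_table[vpage], in_page


# Virtual pages 0 and 1 are backed by non-adjacent physical pages 7 and 3.
page_table = {0: 7, 1: 3}
print(translate(virtual_offset(5), page_table))   # (7, 655360)
print(translate(virtual_offset(20), page_table))  # (3, 524288)
```

Physical pages 7 and 3 are not adjacent, yet tokens 0 through 31 still sit at consecutive virtual offsets. That is the property that lets existing kernels run unchanged.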

Practical applications for devs

Colocation across models: Engines can colocate several small or medium models on one device. When one model goes idle, its KV pages are freed quickly and another model can expand its working set without restarting. This reduces head-of-line blocking during bursts and improves TTFT SLO attainment.
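At runtime, the colocation benefit comes down to an admission decision when a burst hits. Under a static split, a busy model is capped at its own partition even while its neighbor sits idle; with a shared pool, it can use any page nobody else holds. The two functions, the 50/50 partition and the page counts are hypothetical, and neither kvcached nor SGLang/vLLM exposes functions with these names.

```python
def admit_static(partition: dict[str, int], used: dict[str, int],
                 model: str, pages: int) -> bool:
    """Static split: a model may only grow within its own fixed partition."""
    return used[model] + pages <= partition[model]


def admit_shared(capacity: int, used: dict[str, int], pages: int) -> bool:
    """Shared pool: a model may take any page no other model currently holds."""
    return sum(used.values()) + pages <= capacity


capacity = 100
partition = {"small_a": 50, "small_b": 50}  # hypothetical fixed split
used = {"small_a": 0, "small_b": 30}        # small_a is idle, small_b is busy

# small_b sees a burst that needs 45 more pages.
print(admit_static(partition, used, "small_b", 45))  # False: 75 > 50
print(admit_shared(capacity, used, 45))              # True: 75 <= 100
```

A request rejected under the static split has to wait in the queue, which is where head-of-line blocking during bursts comes from.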

Activation behavior: Prism reports activation times of about 0.7 seconds for an 8B model and about 1.5 seconds for a 70B model with streaming activation. kvcached benefits from the same principles, because virtual reservations let engines prepare addresses ahead of time and then map pages as tokens arrive.

Autoscaling for serverless LLMs: Fine-grained page mapping makes it feasible to scale replicas more frequently and to keep cold models in a warm state with a minimal memory footprint. This enables tighter autoscaling loops and reduces the blast radius of hot spots.

Offloading and future work: Virtual memory opens the door to offloading KV to host memory or NVMe when the access pattern allows. NVIDIA's recent guide on managed memory for KV offload on GH200-class systems shows how unified address spaces can extend capacity beyond GPU memory. The kvcached maintainers also discuss offload and other future directions publicly. Verify throughput and latency in your own pipeline, because access locality and PCIe topology have strong effects.
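One way to picture offload is a two-tier page store in which the GPU tier spills to host memory instead of discarding pages. The sketch below uses least-recently-used eviction purely as an illustrative policy; kvcached does not describe an offload policy, and the `TieredKVPages` class and its method are hypothetical.

```python
from collections import OrderedDict


class TieredKVPages:
    """Hypothetical two-tier page store: GPU pages with LRU spill to host."""

    def __init__(self, gpu_capacity: int) -> None:
        self.gpu_capacity = gpu_capacity
        self.gpu: OrderedDict[int, bytes] = OrderedDict()  # page id -> data, LRU first
        self.host: dict[int, bytes] = {}

    def access(self, page_id: int, data: bytes = b"") -> str:
        """Bring a page onto the GPU tier and report where it was found."""
        if page_id in self.gpu:
            self.gpu.move_to_end(page_id)  # mark as most recently used
            return "gpu"
        origin = "host" if page_id in self.host else "new"
        payload = self.host.pop(page_id, data)
        if len(self.gpu) >= self.gpu_capacity:
            victim, victim_data = self.gpu.popitem(last=False)  # least recently used
            self.host[victim] = victim_data  # offload instead of discarding
        self.gpu[page_id] = payload
        return origin


store = TieredKVPages(gpu_capacity=2)
print([store.access(p) for p in (1, 2, 3, 1)])  # ['new', 'new', 'new', 'host']
print(sorted(store.gpu), sorted(store.host))    # [1, 3] [2]
```

Every "host" hit in a real system is a transfer over PCIe or a coherent link, so whether this pays off depends on access locality, exactly as the caveat above says.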

Key takeaways

- kvcached virtualizes the KV cache using GPU virtual memory: engines reserve contiguous virtual space and map physical pages on demand, enabling elastic allocation and reclamation under dynamic loads.

- It integrates with mainstream inference engines, specifically SGLang and vLLM, and is released under Apache 2.0, which makes adoption and modification straightforward for production serving stacks.

- Public benchmarks report 1.2 times to 28 times faster time to first token in multi-model serving, due to immediate reuse of freed KV pages and the removal of large static reservations.

- Prism shows that cross-model memory coordination, implemented with on-demand mapping and two-level scheduling, delivers more than 2 times cost savings and 3.3 times higher TTFT SLO attainment on real traces; kvcached supplies this memory primitive in a form mainstream engines can reuse.

- For clusters that host many models with bursty, long-tail traffic, a virtualized KV cache allows safe colocation, faster activation, and tighter autoscaling, with reported activation times of around 0.7 seconds for an 8B model and 1.5 seconds for a 70B model in the Prism evaluation.

kvcached is an effective approach to GPU memory virtualization for LLM serving, not a full operating system, and that distinction matters. The library reserves virtual address space for the KV cache and maps physical pages on demand, which enables elastic sharing across models with minimal engine changes. This aligns with evidence that cross-model memory coordination is essential for multi-model workloads and improves SLO attainment and cost on realistic traces. Overall, kvcached advances GPU memory coordination for LLM serving; its production value depends on per-cluster validation.

Check out the GitHub repo, Paper 1, Paper 2 and the technical details.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and developer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform draws more than two million monthly views, which shows its popularity among readers.
