LongCat-Flash-Omni: A SOTA Open-Source Omni-Modal Model with 560B Parameters and 27B Activated, Which Prioritizes Real-Time Audio-Visual Interaction

How do you design a single model that can listen, see, read and respond in real time across text, image, video and audio without losing efficiency? Meituan's LongCat team has released LongCat Flash Omni, an open-source omni-modal model with 560 billion parameters and about 27 billion active per token, built on the shortcut-connected Mixture of Experts design that LongCat Flash introduced. The model extends the text backbone to vision, video and audio, and it keeps a 128K context so it can handle long conversations and document-level understanding in a single stack.
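To give a sense of scale for the sparse activation, the two parameter counts quoted above can be turned into a fraction. This is a minimal sketch that uses only those two figures; the function and constant names are illustrative and do not come from the release.

```python
TOTAL_PARAMS_B = 560.0   # total parameters in billions, as quoted in the article
ACTIVE_PARAMS_B = 27.0   # approximate active parameters per token, in billions


def active_fraction(active_b: float, total_b: float) -> float:
    """Return the share of parameters used for a single token."""
    if total_b <= 0:
        raise ValueError("total_b must be positive")
    return active_b / total_b


fraction = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
print(f"{fraction:.1%} of parameters are active per token")
```

Under these figures, a little under 5 percent of the weights take part in any one token, which is the basic reason a 560B model can stay within real-time compute budgets.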

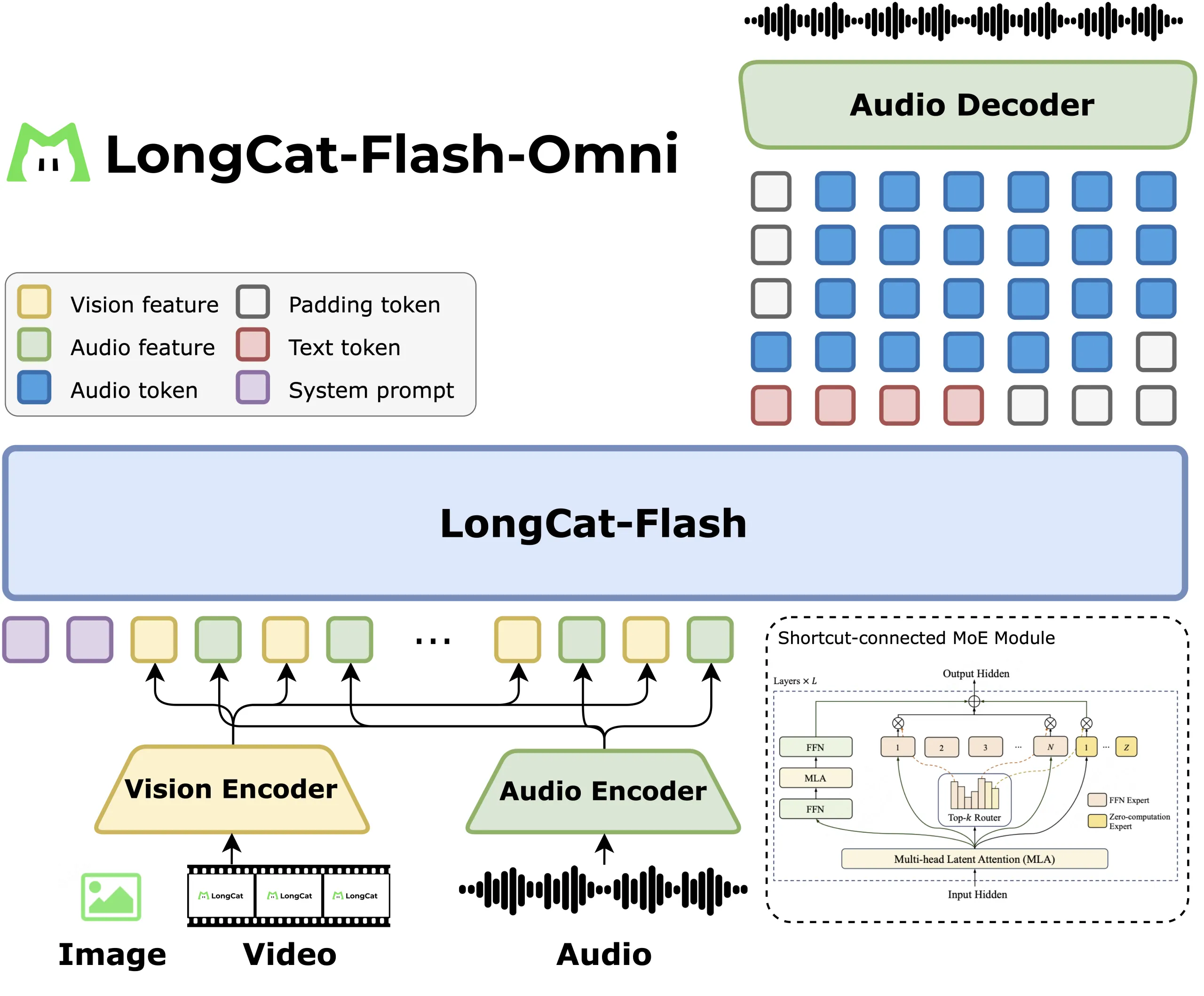

Architecture and modal attachments

LongCat Flash Omni keeps the language model unchanged and adds perception modules. A LongCat ViT encoder processes both images and video frames, so there is no separate video tower. An audio encoder together with the LongCat Audio Codec turns speech into discrete tokens, and the decoder can then output speech from the same LLM stream, which enables real-time audio-visual interaction.

Streaming and feature interleaving

The research team describes chunk-wise audio-visual feature interleaving, where audio features, video features and timestamps are packed into 1-second segments. Video is sampled at 2 frames per second by default, and the rate is then adjusted according to the length of the video. The report does not tie the sampling rule to the speaking phases of the user or the model, so the accurate description is duration-conditioned sampling. This keeps latency low and still provides spatial context for GUI, OCR and video QA tasks.
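The 1-second packing and the 2 FPS default described above can be sketched as follows. This is an illustration under stated assumptions, not the team's implementation: the frame budget `MAX_FRAMES`, the 4 Hz audio feature rate, the order of items inside a chunk, and all function names are hypothetical, and timestamps stand in for real feature tensors.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

CHUNK_SECONDS = 1.0   # segment length described in the article
DEFAULT_FPS = 2.0     # default video sampling rate described in the article
MAX_FRAMES = 256      # hypothetical frame budget, NOT taken from the report


def sampling_fps(duration_s: float) -> float:
    """Hypothetical duration-conditioned rule: lower the rate for long videos."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return min(DEFAULT_FPS, MAX_FRAMES / duration_s)


def frame_times(duration_s: float, rate_hz: float) -> List[float]:
    """Timestamps of evenly spaced samples; they stand in for feature vectors."""
    return [i / rate_hz for i in range(int(duration_s * rate_hz))]


@dataclass
class Chunk:
    start_s: float
    video: List[float] = field(default_factory=list)
    audio: List[float] = field(default_factory=list)


def pack_chunks(video_times: List[float], audio_times: List[float],
                duration_s: float) -> List[Chunk]:
    """Group video and audio samples into consecutive 1-second chunks."""
    n = max(1, math.ceil(duration_s / CHUNK_SECONDS))
    chunks = [Chunk(start_s=i * CHUNK_SECONDS) for i in range(n)]
    for t in video_times:
        chunks[min(int(t // CHUNK_SECONDS), n - 1)].video.append(t)
    for t in audio_times:
        chunks[min(int(t // CHUNK_SECONDS), n - 1)].audio.append(t)
    return chunks


def interleave(chunks: List[Chunk]) -> List[Tuple[str, float]]:
    """Flatten chunks into one stream: timestamp, then video, then audio (assumed order)."""
    seq: List[Tuple[str, float]] = []
    for c in chunks:
        seq.append(("time", c.start_s))
        seq.extend(("video", t) for t in c.video)
        seq.extend(("audio", t) for t in c.audio)
    return seq


duration = 3.0
fps = sampling_fps(duration)
chunks = pack_chunks(frame_times(duration, fps), frame_times(duration, 4.0), duration)
sequence = interleave(chunks)
```

Because each chunk is complete after one second of input, a model consuming `sequence` can start responding without waiting for the rest of the stream, which is the latency argument the article makes.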

Curriculum from text to omni

Training follows a staged curriculum. The research team first trains the LongCat Flash text backbone, which activates 18.6B to 31.3B parameters per token, then applies continued pretraining, then context extension to 128K, then encoder alignment.

Systems design, modality-decoupled parallelism

Because the encoders and the LLM have different compute patterns, Meituan uses modality-decoupled parallelism. The vision and audio encoders run with hybrid sharding and activation recomputation, the LLM runs with pipeline, context and expert parallelism, and a ModalityBridge aligns embeddings and gradients between them. The research team reports that multimodal supervised fine-tuning keeps more than 90 percent of the throughput of text-only training, which is the main systems result of this release.
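The split described above, with one set of strategies for the encoders and another for the LLM, can be written down as a small plan object, together with the ratio behind the 90 percent claim. This is a sketch only: the strategy labels follow the article's description, with the LLM's third strategy read as expert parallelism, while `ModulePlan`, `throughput_retention` and the throughput numbers are hypothetical and chosen purely to illustrate the calculation.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ModulePlan:
    name: str
    strategies: Tuple[str, ...]


# Strategy labels follow the article; grouping them into a plan is illustrative.
PLAN = (
    ModulePlan("vision_encoder", ("hybrid_sharding", "activation_recomputation")),
    ModulePlan("audio_encoder", ("hybrid_sharding", "activation_recomputation")),
    ModulePlan("llm", ("pipeline_parallel", "context_parallel", "expert_parallel")),
)


def throughput_retention(multimodal_tps: float, text_only_tps: float) -> float:
    """Ratio of multimodal training throughput to text-only throughput."""
    if text_only_tps <= 0:
        raise ValueError("text_only_tps must be positive")
    return multimodal_tps / text_only_tps


# Hypothetical tokens-per-second figures, NOT measurements from the report.
retention = throughput_retention(multimodal_tps=9_200.0, text_only_tps=10_000.0)
print(f"retention = {retention:.0%}")
```

Keeping the plans separate per module is the point of the design: each component can use the scheme that suits its compute pattern instead of sharing one compromise layout.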

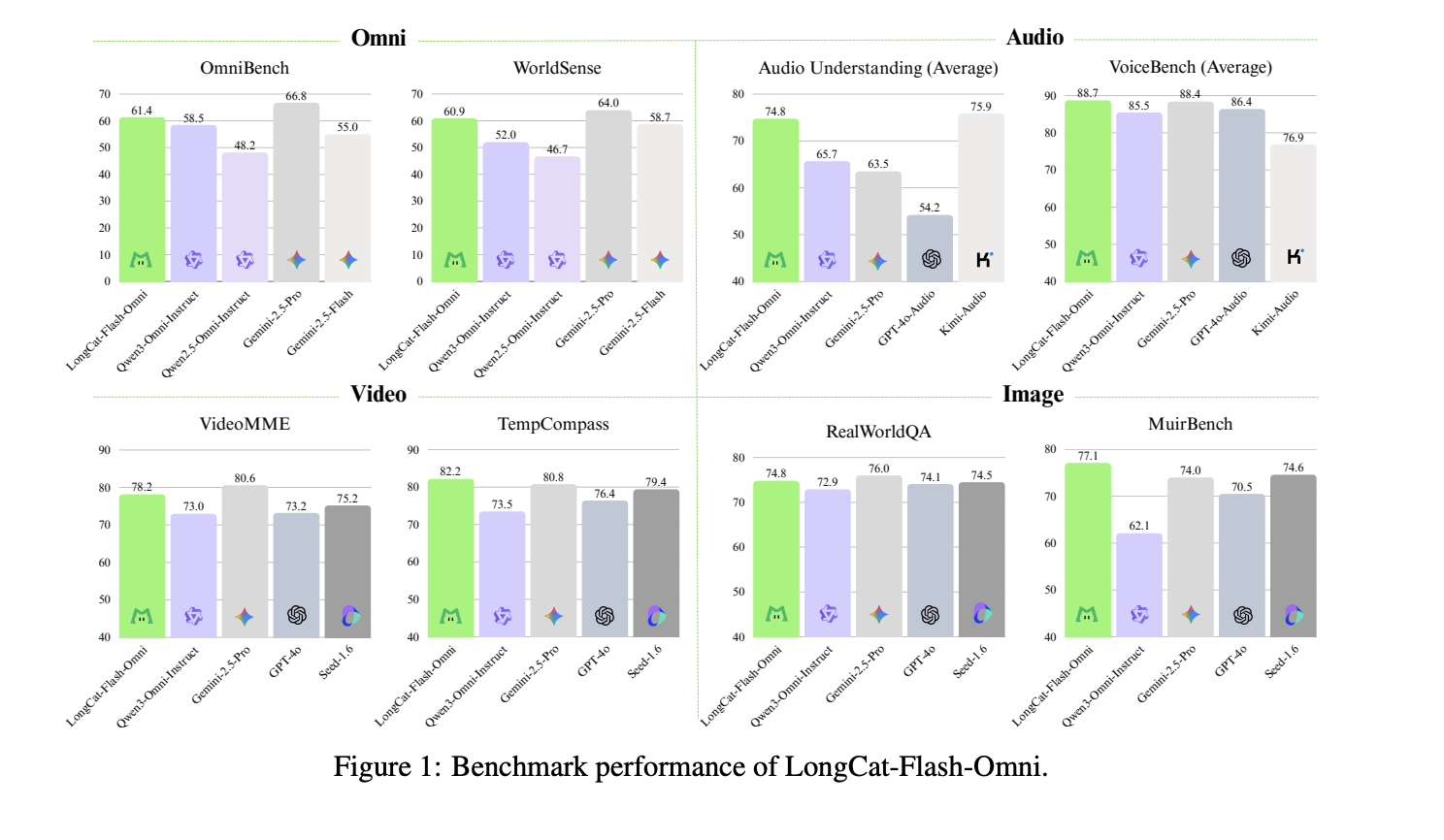

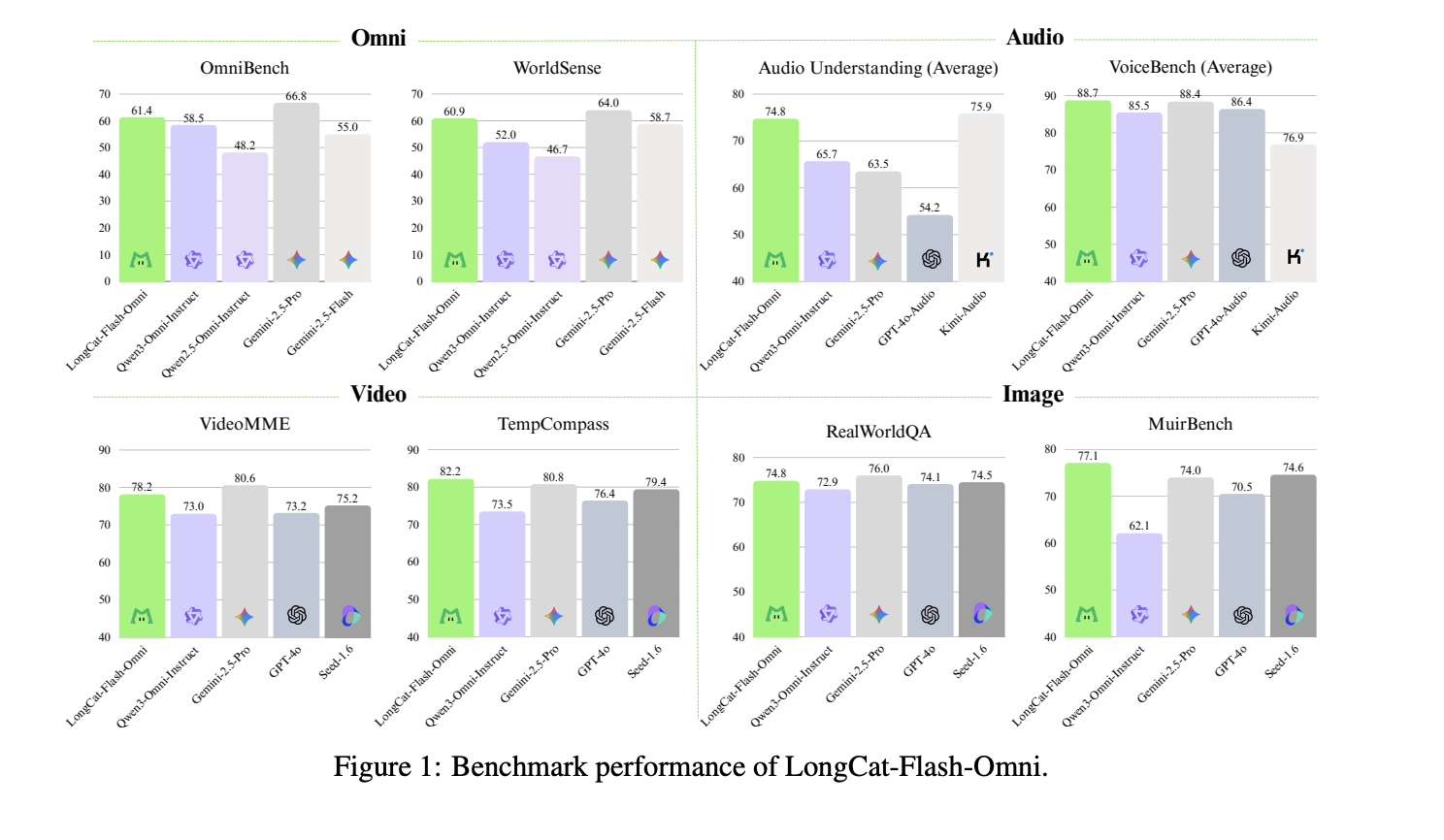

Benchmarks and positioning

LongCat Flash Omni reaches 61.4 on OmniBench, which is higher than Qwen 3 Omni Instruct at 58.5 and Qwen 2.5 Omni, but lower than Gemini 2.5 Pro at 66.8. On VideoMME it scores 78.2, close to GPT-4o and Gemini 2.5 Flash, and on VoiceBench it reaches 88.7, slightly higher than GPT-4o Audio in the same table.

Key takeaways

- LongCat Flash Omni is an open-source omni-modal model built on Meituan's 560B MoE backbone. It activates about 27B parameters per token through the shortcut-connected Mixture of Experts design, so it keeps large capacity while limiting per-token compute.

- The model attaches unified vision and video encoding and a streaming audio path to the LongCat Flash LLM, using 2 FPS default video sampling with duration-conditioned adjustment, which is what enables real-time any-to-any interaction.

- LongCat Flash Omni scores 61.4 on OmniBench, above Qwen 3 Omni Instruct at 58.5, but below Gemini 2.5 Pro at 66.8.

- Meituan uses modality-decoupled parallelism: the vision and audio encoders run with hybrid sharding, the LLM runs with pipeline, context and expert parallelism, and the team reports more than 90 percent of text-only throughput for multimodal SFT, which is the main systems contribution of the release.

This release shows that Meituan is trying to make omni-modal interaction practical, not experimental. It keeps the 560B shortcut-connected Mixture of Experts with 27B activated, so the language backbone remains compatible with previous LongCat releases. It adds streaming audio-visual perception with 2 FPS default video sampling and duration-conditioned adjustment, so latency remains low without losing spatial grounding. It reports more than 90 percent of text-only throughput in multimodal supervised fine-tuning through modality-decoupled parallelism.


Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a strong foundation in statistical analysis, machine learning, and data engineering, Michal excels at turning complex datasets into actionable insights.
