IBM AI Team Releases Granite 4.0 Nano Series: Compact and Open Small Models Built for AI at the Edge

Small models are often held back by poor instruction tuning, weak tool-use formats, and missing governance. The IBM AI team has released Granite 4.0 Nano, a small model family aimed at local and edge inference with enterprise controls and open licensing. The family includes 8 models in two sizes, 350M and about 1B, with both hybrid SSM and transformer variants, each in base and instruct versions. Granite 4.0 Nano series models are released under the Apache 2.0 license with native architecture support in popular runtimes such as vLLM, llama.cpp, and MLX.

What's new in the Granite 4.0 Nano series?

Granite 4.0 Nano consists of four instruct models and their base counterparts. Granite 4.0 H 1B uses a hybrid SSM-based architecture and has about 1.5B parameters. Granite 4.0 H 350M uses the same hybrid approach at 350M. For maximum runtime portability, IBM also provides Granite 4.0 1B and Granite 4.0 350M as pure transformer versions.
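The family layout described above (two sizes, two architectures, base and instruct) can be enumerated in a few lines. The following is a minimal sketch; the naming scheme in `variant_name` is a hypothetical label format chosen for illustration, not a confirmed list of repository identifiers, so check the official model listing before using any name.

```python
from itertools import product

# Hypothetical labels for illustration only; these are NOT confirmed
# repository identifiers.
SIZES = ("350m", "1b")
ARCHITECTURES = ("hybrid", "transformer")  # "hybrid" = the H (SSM plus transformer) variants
STAGES = ("base", "instruct")


def variant_name(size: str, arch: str, stage: str) -> str:
    """Build an illustrative label such as 'granite-4.0-h-1b-instruct'."""
    parts = ["granite-4.0"]
    if arch == "hybrid":
        parts.append("h")
    parts.extend([size, stage])
    return "-".join(parts)


# 2 sizes x 2 architectures x 2 training stages = 8 variants.
VARIANTS = [variant_name(s, a, st) for s, a, st in product(SIZES, ARCHITECTURES, STAGES)]

if __name__ == "__main__":
    for name in VARIANTS:
        print(name)
    print(len(VARIANTS), "variants")
```

The point of the sketch is only the counting: the 8 models come from crossing three binary choices.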

| Granite release | Sizes in release | Architecture | License and governance | Key notes |
|---|---|---|---|---|
| Granite 13B, first watsonx Granite models | 13B base, 13B instruct, later 13B chat | Decoder-only transformer, 8K context | IBM enterprise terms, client protections | First public Granite models for watsonx, curated enterprise data, English focus |
| Granite Code Models (open) | 3B, 8B, 20B, 34B code, base and instruct | Decoder-only transformer, 2-stage code training on 116 languages | Apache 2.0 | First fully open Granite line, for code intelligence, paper 2405.04324, available on HF and GitHub |
| Granite 3.0 Language Models | 2B and 8B, base and instruct | Transformer, 128K context for instruct | Apache 2.0 | Business LLMs for RAG, tool use, summarization, shipped on watsonx and HF |
| Granite 3.1 Language Models (HF) | 1B A400M, 3B A800M, 2B, 8B | Transformer, 128K context | Apache 2.0 | Size ladder for enterprise tasks, both base and instruct, same Granite data recipe |
| Granite 3.2 Language Models (HF) | 2B instruct, 8B instruct | Transformer, 128K, better prompt handling | Apache 2.0 | Iterative quality bump on 3.x, keeps business alignment |
| Granite 3.3 Language Models (HF) | 2B base, 2B instruct, 8B base, 8B instruct, all 128K | Decoder-only transformer | Apache 2.0 | Latest 3.x line on HF before 4.0, adds FIM and better instruction following |
| Granite 4.0 Language Models | 3B micro, 3B H micro, 7B H tiny, 32B H small, plus transformer variants | Hybrid Mamba 2 plus transformer for H, pure transformer for compatibility | Apache 2.0, ISO 42001, cryptographically signed | Start of the hybrid generation, lower memory, agent friendly, same governance across sizes |
| Granite 4.0 Nano Language Models | 1B H, 1B H instruct, 350M H, 350M H instruct, 2B transformer, 2B transformer instruct, 0.4B transformer, 0.4B transformer instruct | H models are hybrid SSM plus transformer, non-H are pure transformer | Apache 2.0, ISO 42001, signed, same 4.0 pipeline | Smallest Granite models, made for edge, local and browser, run on vLLM, llama.cpp, MLX, watsonx |

Architecture and training

The H variants interleave SSM layers with transformer layers. This hybrid design reduces memory growth compared with pure attention, while keeping the generality of transformer blocks. The Nano models did not use a reduced data pipeline. They were trained with the same Granite 4.0 methodology on more than 15T tokens, then instruction tuned to deliver solid tool use and instruction following. This carries strengths over from the larger Granite 4.0 models to sub-2B scales.
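The memory argument can be made concrete with a back-of-the-envelope estimate. The sketch below compares the key/value cache of attention layers, which grows linearly with sequence length, against the fixed-size recurrent state of SSM layers. All layer counts, head sizes, and state sizes here are made-up illustrative values, not Granite 4.0 Nano's actual configuration.

```python
def kv_cache_bytes(seq_len, n_attn_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Key/value cache for attention layers: grows linearly with seq_len."""
    # The factor 2 accounts for storing both keys and values.
    return 2 * n_attn_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


def ssm_state_bytes(n_ssm_layers, state_values_per_layer, bytes_per_value=2):
    """Recurrent state for SSM layers: fixed size, independent of seq_len."""
    return n_ssm_layers * state_values_per_layer * bytes_per_value


# Illustrative (assumed) configuration, NOT the real model dimensions.
N_KV_HEADS = 8
HEAD_DIM = 64
STATE_VALUES_PER_LAYER = 128 * 1024


def pure_transformer_bytes(seq_len, n_layers=40):
    """Every layer is attention, so every layer keeps a growing cache."""
    return kv_cache_bytes(seq_len, n_layers, N_KV_HEADS, HEAD_DIM)


def hybrid_bytes(seq_len, n_attn_layers=4, n_ssm_layers=36):
    """A few attention layers keep a growing cache; SSM layers add a constant."""
    return (kv_cache_bytes(seq_len, n_attn_layers, N_KV_HEADS, HEAD_DIM)
            + ssm_state_bytes(n_ssm_layers, STATE_VALUES_PER_LAYER))


if __name__ == "__main__":
    for seq_len in (1_024, 8_192, 65_536):
        pure_mib = pure_transformer_bytes(seq_len) / 2**20
        hybrid_mib = hybrid_bytes(seq_len) / 2**20
        print(f"seq_len={seq_len:>6}: pure={pure_mib:8.1f} MiB  hybrid={hybrid_mib:8.1f} MiB")
```

In this toy setup the hybrid stack pays a fixed cost for the SSM state, so the saving only shows up as the context gets longer. That is consistent with the claim being about memory growth rather than about the footprint at very short contexts.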

Benchmarks and competitive context

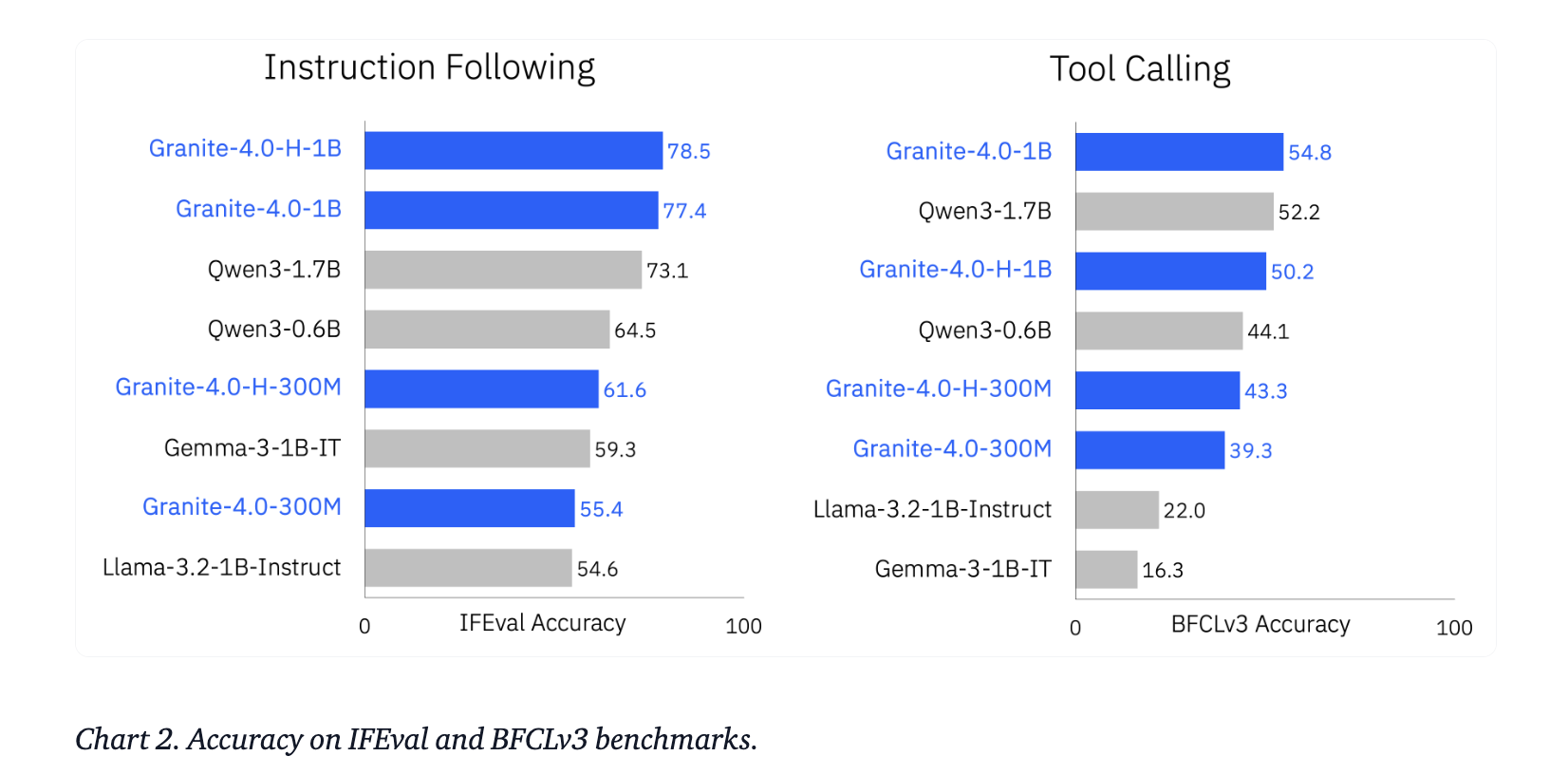

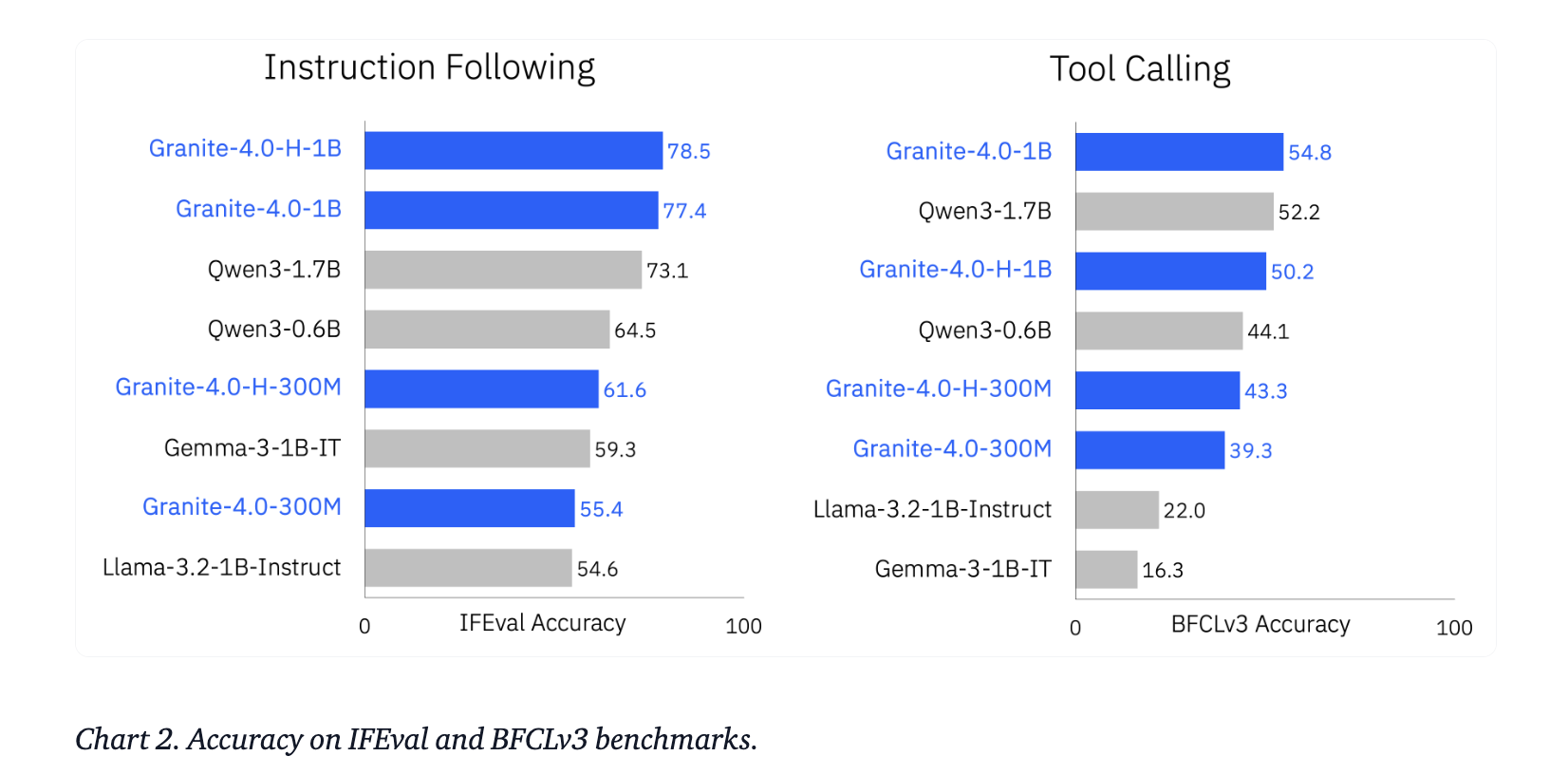

IBM compares Granite 4.0 Nano with other sub-2B models, including Qwen, Gemma, and LiquidAI LFM. The reported aggregates show a significant increase in capabilities across general knowledge, math, code, and safety at similar parameter budgets. On agent tasks, the models outperform several peers on IFEval and on the Berkeley Function Calling Leaderboard v3 (BFCLv3).
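Function-calling benchmarks broadly test whether a model emits a well-formed call to the right tool with the right arguments. The sketch below shows that kind of check in its simplest form. It is not the BFCL harness and not Granite's chat template; the `TOOLS` schema, the `get_weather` tool, and the `{"name": ..., "arguments": ...}` call format are all assumptions made for illustration.

```python
import json
from typing import Tuple

# Hypothetical tool schema, for illustration only.
TOOLS = {
    "get_weather": {"required": {"city": str}, "optional": {"unit": str}},
}


def check_tool_call(raw: str, tools: dict = TOOLS) -> Tuple[bool, str]:
    """Validate a model-emitted call of the (assumed) form
    {"name": "...", "arguments": {...}} and return (ok, reason)."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError:
        return False, "not valid JSON"
    if not isinstance(call, dict):
        return False, "call must be a JSON object"

    name = call.get("name")
    if not isinstance(name, str) or name not in tools:
        return False, "unknown tool"
    spec = tools[name]

    args = call.get("arguments")
    if not isinstance(args, dict):
        return False, "arguments must be an object"

    # Every required argument must be present with the expected type.
    for key, expected in spec["required"].items():
        if key not in args:
            return False, f"missing argument: {key}"
        if not isinstance(args[key], expected):
            return False, f"wrong type for argument: {key}"

    # Remaining arguments must be declared optional and correctly typed.
    for key, value in args.items():
        if key in spec["required"]:
            continue
        if key not in spec["optional"]:
            return False, f"unexpected argument: {key}"
        if not isinstance(value, spec["optional"][key]):
            return False, f"wrong type for argument: {key}"
    return True, "ok"


if __name__ == "__main__":
    print(check_tool_call('{"name": "get_weather", "arguments": {"city": "Paris"}}'))
    print(check_tool_call('{"name": "get_weather", "arguments": {}}'))
```

A check like this is useful in an edge deployment as well: a small model's output can be rejected or retried before any tool is actually executed.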

Key Takeaways

- IBM released 8 Granite 4.0 Nano models, at 350M and about 1B, in hybrid SSM and transformer variants and in base and instruct versions, all under Apache 2.0.

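- The hybrid H models, Granite 4.0 H 1B at about 1.5B parameters and Granite 4.0 H 350M at about 350M, were trained with the same Granite 4.0 recipe on more than 15T tokens, so their capability is inherited from the larger family rather than from a reduced data branch.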

- The IBM team reports that Granite 4.0 Nano is competitive with other sub-2B models such as Qwen, Gemma and LiquidAI LFM, and that it outperforms several of them on IFEval and BFCLv3, which matter for tool-using agents.

- All Granite 4.0 models, including Nano, are cryptographically signed, certified under ISO 42001 and released for enterprise use, which gives them provenance and governance that typical small community models lack.

- The models are available on Hugging Face and IBM watsonx.ai with runtime support for vLLM, llama.cpp and MLX, which makes local, edge and browser-level deployments practical for software teams.

IBM is doing the right thing here: it takes the same Granite 4.0 training pipeline, the same 15T token scale, and the hybrid Mamba 2 plus transformer architecture, and brings them down to 350M and about 1B so that edge workloads get the same governance story as the larger Granite models. The models are Apache 2.0 licensed, ISO 42001 aligned, cryptographically signed, and already runnable in vLLM, llama.cpp and MLX. Overall, this is a clean and sensible way to deploy small LLMs.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than two million monthly views, which shows its popularity among readers.
