MiniMax Releases MiniMax M2: A Mini Open Model Built for Max Coding and Agentic Workflows at 8% the Price of Claude Sonnet and ~2x Faster

Can an open-source MoE truly power agentic coding workflows at a fraction of flagship model costs while sustaining long-horizon tool use across MCP, shell, browser, retrieval, and code? The MiniMax team has just released MiniMax-M2, a Mixture of Experts (MoE) model optimized for coding and agent workflows. The weights are published on Hugging Face under the MIT license, and the model is positioned for end-to-end tool use, multi-file editing, and long-horizon plans, while keeping memory use and latency in check during agent loops.

Architecture and why does activation size matter?

MiniMax-M2 is a compact MoE that routes each token through about 10B active parameters. The smaller activations reduce memory pressure and tail latency in plan, act, and verify loops, and allow more concurrent runs in CI, browsing, and retrieval chains. This activation budget is what underpins the speed and cost claims relative to dense models of similar quality.
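To make "active parameters per token" concrete, here is a toy sketch of generic top-k MoE routing in plain Python: a router scores every expert, but only the k best-scoring experts actually run for a given token, so per-token compute scales with k rather than with the total number of experts. The expert count, top-k value, hidden size, and linear "experts" are hypothetical illustrations; the article does not describe MiniMax-M2's actual router or expert layout.

```python
import math
import random

# Hypothetical toy sizes for illustration only; MiniMax-M2's real expert
# count and routing top-k are not stated in this article.
NUM_EXPERTS = 8
TOP_K = 2
HIDDEN = 4


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(seed):
    """Build a toy 'expert': a random HIDDEN x HIDDEN linear map."""
    rng = random.Random(seed)
    w = [[rng.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(HIDDEN)]
    return lambda x: [sum(w[i][j] * x[j] for j in range(HIDDEN)) for i in range(HIDDEN)]


def moe_forward(x, gate_weights, experts, top_k=TOP_K):
    """Route one token to its top_k experts and mix their outputs.

    Returns (output vector, indices of the experts that actually ran)."""
    # Router score per expert: dot product of the token with that expert's gate vector.
    scores = [sum(g * xi for g, xi in zip(gate, x)) for gate in gate_weights]
    # Keep only the top_k experts; the others are never evaluated for this token.
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalize the router weights over the chosen experts only.
    probs = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for p, i in zip(probs, chosen):
        y = experts[i](x)
        out = [o + p * yi for o, yi in zip(out, y)]
    return out, chosen


rng = random.Random(0)
gate_weights = [[rng.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(NUM_EXPERTS)]
experts = [make_expert(seed) for seed in range(NUM_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]
output, active = moe_forward(token, gate_weights, experts)
print("experts run for this token:", active)
print("fraction of experts active:", len(active) / NUM_EXPERTS)
```

Real MoE layers batch tokens per expert and add load-balancing terms during training; this sketch keeps only the routing decision that makes most weights idle for any single token.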

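MiniMax-M2 is an interleaved thinking model. The research team wraps internal reasoning in `<think>...</think>` blocks and instructs users to keep these blocks in the conversation history across turns; removing them harms quality in multi-step tasks and tool chains.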
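MiniMax-M2 wraps its reasoning in `<think>...</think>`, and the model card asks that these segments be passed back unchanged in later turns. A minimal sketch of what that means on the client side, assuming a generic list-of-dicts chat history with `role`/`content` keys (the format most OpenAI-style clients use); the helper names are hypothetical, and the stripping variant is shown only as the pattern to avoid.

```python
import re

# Matches one <think>...</think> block (newlines included) plus trailing whitespace.
THINK_RE = re.compile(r"<think>.*?</think>\s*", re.DOTALL)


def append_assistant_turn(history, raw_output):
    """Keep the model's raw output verbatim, including <think> segments,
    so the next request sends the reasoning back to the model."""
    history.append({"role": "assistant", "content": raw_output})
    return history


def append_assistant_turn_stripped(history, raw_output):
    """Anti-pattern: drop the reasoning before it re-enters the history."""
    history.append({"role": "assistant", "content": THINK_RE.sub("", raw_output)})
    return history


raw = "<think>The user wants a file listing; call the shell tool.</think>\nRunning `ls` now."
history = [{"role": "user", "content": "What files are in the repo?"}]

kept = append_assistant_turn(list(history), raw)              # copy, then append
dropped = append_assistant_turn_stripped(list(history), raw)  # copy, then append
print(kept[-1]["content"])     # still contains the <think> block
print(dropped[-1]["content"])  # Running `ls` now.
```

Storing the raw string is the simplest way to honor the requirement; any UI that hides reasoning should do so at display time, not by editing the stored history.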

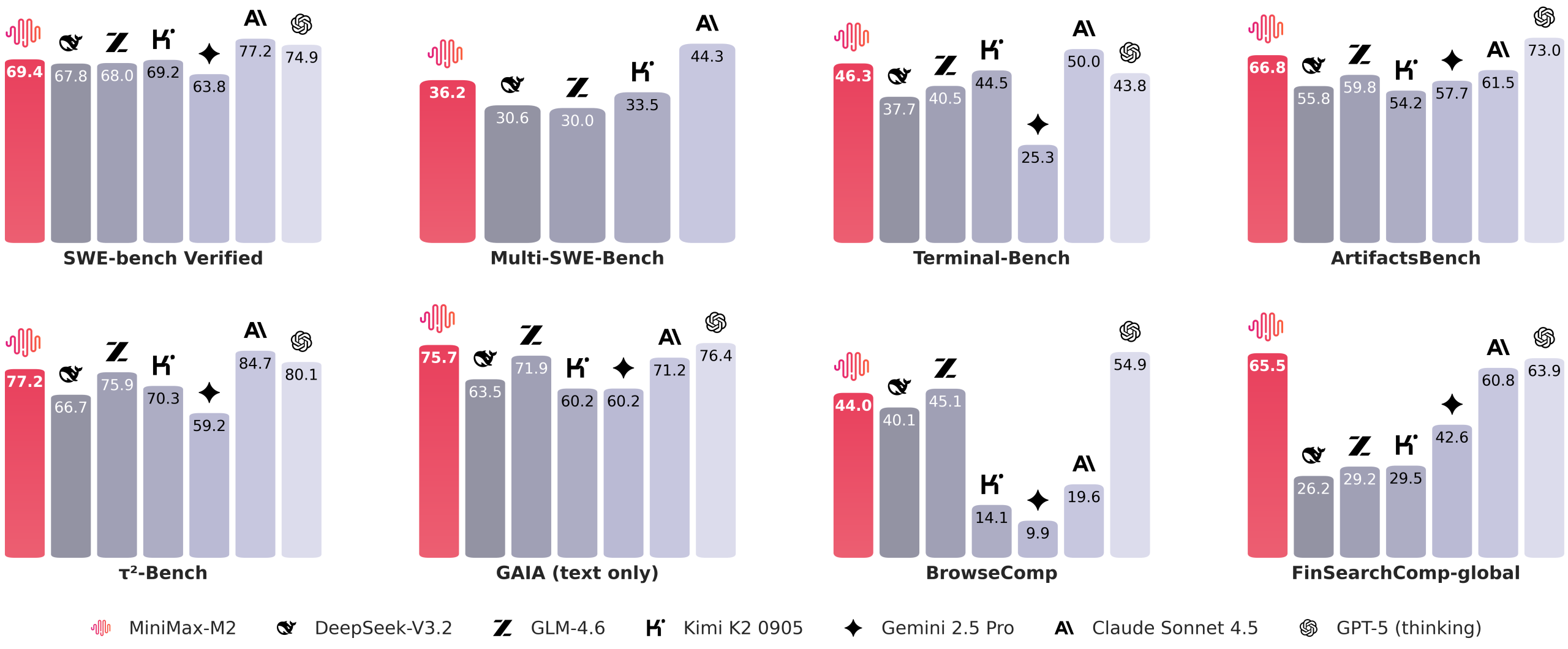

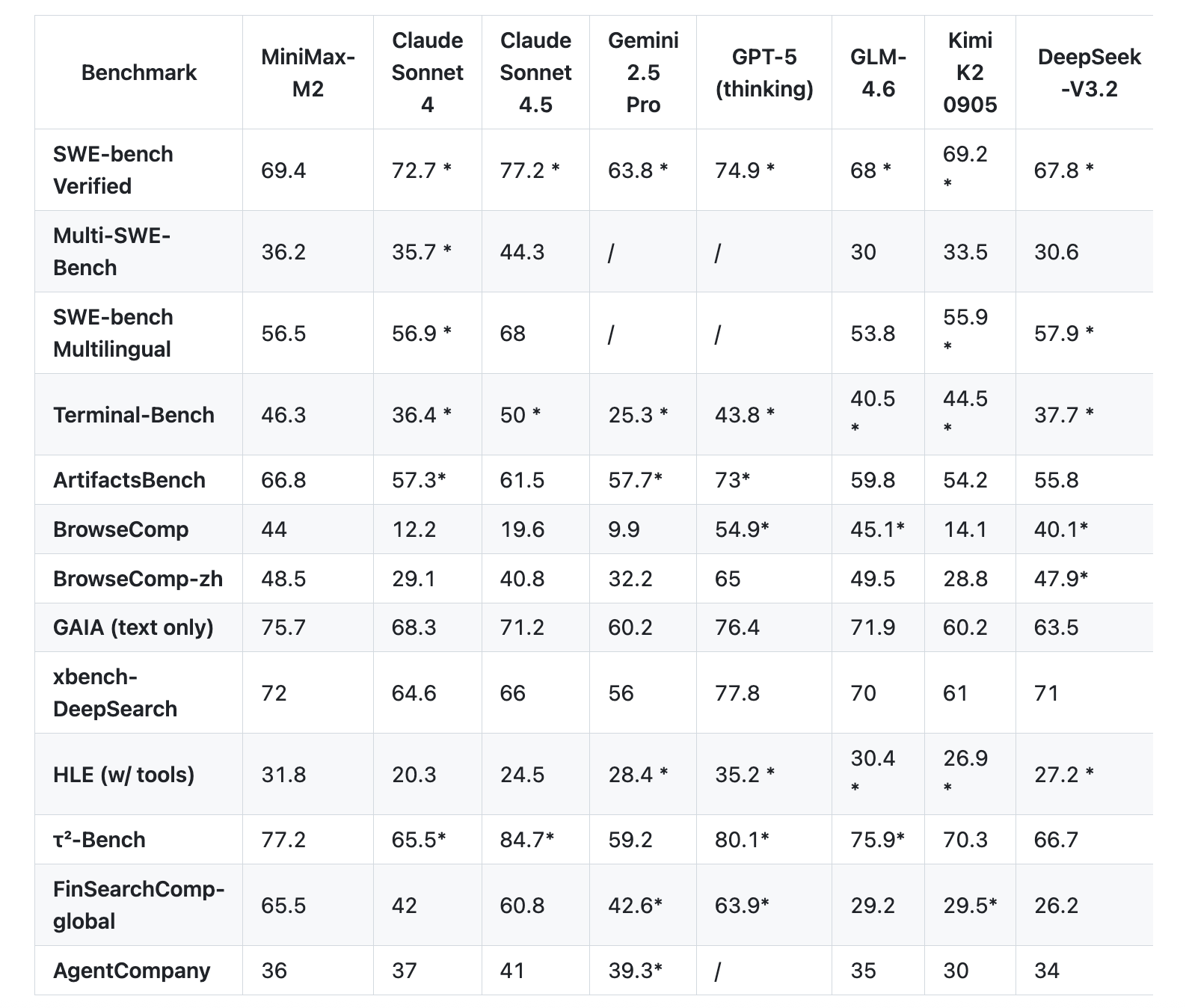

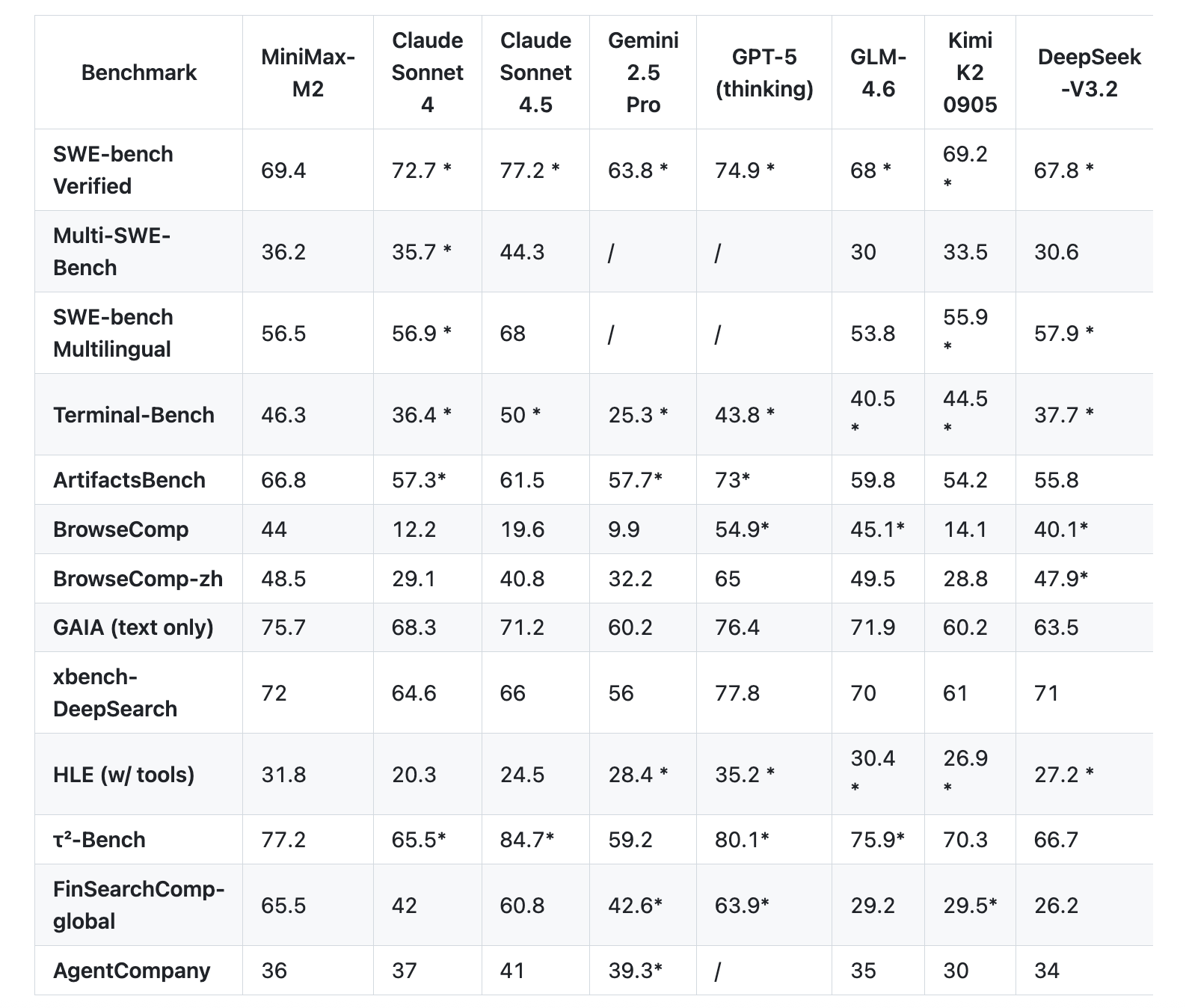

Benchmarks that target coding and agents

The MiniMax team reports a set of agent and code evaluations that are closer to developer workflows than static QA. On Terminal-Bench, the table shows 46.3. On Multi-SWE-Bench, it shows 36.2. On BrowseComp, it shows 44.0. SWE-bench Verified is listed at 69.4, with scaffold details: OpenHands with 128k context and 100 steps.

MiniMax's official announcement emphasizes pricing at 8% of Claude Sonnet, close to 2x speed, and a free access window. The same note provides the specific token prices and the trial deadline.

Comparison: MiniMax M1 vs MiniMax M2

| Aspect | MiniMax M1 | MiniMax M2 |
|---|---|---|
| Total parameters | 456B total | 229B in model card metadata; model card text says 230B total |
| Active parameters per token | 45.9B active | 10B active |
| Core design | Hybrid Mixture of Experts with Lightning Attention | Sparse Mixture of Experts targeting coding and agent workflows |
| Thinking format | Thinking-budget variants (40k and 80k) in RL training; no think-tag protocol required | Interleaved thinking with `<think>...</think>` segments that must be preserved across turns |
| Benchmarks highlighted | AIME, LiveCodeBench, SWE-bench Verified, TAU-bench, long-context MRCR, MMLU-Pro | Terminal-Bench, Multi-SWE-Bench, SWE-bench Verified, BrowseComp, GAIA (text only), Artificial Analysis intelligence suite |
| Inference defaults | Temperature 1.0, top-p 0.95 | Model card shows temperature 1.0, top-p 0.95, top-k 40; launch page shows top-k 20 |
| Serving guidance | vLLM recommended; Transformers path also documented | vLLM and SGLang recommended; tool-calling guide provided |
| Primary focus | Long-context reasoning, efficient scaling of test-time compute, CISPO reinforcement learning | Agent- and code-native workflows across shell, browser, retrieval, and code runners |
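The inference defaults listed in the table (temperature, top-p, top-k) are standard decoding controls. The pure-Python sketch below shows one common way they combine when picking the next token. It is a generic illustration of these sampling techniques, not MiniMax's, vLLM's, or SGLang's implementation, and applying top-k before top-p is an assumed (though widespread) ordering.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=40, top_p=0.95, rng=random):
    """Sample a token index from raw logits: temperature, then top-k, then top-p.

    Defaults follow the M2 model-card values in the table above; the
    top-k-then-top-p order is an assumption of this sketch."""
    # Temperature scaling: divide logits before the softmax (lower = sharper).
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    probs = [math.exp(s - m) for s in scaled]
    total = sum(probs)
    probs = [p / total for p in probs]

    # Top-k: keep only the k most probable tokens.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]

    # Top-p (nucleus): keep the smallest prefix whose cumulative mass reaches top_p.
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # Renormalize over the surviving tokens and draw one of them.
    mass = sum(probs[i] for i in kept)
    weights = [probs[i] / mass for i in kept]
    return rng.choices(kept, weights=weights, k=1)[0]


rng = random.Random(42)
logits = [2.0, 1.0, 0.5, -1.0, -3.0]
counts = [0] * len(logits)
for _ in range(1000):
    counts[sample_next_token(logits, top_k=2, rng=rng)] += 1
print(counts)  # only indices 0 and 1 can ever be drawn when top_k=2
```

The disagreement the table notes between top-k 40 and top-k 20 only matters when more than 20 tokens survive the top-p cut, which is most likely on flat, high-entropy distributions.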

Key Takeaways

- M2 ships as open weights on Hugging Face under the MIT license, with safetensors in F32, BF16, and FP8 (F8_E4M3).

- The model is a compact MoE with 229B total parameters and ~10B active per token, which the model card ties to lower memory use and steadier tail latency in the plan, act, verify loops typical of agents.

- Outputs wrap internal reasoning in `<think>...</think>`, and the model card explicitly instructs keeping these segments in the conversation history, warning that removing them degrades multi-step and tool-use performance.

- Reported results cover Terminal-Bench, (Multi-)SWE-Bench, BrowseComp, and others, with scaffold notes for reproducibility, and day-0 serving is documented for SGLang and vLLM with concrete deployment guides.
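The published precisions and the 229B/10B split invite some quick arithmetic. The sketch below estimates raw weight storage per precision (parameter count times bytes per parameter) and the share of weights active per token, for M2 and, for contrast, M1 (456B total, 45.9B active per the comparison table). It counts weights only and ignores KV cache, activations, quantization scales, and runtime overhead, so treat the results as lower bounds rather than deployment requirements.

```python
# Back-of-the-envelope weight-memory estimate. Weights only: KV cache,
# activations, quantization scales, and framework overhead are ignored.
M2_TOTAL = 229e9    # M2 total parameters (model card metadata)
M2_ACTIVE = 10e9    # M2 approximate active parameters per token
M1_TOTAL = 456e9    # M1 total parameters
M1_ACTIVE = 45.9e9  # M1 active parameters per token

BYTES_PER_PARAM = {"F32": 4, "BF16": 2, "FP8 (F8_E4M3)": 1}


def weight_memory_gb(params, dtype):
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    print(f"M2 {dtype:>14}: ~{weight_memory_gb(M2_TOTAL, dtype):,.0f} GB of weights")

# Share of weights that participate in a single token's forward pass.
print(f"M2 active share per token: {M2_ACTIVE / M2_TOTAL:.1%}")
print(f"M1 active share per token: {M1_ACTIVE / M1_TOTAL:.1%}")
```

Under these assumptions the BF16 checkpoint needs about 458 GB for weights alone, and FP8 halves that to about 229 GB. In a typical deployment every expert stays resident in memory, so the ~10B active figure mainly bounds per-token compute and activation size rather than total weight storage.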

Editorial notes

MiniMax M2 lands with open weights under MIT and a Mixture of Experts design with 229B total parameters and about 10B active per token, targeting agent loops and coding tasks with lower memory use and steadier latency. It ships on Hugging Face in safetensors with FP32, BF16, and FP8 formats, and provides deployment notes plus a chat template. The API documentation covers Anthropic-compatible endpoints and lists pricing with a limited free window for evaluation. vLLM and SGLang recipes are available for local serving and benchmarking. Overall, MiniMax M2 is a very solid open release.

Check out the API Doc, Weights and Repo. Feel free to check out our GitHub Page for tutorials, code, and notebooks. Also, feel free to follow us on Twitter, and don't forget to join our 100k+ ML SubReddit and subscribe to our Newsletter. Wait! Are you on Telegram? Now you can join us on Telegram as well.

Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.

Follow MarkTechPost: Add us as a preferred source on Google.