Alibaba AI Team Just Released Ovis 2.5 Multimodal LLMs: A Major Leap in Open-Source AI with Enhanced Visual Perception and Reasoning Capabilities

Ovis2.5, the latest multimodal large language model (MLLM) from Alibaba's AIDC-AI team, is making waves in the open-source AI community with its 9B and 2B parameter variants. Ovis2.5 sets new benchmarks for performance and efficiency by introducing technical advances aimed at high-resolution visual perception, deep multimodal reasoning, and robust OCR, tackling long-standing limitations that most MLLMs face when processing detailed visual information and complex reasoning tasks.

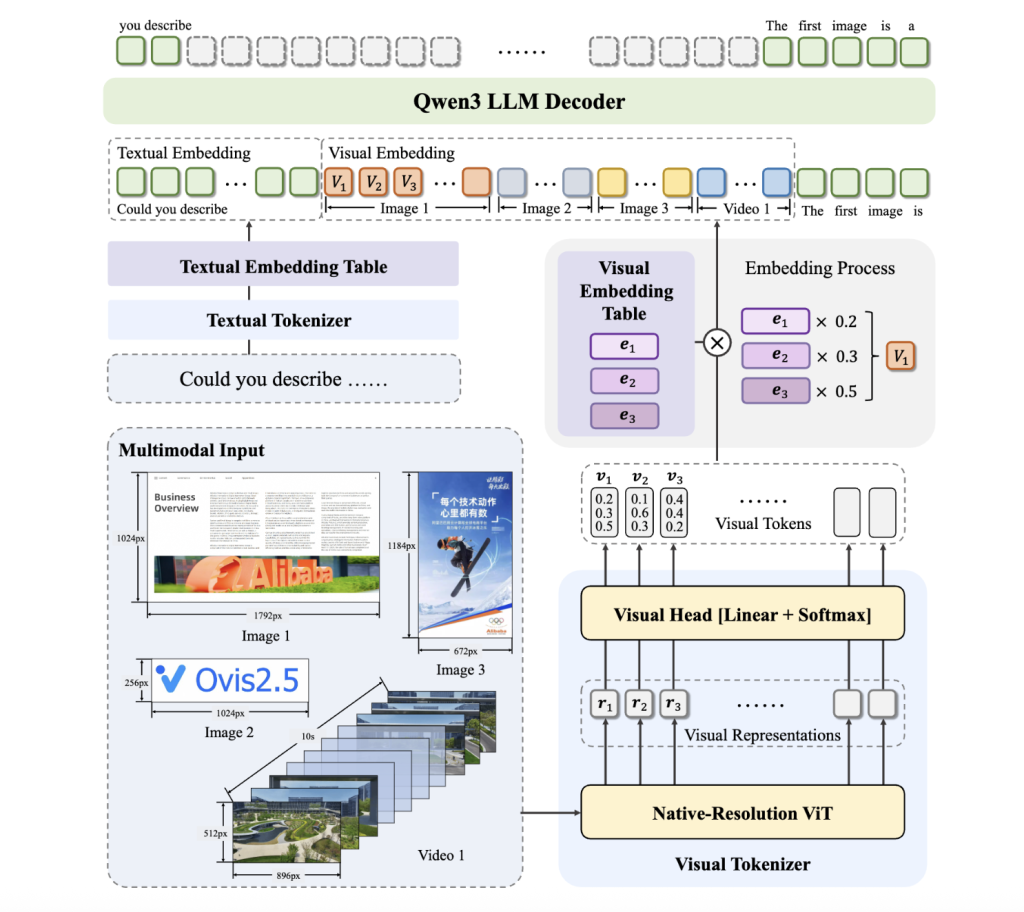

Native-Resolution Vision and Deep Reasoning

A key innovation in Ovis2.5 is its integration of a native-resolution vision transformer (NaViT), which processes images at their original, variable resolutions. Unlike previous models that relied on tiling or forced resizing, which often caused a loss of global context and fine detail, NaViT preserves the full integrity of both intricate charts and natural images. This upgrade allows the model to excel at visually dense tasks, from scientific diagrams to complex infographics and forms.
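To make the difference between fixed-resolution resizing and native-resolution processing concrete, here is a minimal sketch of how many vision patches each approach produces. The patch size (14), the fixed input size (448×448), and the patch budget (4096) are illustrative assumptions, not Ovis2.5's published configuration, and the helper names are hypothetical. The point is only that a native-resolution encoder keeps the image's aspect ratio and lets the patch grid vary per image, whereas a fixed-resolution encoder squashes every input onto the same grid.

```python
import math

PATCH = 14  # assumed ViT patch size (illustrative, not Ovis2.5's actual config)


def fixed_resize_grid(h: int, w: int, side: int = 448, patch: int = PATCH) -> tuple:
    """Fixed-resolution baseline: every image is resized to side x side.

    h and w are ignored on purpose: the grid is identical for every input,
    so the original aspect ratio is discarded.
    """
    n = side // patch
    return n, n


def native_grid(h: int, w: int, patch: int = PATCH, max_patches: int = 4096) -> tuple:
    """Native-resolution sketch (hypothetical helper).

    Keep the aspect ratio, cover each side with whole patches, and only
    downscale (uniformly) when the grid exceeds an assumed patch budget.
    """
    gh, gw = math.ceil(h / patch), math.ceil(w / patch)
    if gh * gw > max_patches:
        # Uniform scale keeps the aspect ratio; flooring guarantees the budget.
        scale = math.sqrt(max_patches / (gh * gw))
        gh = max(1, math.floor(gh * scale))
        gw = max(1, math.floor(gw * scale))
    return gh, gw


if __name__ == "__main__":
    # A tall receipt-like image and a small square thumbnail.
    for h, w in [(1792, 448), (224, 224)]:
        print((h, w), "fixed:", fixed_resize_grid(h, w), "native:", native_grid(h, w))
    # (1792, 448) fixed: (32, 32) native: (128, 32)
    # (224, 224) fixed: (32, 32) native: (16, 16)
```

In this toy setting, the tall 1792×448 image keeps its 4:1 grid under native-resolution processing, while the fixed-size path compresses its height fourfold, which is exactly the kind of distortion that blurs small text in documents and charts.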

To address challenges in reasoning, Ovis2.5 uses a training curriculum that goes beyond standard Chain-of-Thought (CoT) supervision. Its training data includes "thinking-style" samples for self-correction and reflection, culminating in an optional "thinking mode" at inference time. Users can enable this mode (as enthusiastically discussed in the LocalLLaMA Reddit community) to trade response speed for more accurate step-by-step reasoning and deeper model introspection. This is especially beneficial for tasks that require in-depth multimodal analysis, such as scientific question answering or mathematical problem solving.

Performance Benchmarks and State-of-the-Art Results

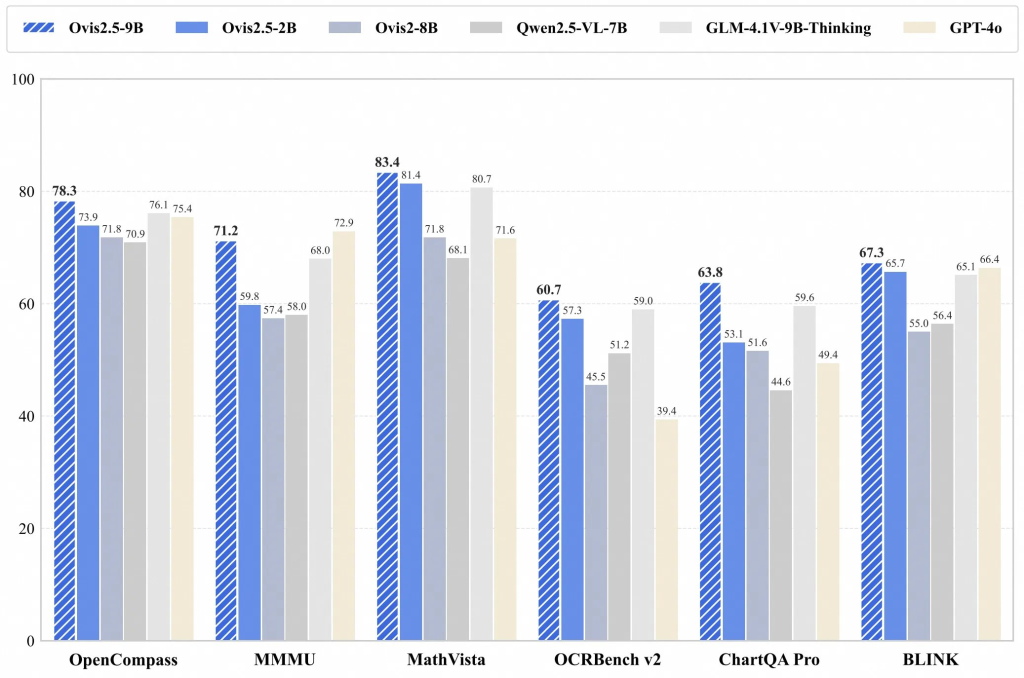

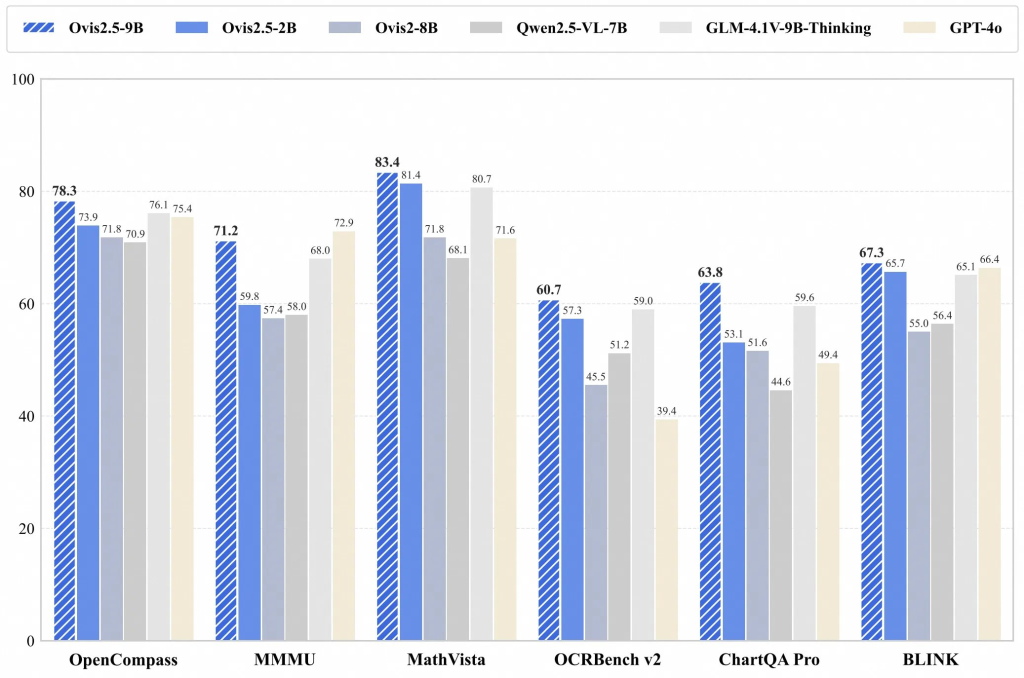

Ovis2.5-9B achieves an average score of 78.3 on the OpenCompass multimodal leaderboard, while Ovis2.5-2B scores 73.9, setting a new standard for lightweight models suited to on-device or resource-constrained inference. Both models deliver strong results across specialized domains, leading in:

- STEM reasoning (MathVista, MMMU, WeMath)

- OCR and chart analysis (OCRBench v2, ChartQA Pro)

- Visual grounding (RefCOCO, RefCOCOg)

- Video and multi-image comprehension (BLINK, VideoMME)

Technical commentary on Reddit and X highlights remarkable progress in OCR and document processing, with users noting the model's strong text recognition.

High-Efficiency Training and Scalable Deployment

Ovis2.5 achieves efficient end-to-end training by using multimodal data packing and advanced hybrid parallelism, delivering up to a 3-4× speedup. The lightweight 2B variant continues the series' "small model, big performance" philosophy, bringing high-quality multimodal understanding to mobile devices.
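Data packing speeds up training because variable-length samples are concatenated into fixed-length sequences instead of each being padded to the maximum length. The article does not describe Ovis2.5's actual packing algorithm, so the sketch below substitutes a plain first-fit-decreasing bin-packing heuristic as an illustrative stand-in; the sample lengths, the 4096-token sequence length, and the function names are all hypothetical.

```python
from typing import List


def pack_first_fit_decreasing(lengths: List[int], max_len: int) -> List[List[int]]:
    """Greedy first-fit-decreasing packing (illustrative stand-in, not Ovis2.5's code).

    Returns a list of bins, each a list of sample indices whose token
    lengths sum to at most max_len.
    """
    # Place the longest samples first; this usually leaves fewer gaps.
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    bins: List[List[int]] = []
    room: List[int] = []  # remaining free tokens in each bin
    for i in order:
        n = lengths[i]
        if n > max_len:
            raise ValueError(f"sample {i} ({n} tokens) exceeds max_len={max_len}")
        for b, free in enumerate(room):
            if n <= free:  # first bin with enough space wins
                bins[b].append(i)
                room[b] -= n
                break
        else:  # no existing bin fits: open a new sequence
            bins.append([i])
            room.append(max_len - n)
    return bins


def padding_fraction(num_sequences: int, total_tokens: int, max_len: int) -> float:
    """Share of token slots wasted on padding."""
    return 1 - total_tokens / (num_sequences * max_len)


if __name__ == "__main__":
    # Hypothetical token counts for interleaved image+text samples.
    lengths = [3000, 1200, 800, 2500, 600, 1500, 400]
    max_len = 4096
    total = sum(lengths)

    bins = pack_first_fit_decreasing(lengths, max_len)
    print("unpacked padding:", f"{padding_fraction(len(lengths), total, max_len):.1%}")
    print("packed padding:  ", f"{padding_fraction(len(bins), total, max_len):.1%}")
    # unpacked padding: 65.1%
    # packed padding:   18.6%
```

Here seven samples shrink from seven padded sequences to three packed ones. A real implementation also needs per-sample attention masks (or equivalent sequence boundaries) so that packed samples do not attend to one another; that detail, and the hybrid parallelism the article mentions, are outside the scope of this sketch.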

The recently released Ovis2.5 models (9B and 2B) mark a notable milestone in multimodal AI, boasting state-of-the-art scores on the OpenCompass leaderboard. Key innovations include the native-resolution vision transformer, which preserves high-fidelity visual detail, and the optional thinking mode for deeper, reflective reasoning, making Ovis2.5 a significant open-source contribution to AI. Its efficient training and lightweight 2B variant make advanced multimodal capabilities accessible to both researchers and resource-constrained applications.

Check out the Technical Paper and the Models on Hugging Face. Feel free to check out our GitHub Page for Tutorials, Codes and Notebooks. Also, feel free to follow us on Twitter and don't forget to join our 100k+ ML SubReddit and Subscribe to our Newsletter.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.