Meta AI Introduces DeepConf: The First AI Method to Achieve 99.9% on AIME 2025 with Open-Source Models Using GPT-OSS-120B

Large language models (LLMs) have reshaped AI reasoning, with parallel thinking and self-consistency methods often cited as pivotal advances. However, these techniques face a fundamental trade-off: sampling multiple reasoning paths boosts accuracy, but at a steep computational cost. A team of researchers from Meta AI and UCSD has introduced Deep Think with Confidence (DeepConf), a new approach that nearly eliminates this trade-off. DeepConf delivers state-of-the-art reasoning performance with dramatic efficiency gains, achieving, for example, 99.9% accuracy on the grueling AIME 2025 math competition using the open-source GPT-OSS-120B while requiring up to 85% fewer generated tokens than conventional parallel thinking.

Why DeepConf?

Parallel thinking (self-consistency with majority voting) is the de facto standard for boosting LLM reasoning: generate multiple candidate solutions, then pick the most common answer. While effective, this method has diminishing returns. Accuracy plateaus or even declines as more paths are sampled, because low-quality reasoning traces can dilute the vote. In addition, generating hundreds or thousands of traces per query is very expensive, both in time and compute.
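
As a point of reference, the majority-voting baseline described above can be sketched in a few lines. This is a minimal illustration, not code from the DeepConf authors; the function name `majority_vote` and the sample answers are hypothetical.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most common final answer among sampled reasoning traces.

    `answers` is a list of final answers, one per trace (hypothetical input
    format chosen for this sketch).
    """
    if not answers:
        raise ValueError("need at least one answer")
    # most_common(1) returns [(answer, count)]; on ties, the answer that was
    # encountered first wins (Python 3.7+ ordering).
    return Counter(answers).most_common(1)[0][0]


# Six sampled traces that disagree on the final answer.
sampled = ["42", "41", "42", "17", "42", "41"]
print(majority_vote(sampled))
```

Every trace counts equally here, which is exactly why a large number of weak traces can outvote a few strong ones.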

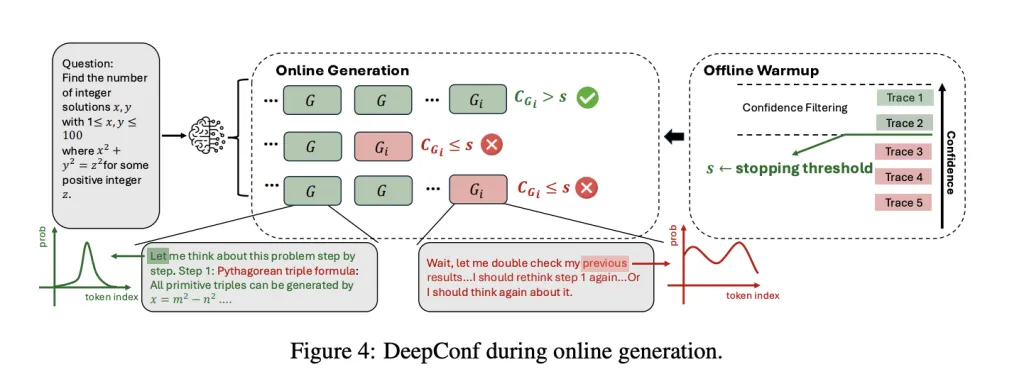

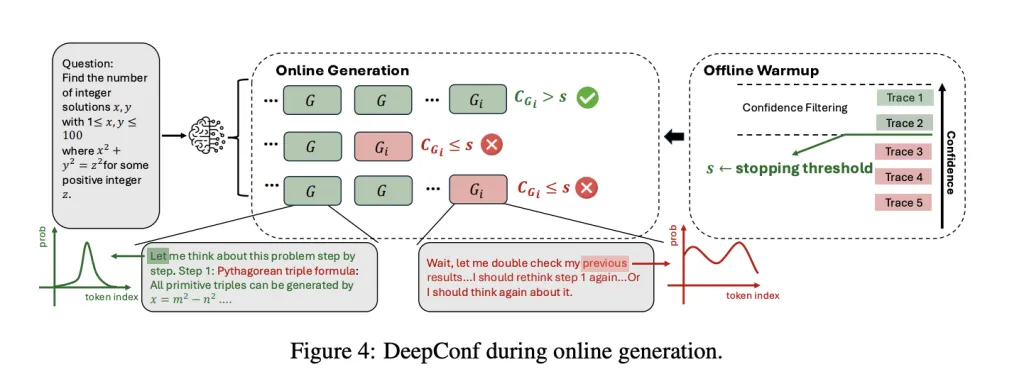

DeepConf tackles these challenges by exploiting the LLM's own confidence signals. Rather than treating all reasoning traces equally, it dynamically filters out low-confidence paths, either during generation (online) or afterward (offline), so that only the most reliable trajectories inform the final answer. This strategy is model-agnostic, requires no training or hyperparameter tuning, and can be plugged into any existing model or serving framework with minimal code changes.

How DeepConf Works: Confidence as a Guide

DeepConf introduces several advances in how confidence is measured and applied:

- Token confidence: For each generated token, compute the negative average log-probability of the top-k candidates. This gives a local measure of certainty.

- Group confidence: Average the token confidence over a sliding window (e.g., 2048 tokens), providing a smoothed, intermediate signal of reasoning quality.

- Tail confidence: Focus on the final segment of the reasoning trace, where the answer usually resides, to catch late breakdowns.

- Lowest group confidence: Identify the least confident segment in the trace, which often signals a reasoning collapse.

- Bottom-percentile confidence: Highlight the worst segments, which are the most predictive of errors.
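
The five signals listed above can be sketched as plain Python functions over per-token top-k log-probabilities. This is a minimal sketch based only on the descriptions in this article: the function names, the handling of traces shorter than one window, and the default `percent=10.0` are assumptions made for illustration, not the authors' implementation.

```python
import math


def token_confidence(top_k_logprobs):
    """Negative mean log-probability of the top-k candidates at one position.

    With this sign convention a peaked distribution (one likely token, the
    rest very unlikely) yields a larger value, i.e. higher confidence.
    """
    return -sum(top_k_logprobs) / len(top_k_logprobs)


def group_confidences(token_confs, window=2048):
    """Mean token confidence for every sliding window of `window` tokens.

    Assumption: a trace shorter than one window is scored as a single group.
    """
    n = len(token_confs)
    if n == 0:
        return []
    if n <= window:
        return [sum(token_confs) / n]
    total = sum(token_confs[:window])
    groups = [total / window]
    for i in range(window, n):
        # Slide the window by one token using a running sum.
        total += token_confs[i] - token_confs[i - window]
        groups.append(total / window)
    return groups


def tail_confidence(token_confs, tail=2048):
    """Mean token confidence over the last `tail` tokens of the trace."""
    tail_slice = token_confs[-tail:]
    return sum(tail_slice) / len(tail_slice)


def lowest_group_confidence(token_confs, window=2048):
    """Score of the least confident sliding window in the trace."""
    return min(group_confidences(token_confs, window))


def bottom_percent_confidence(token_confs, window=2048, percent=10.0):
    """Mean of the lowest `percent` percent of sliding-window scores."""
    groups = sorted(group_confidences(token_confs, window))
    k = max(1, math.ceil(len(groups) * percent / 100))
    return sum(groups[:k]) / k


# Toy trace: confident start and end, with a weak stretch in the middle.
confs = [4.0, 4.0, 1.0, 1.0, 4.0, 4.0]
print(group_confidences(confs, window=2))
print(lowest_group_confidence(confs, window=2))
print(tail_confidence(confs, tail=2))
```

A tiny window is used in the example only so the numbers are easy to follow by hand; the article's suggested window size is 2048 tokens.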

These metrics are then used to weight votes (high-confidence traces count more) or to filter traces (only the most confident traces are kept). In online mode, DeepConf stops generating a trace as soon as its confidence drops below a calibrated threshold, sharply reducing wasted computation.
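
The weighting and filtering step can be illustrated with a short sketch. It assumes each finished trace has already been reduced to an `(answer, confidence)` pair; the function name `confidence_weighted_vote` and the `keep_fraction` parameter are hypothetical names chosen for this example.

```python
from collections import defaultdict


def confidence_weighted_vote(traces, keep_fraction=1.0):
    """Filter traces by confidence, then take a confidence-weighted vote.

    traces: list of (answer, confidence) pairs, one per reasoning trace.
    keep_fraction: share of the most confident traces to keep (0 < f <= 1).
    """
    if not traces:
        raise ValueError("need at least one trace")
    if not 0 < keep_fraction <= 1:
        raise ValueError("keep_fraction must be in (0, 1]")
    # Rank traces from most to least confident and keep the top share.
    ranked = sorted(traces, key=lambda t: t[1], reverse=True)
    keep = max(1, int(len(ranked) * keep_fraction))
    # Each surviving trace votes with a weight equal to its confidence.
    weights = defaultdict(float)
    for answer, conf in ranked[:keep]:
        weights[answer] += conf
    return max(weights, key=weights.get)


# Three low-confidence traces say "A"; two high-confidence traces say "B".
traces = [("A", 1.0), ("A", 1.1), ("A", 1.2), ("B", 3.0), ("B", 2.9)]
print(confidence_weighted_vote(traces))                     # weighted vote
print(confidence_weighted_vote(traces, keep_fraction=0.5))  # filter, then vote
```

In this toy case a plain majority vote would pick "A" (three votes to two), while both the weighted vote and the filtered vote pick "B", because the two "B" traces carry far more confidence.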

Key Results: Performance and Efficiency

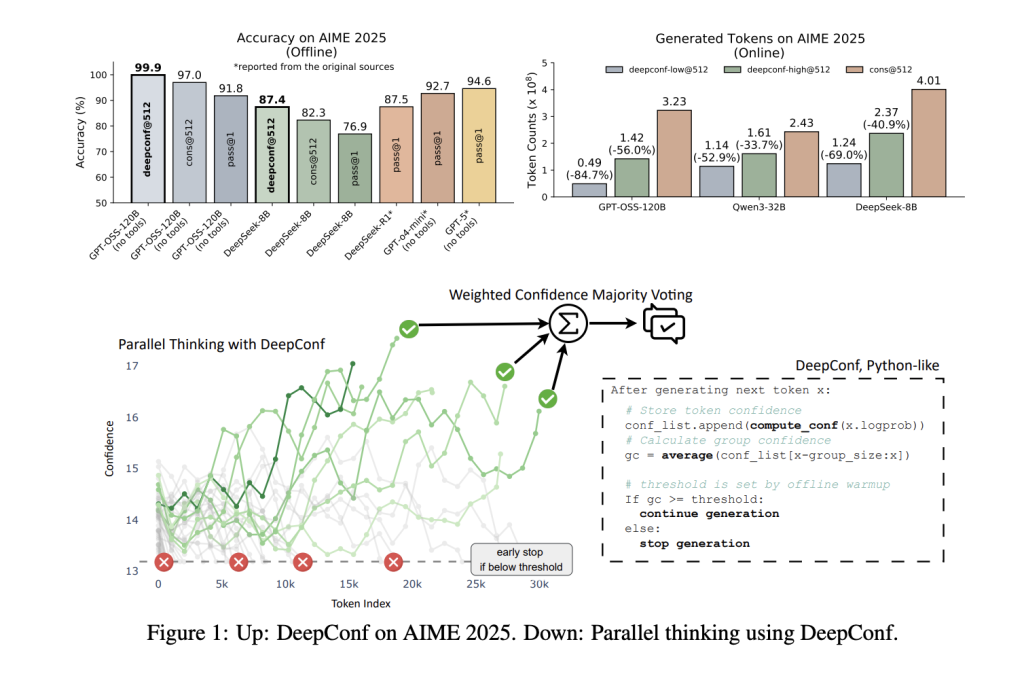

DeepConf was evaluated across multiple reasoning benchmarks (AIME 2024/2025, HMMT 2025, BRUMO25, GPQA-Diamond) and models (DeepSeek-8B, Qwen3-32B, GPT-OSS). The results are striking:

| Model | Dataset | Pass@1 Acc | Cons@512 Acc | DeepConf@512 Acc | Tokens Saved |
|---|---|---|---|---|---|
| GPT-OSS-120B | AIME 2025 | 91.8% | 97.0% | 99.9% | -84.7% |
| DeepSeek-8B | AIME 2024 | 83.0% | 86.7% | 93.3% | -77.9% |
| Qwen3-32B | AIME 2024 | 80.6% | 85.3% | 90.8% | -56.0% |
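
To make the table easier to read, the gains in percentage points can be computed directly from its rows. The snippet below only restates the numbers in the table; the variable and function names are illustrative.

```python
# (model, dataset, pass@1, cons@512, deepconf@512), copied from the table.
rows = [
    ("GPT-OSS-120B", "AIME 2025", 91.8, 97.0, 99.9),
    ("DeepSeek-8B", "AIME 2024", 83.0, 86.7, 93.3),
    ("Qwen3-32B", "AIME 2024", 80.6, 85.3, 90.8),
]


def gains(row):
    """Return (gain over pass@1, gain over majority voting) in points."""
    _, _, pass1, cons, deepconf = row
    return round(deepconf - pass1, 1), round(deepconf - cons, 1)


for row in rows:
    over_pass1, over_cons = gains(row)
    print(f"{row[0]} on {row[1]}: +{over_pass1} vs Pass@1, +{over_cons} vs Cons@512")
```

For the three rows shown, DeepConf gains 2.9, 6.6, and 5.5 points over majority voting at 512 traces, and 8.1, 10.3, and 10.2 points over single-sample accuracy.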

Performance boost: Across models and datasets, DeepConf improves accuracy by up to ~10 percentage points over standard majority voting, often saturating the benchmark's upper limit.

Ultra-efficient: By stopping low-confidence traces early, DeepConf reduces the total number of generated tokens by 43-85%, with no loss (and often a gain) in final accuracy.

Plug and play: DeepConf works out of the box with any model: no fine-tuning, no hyperparameter search, and no changes to the underlying architecture. You can drop it into your existing serving stack (e.g., vLLM) with ~50 lines of code.

Easy to deploy: The method is implemented as a lightweight extension to existing inference engines, requiring only access to token-level logprobs and a few lines of logic for confidence calculation and early stopping.

Simple Integration: Minimal Code, Maximum Impact

DeepConf's implementation is remarkably simple. For vLLM, the changes are minimal:

- Extend the logprobs processor to track sliding-window confidence.

- Add an early-stop check before emitting each output.

- Pass confidence thresholds via the API, with no model retraining.

This allows any OpenAI-compatible endpoint to support DeepConf with a single extra setting, making it quick to adopt in production environments.
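
The early-stop logic described in these steps can be sketched independently of any serving framework. The example below does not use vLLM's actual API; it assumes a hypothetical token stream that yields `(token_text, top_k_logprobs)` pairs and takes the stopping threshold as a given parameter rather than showing how it is calibrated.

```python
from collections import deque


def generate_with_early_stop(token_stream, threshold, window=2048):
    """Consume a token stream and stop once windowed confidence is too low.

    token_stream: iterable of (token_text, top_k_logprobs) pairs
                  (hypothetical format for this sketch).
    threshold:    minimum acceptable sliding-window confidence (assumed to
                  be supplied by the caller).
    Returns (emitted_tokens, stopped_early).
    """
    recent = deque()      # token confidences inside the current window
    running_sum = 0.0
    emitted = []
    for text, top_k_logprobs in token_stream:
        # Token confidence: negative mean log-probability of top-k candidates.
        conf = -sum(top_k_logprobs) / len(top_k_logprobs)
        recent.append(conf)
        running_sum += conf
        if len(recent) > window:
            running_sum -= recent.popleft()
        # Check before emitting; only judge once a full window is available.
        if len(recent) == window and running_sum / window < threshold:
            return emitted, True
        emitted.append(text)
    return emitted, False


# Toy stream: two confident tokens followed by a low-confidence stretch.
stream = [
    ("The", [-0.5, -9.5]),
    ("answer", [-0.5, -9.5]),
    ("might", [-1.0, -1.0]),
    ("be", [-1.0, -1.0]),
    ("7", [-1.0, -1.0]),
]
tokens, stopped = generate_with_early_stop(stream, threshold=2.0, window=2)
print(tokens, stopped)
```

Keeping a running sum over a fixed-size deque makes each check constant-time, so the overhead per generated token stays negligible next to the model's forward pass.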

Conclusion

Meta AI's DeepConf represents a leap forward in LLM reasoning, delivering both higher accuracy and markedly better efficiency. By dynamically leveraging the model's internal confidence, DeepConf achieves what was previously out of reach for open-source models: near-perfect results on elite reasoning tasks at a fraction of the computational cost.

FAQs

FAQ 1: How does DeepConf improve accuracy and efficiency compared to majority voting?

DeepConf's confidence-aware filtering and voting prioritizes traces with higher model certainty, boosting accuracy by up to 10 percentage points across reasoning benchmarks compared to majority voting alone. At the same time, its early termination of low-confidence traces cuts token usage by up to 85%, offering both better performance and large efficiency gains in practical deployments.

FAQ 2: Can DeepConf be used with any language model or serving framework?

Yes. DeepConf is fully model-agnostic and can be integrated into any serving stack, including open-source and commercial models, without modification or retraining. Deployment requires only minimal changes (~50 lines of code for vLLM), using token logprobs to compute confidence and handle early stopping.

FAQ 3: Does DeepConf require retraining, special data, or complex tuning?

No. DeepConf operates entirely at inference time, requiring no additional model training, fine-tuning, or hyperparameter search. It uses only built-in logprob outputs and works immediately with the standard API settings of leading frameworks; it is scalable, robust, and deployable on real workloads without interruption.

Check out the paper and project page.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable. The platform attracts more than two million monthly views, illustrating its popularity among its audience.