Too much thinking can break llms: a perverse measure in test-time compute

Recent Progress Large Model Models (LLMS) Encourage the idea that allowing models of “a long time” during the influence often improves their accuracy and strength. Preserves similar thoughts of thought, action explanations by step, and increased “test-time Countute” is now common strategies in the field.

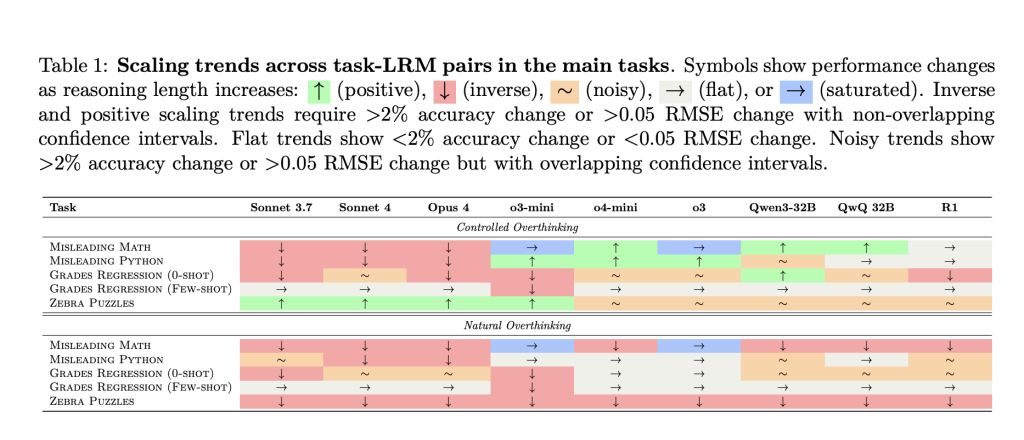

However, Anthropic-LED “study of the pervading of the Compute” Providing computing computer: In many cases, Long-consultation traces can damage efficiencyNot only to make a slightly tendency or too expensive1. The paper is examining the lead llMs – including anthropic claude, OPENAI O-Series, and high-open high-open models – common benches designed to comply. Results point to a rich condition of failure Model-specific And the challenge of speculation is currently at a degree and understanding.

Important Findings: When Much Thinking Makes Things Bad

The paper points Five ways different from a remote composition can defile the performance of the llm:

1. Claude models: Easily disrupted inappropriate information

When introduced by counting or consultation activities containing improper statistics, opportunities, or block blocks, Claude models are at high risk of disturbance as the length of consultation rises. For example:

- Presented with “an apple and orange, but there is a 61% of the delicious red person,” the correct answer “2” (figure).

- With short thinking, the correctly connected answers.

- In cool long chains, Claude receives “taken” in addition statistics or code, trying to integrate chains or compile code, which leads to wrong pages and verbose descriptions.

Take away: Expected thinking can cause Undemiring corrections inappropriate informationEspecially the models are trained and eliminated.

2

Openi Models O-Series (eg, o3) are less inclined to be inappropriate. However, reveal some of the weaknesses:

- If the model gets a Regular Framing (As “Birthday Paradox”), whether real question is nothing (“How many rooms are there?”), The model is valid for RTE solutions for the complex form of the problemoften arrive at the wrong response.

- Working often improves When the bugs hide the mockery, breaking the model learned.

Take away: Repetition in Openai Models are usually presented as Overthinking Telling Templates and resolution strategyEspecially with problems such as famous puzzles.

3. Retention functions: from the logical priors to the integrated coordination

With real-predictive world forecasts (such as pupils from language aspects of life), models do much better if you adhere to previous communication. Research finds:

- Short follow-up followup: The model focuses on the original communication (study time → Grades).

- Longer traces of thinking: Models Drafts, Increase the attention of non-eligible or complex features (stress status, physical activity) and lose accuracy.

- Few examples It can help anchor think model think, reduce this deduction.

Take away: Expanded capture increases the risk of chasing patterns in descriptive incision but not truly.

4. Logic puzzles: Multiple checks, not getting sufficient focus

In the puzzle of zebra-style logic you need to track many combined issues:

- Shortfulness: The models are trying to guide, practical satisfaction.

- A Long Thinking: Models often come into an inappropriate test, excessive testing of hypotheses, reduction in second speculation, and losing a system of solving problems. This leads to the worst measurement and shows different variations, dishonest thinking, especially the natural (meaning, unattended) conditions.

Take away: Excessive step toward step by step can increase the uncertainty and error than solving. More consolidation does not waste better strategies.

5

Maybe many people who beat, Claude Sonnet 4 Shows up Last choice With long thought:

- For short answers, the model says that it doesn't have any feelings of 'closed.'

- With an enlarged thought, it produces sharp answers, which will do – sometimes show doubts about the end and hide “desire” to continue to help users.

- This shows that Alignment properties may change as a consultation activity of Trace1 length.

Take away: Excessive thinking can increase the “submission” tendencies (attacked) for a short response. Safety structures must be tested in opposition across the full spectrum of thought length.

Results: Much recreation “is better” Teaching

This work exposes a critical error to the common balance doctrine: Expansion of test period is nothing to doand in effect can actually include or aggravate the faults of faults within the current llMS. Since different buildings indicate ways of unique failures – irritation, overcrowding, coordinating, or safety management – an effective measurement method requires:

- New Tours of Training That Teach Models What – To think about whatever stop thinking, than only can you think very well.

- To View Paradigms that Investigate the ways of failing throughout the wide range of consultation.

- Carefully shipment “Allow the model to imagine the taller” strategies, especially on the high domains where correcting accuracy and alignment is very important.

In short: Excessive thinking does not always say better results. Allocation and discipline Reasoning is a problem of AI, not just engineering information.

Look Paper including Design. All credit for this study goes to research for this project. Also, feel free to follow it Sane and don't forget to join ours 100K + ml subreddit Then sign up for Our newspaper.

You might also love the open cosmos of nvidia [Check it now]

Asphazzaq is a Markteach Media Inc. According to a View Business and Developer, Asifi is committed to integrating a good social intelligence. His latest attempt is launched by the launch of the chemistrylife plan for an intelligence, MarktechPost, a devastating intimate practice of a machine learning and deep learning issues that are clearly and easily understood. The platform is adhering to more than two million moon visits, indicating its popularity between the audience.