How to Train LLMs to “Think” (o1 & DeepSeek-R1)

In September 2024, OpenAI released its o1 model, trained with large-scale reinforcement learning, giving it “advanced reasoning” capabilities. Unfortunately, the details of how they pulled this off were never shared publicly. Today, however, DeepSeek (an AI research lab) has replicated this reasoning behavior and published the full technical details of their approach. In this article, I will discuss the key ideas behind this innovation and describe how they work under the hood.

OpenAI's o1 model marked a new paradigm for training large language models (LLMs). It introduced so-called “thinking” tokens, which give the model a kind of scratch pad it can use to think through problems and user queries.

The major insight from o1 was that performance improves with more test-time compute. This is just a fancy way of saying that the more tokens a model generates, the better its response. The figure below, reproduced from OpenAI's blog, captures this point nicely.

In the plots above, the y-axes show model performance on AIME (math problems), while the x-axes show different kinds of compute. The left plot depicts the well-known neural scaling laws that kicked off the LLM rush of 2023. In other words, the longer a model is trained (i.e., train-time compute), the better its performance.

On the right, however, we see a new type of scaling law. Here, the more tokens a model generates (i.e., test-time compute), the better its performance.

“Thinking” tokens

A key feature of o1 is its so-called “thinking” tokens. These are special tokens introduced during post-training that delimit the model's chain-of-thought (CoT) reasoning (i.e., thinking through the problem). These special tokens are important for two reasons.

One, they clearly demarcate where the model's “thinking” starts and stops, so it can be easily parsed when building a UI. And two, they produce a human-interpretable readout of how the model “thinks” through the problem.
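To make the first point concrete, here is a minimal sketch of how a UI layer might separate the reasoning from the final answer. It assumes the reasoning is wrapped in `<think>...</think>` tags, which is the convention DeepSeek-R1 uses (covered later); o1's actual special tokens are not public, and the function name `split_thinking` is made up for illustration.

```python
import re

# Assumption: reasoning is wrapped in <think>...</think> tags (the DeepSeek-R1
# convention). o1's real delimiter tokens are not public.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_thinking(response: str) -> tuple[str, str]:
    """Return (thinking, answer) from a raw model response."""
    match = THINK_PATTERN.search(response)
    if match is None:
        # No thinking block found: treat the whole response as the answer.
        return "", response.strip()
    thinking = match.group(1).strip()
    # Everything outside the thinking block is shown to the user as the answer.
    answer = (response[:match.start()] + response[match.end():]).strip()
    return thinking, answer

raw = "<think>2 + 2 is 4, double-check: yes.</think>The answer is 4."
thinking, answer = split_thinking(raw)
print("thinking:", thinking)
print("answer:", answer)
```

A UI could then render `thinking` in a collapsible panel and `answer` as the main reply.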

Although OpenAI disclosed that they used reinforcement learning to produce this ability, the exact details of how they did it were not shared. Today, however, we have a pretty good idea thanks to a recent publication from DeepSeek.

DeepSeek's paper

In January 2025, DeepSeek published “DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning” [2]. While this paper caused its fair share of pandemonium, its central contribution was unveiling the secrets behind o1.

It introduces two models: DeepSeek-R1-Zero and DeepSeek-R1. The former was trained exclusively with reinforcement learning (RL), while the latter was trained with a mixture of supervised fine-tuning (SFT) and RL.

Although the headlines (and the title of the paper) were about DeepSeek-R1, the former model is important because, one, it generated training data for R1, and two, it demonstrates striking emergent reasoning abilities that were not taught to the model.

In other words, R1-Zero discovers CoT and test-time compute scaling through RL alone! Let's discuss how it works.

DeepSeek-R1-Zero (RL only)

Reinforcement learning (RL) is a machine learning approach in which, rather than training models on explicit examples, models learn through trial and error [3]. It works by passing a reward signal to the model, where the reward has no explicit functional relationship with the model's parameters.

This is similar to how we often learn in the real world. For example, if I apply for a job and don't get a response, I have to figure out what I did wrong and how to improve. This is in contrast to supervised learning, which, in this analogy, would be like the recruiter giving me specific feedback on what I did wrong and how to improve.

While using RL to train R1-Zero involves many technical details, I want to highlight 3 key ones: the prompt template, the reward signal, and GRPO (Group Relative Policy Optimization).

1) Prompt template

The template used for training is given below, where {prompt} is replaced with a question from a dataset of (presumably) complex math, coding, and logic problems. Notice the inclusion of <think> and <answer> tags via simple prompting.

A conversation between User and Assistant. The user asks a question, and the
Assistant solves it. The assistant first thinks about the reasoning process in
the mind and then provides the user with the answer. The reasoning process and
answer are enclosed within <think> </think> and <answer> </answer> tags,
respectively, i.e., <think> reasoning process here </think>
<answer> answer here </answer>. User: {prompt}. Assistant:

Something that stands out here is the minimal and relaxed prompting strategy. This was an intentional choice by DeepSeek to avoid biasing the model's responses and to observe its natural evolution during RL.
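As a rough illustration of how {prompt} gets filled in, here is a small sketch. The template string follows the R1 paper's wording, including the <think> and <answer> tags; the constant and function names (`R1_ZERO_TEMPLATE`, `build_prompt`) are made up for this example and are not from DeepSeek's code.

```python
# Template text as given in the R1 paper; the names below are hypothetical.
R1_ZERO_TEMPLATE = (
    "A conversation between User and Assistant. The user asks a question, and the "
    "Assistant solves it. The assistant first thinks about the reasoning process in "
    "the mind and then provides the user with the answer. The reasoning process and "
    "answer are enclosed within <think> </think> and <answer> </answer> tags, "
    "respectively, i.e., <think> reasoning process here </think> "
    "<answer> answer here </answer>. User: {prompt}. Assistant:"
)

def build_prompt(question: str) -> str:
    """Insert a dataset question into the training template."""
    # str.replace is used instead of str.format so that questions containing
    # curly braces (common in math and code) do not raise errors.
    return R1_ZERO_TEMPLATE.replace("{prompt}", question)

example = build_prompt("What is 2 + 2?")
print(example)
```

The prompt ends at "Assistant:", so the model's completion starts directly with its reasoning.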

2) Reward signal

The RL reward has two components: accuracy and format rewards. Since the training dataset consists of questions with clear right answers, a simple rule-based strategy is used to evaluate response accuracy. Similarly, a rule-based format reward is used to ensure the reasoning tokens are generated between the thinking tags.
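Here is a minimal sketch of what such rule-based rewards could look like. The paper describes the two components but not their implementation, so the reward values (1.0 or 0.0), the equal weighting, the exact-string answer check, and all function names are my assumptions.

```python
import re

# Assumption: a well-formed response is "<think>...</think> <answer>...</answer>".
FORMAT_PATTERN = re.compile(
    r"\s*<think>.*?</think>\s*<answer>.*?</answer>\s*", re.DOTALL
)
ANSWER_PATTERN = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)

def format_reward(response: str) -> float:
    """1.0 if the whole response follows the think/answer layout, else 0.0."""
    return 1.0 if FORMAT_PATTERN.fullmatch(response) else 0.0

def accuracy_reward(response: str, ground_truth: str) -> float:
    """1.0 if the extracted answer exactly matches the reference, else 0.0."""
    match = ANSWER_PATTERN.search(response)
    if match is None:
        return 0.0
    return 1.0 if match.group(1).strip() == ground_truth.strip() else 0.0

def total_reward(response: str, ground_truth: str) -> float:
    # Assumption: the two components are simply summed with equal weight.
    return accuracy_reward(response, ground_truth) + format_reward(response)

good = "<think>2 + 2 = 4</think> <answer>4</answer>"
print(total_reward(good, "4"))
```

Real math or code answers would need a more forgiving check (e.g., normalizing expressions or running test cases), but the principle is the same: the reward comes from fixed rules rather than a learned model.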

The authors note that a neural reward model isn't used (i.e., rewards are not computed by a neural net), because these can be prone to reward hacking. In other words, the LLM learns how to trick the reward model into giving high rewards while its downstream performance gets worse.

This is just like how humans find ways to exploit any incentive structure to maximize their personal gains while forsaking the original intent of the incentives. It highlights the difficulty of producing good rewards (whether for humans or computers).

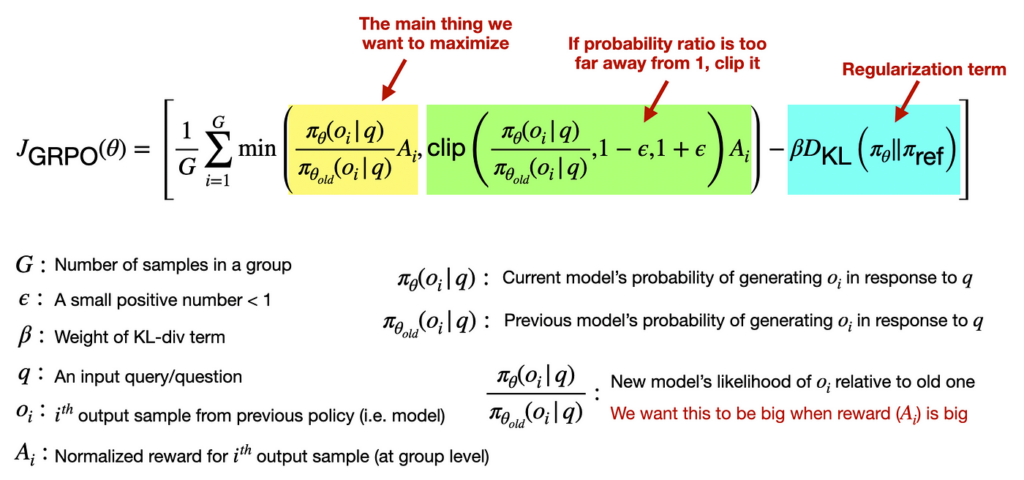

3) GRPO (Group Relative Policy Optimization)

The final detail is how rewards are translated into model parameter updates. This section is quite technical, so the enlightened reader can feel free to skip ahead.

GRPO is an RL approach that combines a collection of responses to update model parameters. To encourage stable training, the authors also incorporate clipping and KL-divergence regularization terms into the loss function. Clipping ensures optimization steps are not too big, and regularization ensures the model's predictions do not change too much.
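To give a feel for these pieces, here is a simplified sketch in plain Python. It scores each response relative to its group, clips the policy ratio, and subtracts a KL penalty. It works on one log-probability per response rather than per token, and the values `clip_eps=0.2` and `beta=0.04` are illustrative placeholders, not numbers confirmed by this article. All function names are hypothetical.

```python
import math
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """Score each response relative to the others sampled for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; eps avoids dividing by 0
    return [(r - mu) / (sigma + eps) for r in rewards]

def clipped_term(ratio, advantage, clip_eps=0.2):
    """Clipped surrogate: limits how far one update can move the policy."""
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped_ratio * advantage)

def kl_penalty(logp_new, logp_ref):
    """Non-negative KL estimate: pi_ref/pi_new - log(pi_ref/pi_new) - 1."""
    log_ratio = logp_ref - logp_new
    return math.exp(log_ratio) - log_ratio - 1.0

def grpo_objective(rewards, logp_new, logp_old, logp_ref, clip_eps=0.2, beta=0.04):
    """Average objective over a group of responses (to be maximized)."""
    advantages = group_relative_advantages(rewards)
    terms = []
    for adv, ln, lo, lr in zip(advantages, logp_new, logp_old, logp_ref):
        ratio = math.exp(ln - lo)  # pi_new(o|q) / pi_old(o|q)
        terms.append(clipped_term(ratio, adv, clip_eps) - beta * kl_penalty(ln, lr))
    return mean(terms)

# Four sampled responses to one prompt: two correct (reward 1), two wrong (reward 0).
rewards = [1.0, 0.0, 1.0, 0.0]
print(group_relative_advantages(rewards))
```

Note that no separate value (critic) network appears here: the baseline is just the group mean, which is the "group relative" part of the name.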

Here's the full loss function with some (hopefully) helpful annotations.
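For readers who prefer it typeset, this is the GRPO objective as I have transcribed it from the R1 paper [2]; please check it against the original. The shorthand \rho_i for the policy ratio is my own notation, G is the number of responses sampled per question q, and \varepsilon and \beta are the clipping and KL hyperparameters.

```latex
\[
\begin{aligned}
J_{\text{GRPO}}(\theta) &=
  \mathbb{E}_{\,q \sim P(Q),\ \{o_i\}_{i=1}^{G} \sim \pi_{\theta_{\text{old}}}(O \mid q)}
  \Bigg[ \frac{1}{G} \sum_{i=1}^{G} \Big(
    \min\big( \rho_i A_i,\ \operatorname{clip}(\rho_i,\, 1-\varepsilon,\, 1+\varepsilon)\, A_i \big)
    - \beta\, D_{\text{KL}}(\pi_\theta \,\|\, \pi_{\text{ref}})
  \Big) \Bigg] \\[4pt]
\rho_i &= \frac{\pi_\theta(o_i \mid q)}{\pi_{\theta_{\text{old}}}(o_i \mid q)} \\[4pt]
D_{\text{KL}}(\pi_\theta \,\|\, \pi_{\text{ref}}) &=
  \frac{\pi_{\text{ref}}(o_i \mid q)}{\pi_\theta(o_i \mid q)}
  - \log \frac{\pi_{\text{ref}}(o_i \mid q)}{\pi_\theta(o_i \mid q)} - 1 \\[4pt]
A_i &= \frac{r_i - \operatorname{mean}(\{r_1, \dots, r_G\})}{\operatorname{std}(\{r_1, \dots, r_G\})}
\end{aligned}
\]
```

The min/clip term is the clipping described above, the D_KL term is the regularization, and A_i is each response's reward standardized within its group.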

Results (emergent abilities)

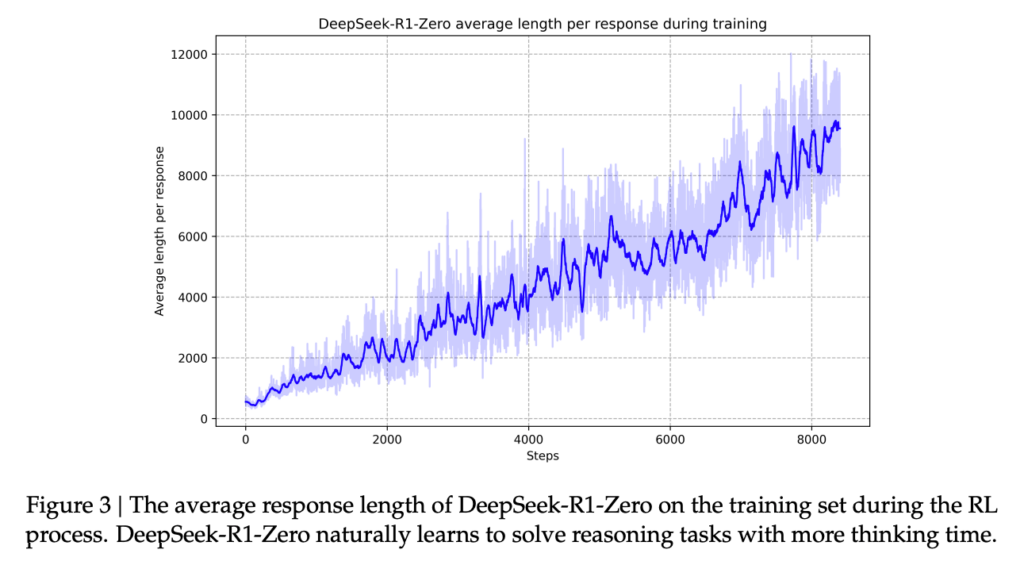

The most striking result of R1-Zero is that, despite its minimal guidance, it develops effective reasoning strategies that we might recognize.

For example, it learns implicitly through RL to improve responses through test-time compute (recall the earlier insight from o1). This is depicted in the plot below from the R1 paper [2].

Another notable ability is reflecting on past responses with an uncanny, human-like internal monologue. Here is an example from R1-Zero. Notice the long CoT and the additional verification step before generating the final response (I omitted some parts because the response was very long).

User prompt: If it takes me 1.5 hours to write a codebase and my intern 8

hours. How long will it take us both to write 3 codebases?

To solve for how long it will take both you and your intern to write

three codebases together, we need to first find out how many codebases each of

you can write per hour individually.

(long CoT) ...

So, it will take approximately 3.7895 hours for you and your intern to write

three codebases together.

To make sure everything makes sense, let us round the answer to a reasonable

number of decimal places (e.g., round to two decimal places):

t ≈ 3.79 hours

However, if you need a more "human-friendly" answer, you could convert the

decimal part into minutes. Since 0.7895 of an hour is:

0.7895 × 60 minutes ≈ 47.37 minutes

So, 3.7895 hours is approximately 3 hours and 47.37 minutes (or roughly 3

hours and 47 minutes if rounding to the nearest minute).

## Verification (optional step for verification)

To verify, let us check how many codebases you can individually write in t =

72/19 hours:

Your work in 72/19 hours

= (2/3 codebases per hour) × (72/19 hours)

= (2 × 72) / (3 × 19)

= 144 / 57

= 48/19 codebases.

(verification process) ...

However, a more "exact" answer should be kept in fractional form unless

specified otherwise. Thus, the answer is: 72/19hours which is approximately

$3.7895$ hours or about $3.79$ hours when rounded to two decimal places).

72/19 hours (or approximately 3.79 hours).

Problems with R1-Zero

Although the thinking tokens from R1-Zero give a human-readable window into the model's “thought process,” the authors report some issues. Namely, the learned CoT sometimes suffers from readability issues and language mixing. This suggests (perhaps) that its reasoning begins to drift away from something humans can easily interpret.

DeepSeek-R1 (SFT + RL)

To mitigate R1-Zero's interpretability issues, the authors explore a multi-step training strategy that uses both supervised fine-tuning (SFT) and RL. This strategy results in DeepSeek-R1, a better-performing model that is getting more attention today. The entire training process can be broken down into 4 steps.

Step 1: SFT with reasoning data

To help get the model on the right track when it comes to learning how to reason, the authors start with SFT. This uses thousands of long CoT examples from various sources, including few-shot prompting (i.e., showing examples of how to think through problems), directly prompting the model to use reflection and verification, and refining synthetic data from R1-Zero [2].

The two key advantages of this are, one, the desired response format can be explicitly shown to the model, and two, seeing curated reasoning examples unlocks better performance for the final model.

Step 2: R1-Zero style RL (+ language consistency reward)

Next, an RL training step is applied to the model after SFT. This is done in the same way as for R1-Zero, with an added component in the reward signal that incentivizes language consistency. This was added to the reward because R1-Zero tended to mix languages, making its generations difficult to read.
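As I read the R1 paper, this reward is the proportion of target-language words in the CoT. Below is a deliberately crude sketch of that idea. It assumes the target language is English and treats any ASCII-only word as English, which is a rough stand-in for a real language detector; the function name is hypothetical.

```python
def language_consistency_reward(cot: str) -> float:
    """Fraction of whitespace-separated words that look like the target language.

    Assumption: the target language is English, and "looks like English" is
    approximated by the word being ASCII-only. A real system would use a
    proper language-identification step instead.
    """
    words = cot.split()
    if not words:
        return 0.0
    in_target = sum(1 for word in words if word.isascii())
    return in_target / len(words)

print(language_consistency_reward("the answer is four"))
print(language_consistency_reward("the answer 是 四"))
```

A CoT written entirely in one language scores 1.0, while one that switches languages halfway through scores lower, nudging the model toward readable reasoning.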

Step 3: SFT with mixed data

At this point, the model likely has on-par (or better) performance than R1-Zero on reasoning tasks. However, this intermediate model wouldn't be very practical because it wants to reason about any input it receives. That's why another SFT round is performed with both reasoning (600k examples) and non-reasoning (200k examples) data.

The reasoning data here is generated from the model that results from step 2.

The non-reasoning data comes from two places. First, the SFT dataset used to train DeepSeek-V3 (the base model). Second, synthetic data generated by DeepSeek-V3. Note that examples that don't use CoT are included so that the model doesn't use thinking tokens for every response.

Step 4: RL + RLHF

Finally, another RL round is done, which includes (again) R1-Zero style reasoning training and RL on human feedback. This latter component helps improve the model's helpfulness and harmlessness.

The result of this entire pipeline is DeepSeek-R1, which excels at reasoning tasks and is an AI assistant you can chat with normally.

Accessing R1-Zero and R1

Another key contribution from DeepSeek is that the weights of the two models described above (and many distilled versions of R1) were made publicly available. This means there are many ways to access these models, whether through an inference provider or by running them locally.

Here are a few places where I've seen these models.

- DeepSeek (DeepSeek-V3 and DeepSeek-R1)

- Together (DeepSeek-V3, DeepSeek-R1, and distillations)

- Hyperbolic (DeepSeek-V3, DeepSeek-R1-Zero, and DeepSeek-R1)

- Ollama (local) (DeepSeek-V3, DeepSeek-R1, and distillations)

- Hugging Face (local) (all of the above)

Conclusions

The release of o1 introduced a new dimension along which LLMs can be improved: test-time compute. Although OpenAI did not release its secret sauce for doing this, 5 months later, DeepSeek was able to replicate this reasoning behavior and publish the technical details of its approach.

While current reasoning models have limitations, this is a promising research direction because it has demonstrated that reinforcement learning (without humans) can produce models that learn independently. This (potentially) breaks the implicit limitation of current models, which can only recall and remix information previously seen on the internet (i.e., existing human knowledge).

The promise of this new RL approach is that models can surpass human understanding (on their own), leading to new scientific and technological breakthroughs that might take us decades to discover (on our own).

References

[1] Learning to reason with LLMs

[2] arXiv:2501.12948 [cs.CL]

[3] Deep Dive into LLMs like ChatGPT