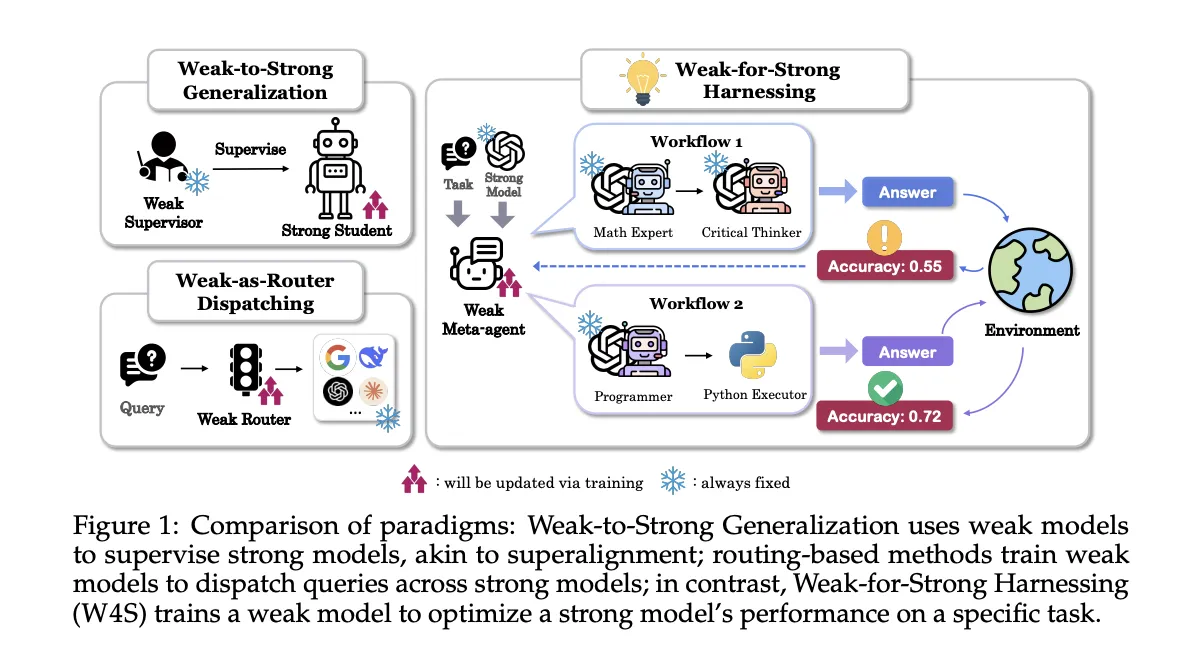

Weak-for-Strong (W4S): A Novel Reinforcement Learning Algorithm That Trains a Weak Meta-Agent to Design Agentic Workflows with Stronger LLMs

Researchers from Stanford, EPFL, and UNC introduce Weak-for-Strong Harnessing (W4S), a new reinforcement learning (RL) framework that trains a small meta-agent to design and refine code workflows that call a stronger executor model. The meta-agent does not fine-tune the strong model; it learns to orchestrate it. W4S formalizes workflow design as a multi-turn Markov decision process (MDP) and trains the meta-agent with a method called Reinforcement Learning for Agentic Workflow Optimization (RLAO). The research team reports consistent gains across 11 benchmarks with a 7B meta-agent trained for approximately one GPU hour.

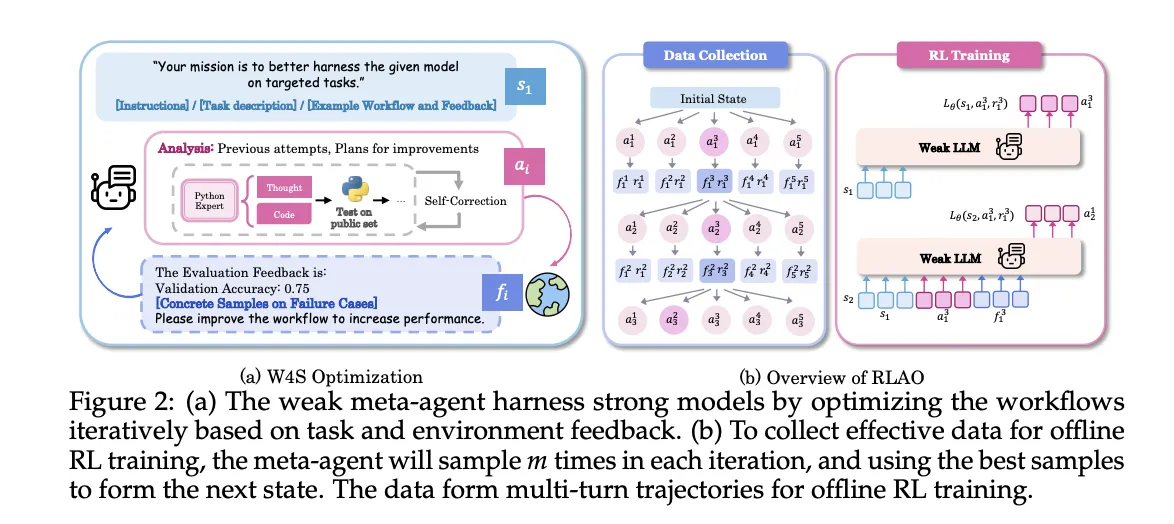

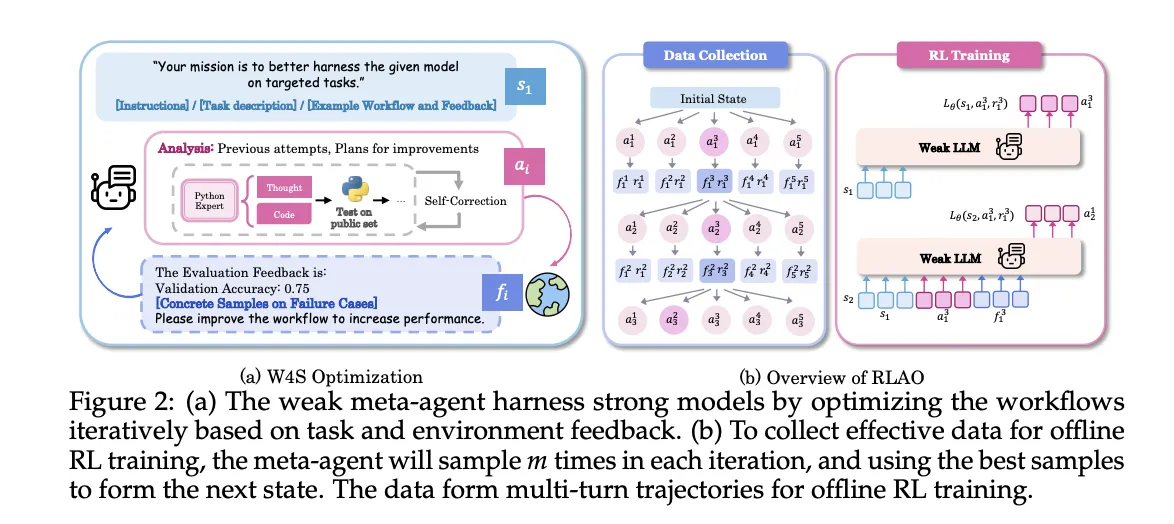

W4S operates in turns. The state contains the task instructions, the current workflow program, and the feedback from previous executions. An action has 2 components: an analysis of what to change, and new Python workflow code that implements those changes. The environment executes the code on validation items, returns the accuracy and the failure cases, and provides a new state for the next turn. The meta-agent can run a quick self-check on one sample. If errors appear, it attempts up to 3 repairs, and if errors persist the action is skipped. This loop provides a learning signal without touching the weights of the strong executor.
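The state, the action, and the self-check described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `State`, `Action`, `self_check`, `run_on_sample`, and `repair` are hypothetical, and the workflow is assumed to be a string of Python source. Only the limit of 3 repair attempts comes from the article.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

MAX_REPAIRS = 3  # the article states that up to 3 repairs are attempted


@dataclass
class State:
    """What the meta-agent sees at the start of a turn (hypothetical layout)."""
    instructions: str
    workflow_code: str
    feedback: List[str] = field(default_factory=list)


@dataclass
class Action:
    """The two components of an action: an analysis and new workflow code."""
    analysis: str
    workflow_code: str


def self_check(
    action: Action,
    run_on_sample: Callable[[str], object],
    repair: Callable[[Action, str], Action],
) -> Optional[Action]:
    """Run the workflow on one sample; on error, try up to MAX_REPAIRS repairs.

    Returns a runnable Action, or None if the action should be skipped.
    `run_on_sample` and `repair` are assumed callbacks: the first executes the
    code on a single example, the second asks the meta-agent for a fix.
    """
    candidate = action
    for attempt in range(MAX_REPAIRS + 1):
        try:
            run_on_sample(candidate.workflow_code)
            return candidate
        except Exception as err:
            if attempt == MAX_REPAIRS:
                return None  # errors persisted after 3 repairs: skip the action
            candidate = repair(candidate, str(err))
    return None
```

Passing the executor and the repair step in as callbacks keeps the sketch independent of any particular LLM client; in a real system both would call models.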

W4S runs as an iterative loop

- Workflow generation: The weak meta-agent writes a new workflow that leverages the strong model, expressed as executable Python code.

- Execution and feedback: The strong model executes the workflow on validation samples, then returns accuracy and error cases as feedback.

- Refinement: The meta-agent uses the feedback to update the analysis and the workflow, then repeats the loop.
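The three steps above can be sketched as a short loop. This is an illustrative outline under stated assumptions, not the paper's code: `propose` is a hypothetical stand-in for the meta-agent, a workflow is simplified to a Python callable rather than generated source, and exact string match stands in for whatever scoring a benchmark really uses.

```python
from typing import Callable, List, Optional, Tuple

Example = Tuple[str, str]           # (input, expected output)
Failure = Tuple[str, str, str]      # (input, expected, actual)
Feedback = Tuple[float, List[Failure]]
Workflow = Callable[[str], str]


def evaluate(workflow: Workflow, validation: List[Example]) -> Feedback:
    """Execute a workflow on validation samples; return accuracy and error cases."""
    failures: List[Failure] = []
    for question, expected in validation:
        try:
            got = workflow(question)
        except Exception as err:    # a crashing workflow counts as a failure
            got = f"error: {err}"
        if got != expected:
            failures.append((question, expected, got))
    accuracy = 1.0 - len(failures) / len(validation)
    return accuracy, failures


def optimize(
    propose: Callable[[Optional[Feedback]], Workflow],
    validation: List[Example],
    turns: int = 10,
) -> Tuple[Optional[Workflow], float]:
    """Generate, execute, refine; keep the best workflow seen so far."""
    best_workflow, best_acc = None, -1.0
    feedback: Optional[Feedback] = None
    for _ in range(turns):
        workflow = propose(feedback)                # workflow generation
        feedback = evaluate(workflow, validation)   # execution and feedback
        if feedback[0] > best_acc:                  # refinement uses feedback next turn
            best_workflow, best_acc = workflow, feedback[0]
    return best_workflow, best_acc
```

For example, a `propose` that returns an identity function on the first turn and switches to `str.upper` once it sees failures would reach full accuracy on a validation set of lowercase-to-uppercase pairs by the second turn.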

Reinforcement Learning for Agentic Workflow Optimization (RLAO)

RLAO is an offline reinforcement learning procedure over multi-turn trajectories. In each iteration, the system samples multiple candidate actions, keeps the best-performing action to advance the state, and stores the others for training. The policy is optimized with reward-weighted regression. The reward is sparse and compares the current validation accuracy to the history: a higher weight is given when the new result beats the previous best, and a smaller weight is given when it only beats the last iteration. This objective favors steady progress while controlling exploration cost.
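A small sketch may help make the reward and the weighting concrete. Everything numeric here is an assumption for illustration: the article only says that beating the previous best earns a higher weight than beating the last iteration, so the values `1.0` and `0.5`, the temperature, and the normalization of the weights are placeholders, and the function names are hypothetical.

```python
import math
from typing import List, Tuple


def turn_reward(acc: float, best_so_far: float, last: float,
                high: float = 1.0, low: float = 0.5) -> float:
    """History-based reward: `high` if acc beats the best so far,
    `low` if it only beats the last iteration, otherwise 0.
    The values of `high` and `low` are illustrative assumptions."""
    if acc > best_so_far:
        return high
    if acc > last:
        return low
    return 0.0


def split_candidates(candidates: List[Tuple[str, float]]):
    """Keep the best-scoring candidate to advance the state; return the rest
    so they can be stored as training data. Items are (action, accuracy)."""
    best = max(candidates, key=lambda c: c[1])
    rest = [c for c in candidates if c is not best]
    return best, rest


def rwr_weights(rewards: List[float], temperature: float = 1.0) -> List[float]:
    """Reward-weighted regression weights proportional to exp(r / temperature).
    Subtracting the max keeps exp() numerically stable; normalizing to sum
    to 1 is a design choice of this sketch."""
    m = max(rewards)
    unnorm = [math.exp((r - m) / temperature) for r in rewards]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def rwr_loss(log_probs: List[float], rewards: List[float],
             temperature: float = 1.0) -> float:
    """Weighted negative log-likelihood over stored actions: actions with
    higher reward contribute more to the policy update."""
    weights = rwr_weights(rewards, temperature)
    return -sum(w * lp for w, lp in zip(weights, log_probs))
```

In practice `log_probs` would be the policy's log-likelihoods of the stored actions, computed by a deep learning framework so that the loss can be differentiated; plain floats are used here only to keep the sketch self-contained.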

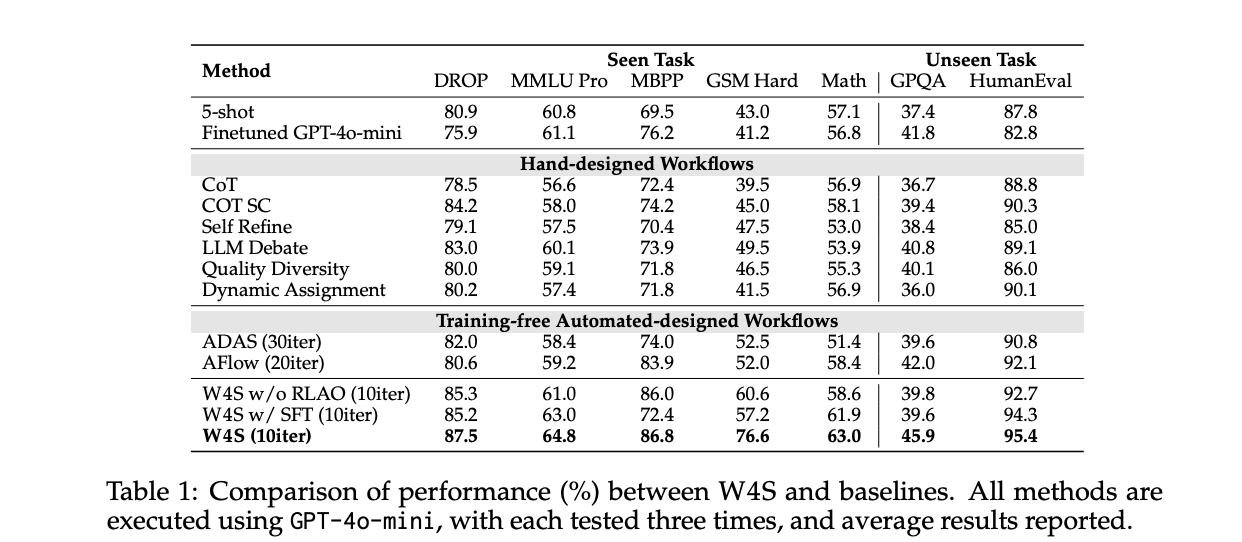

Understanding the results

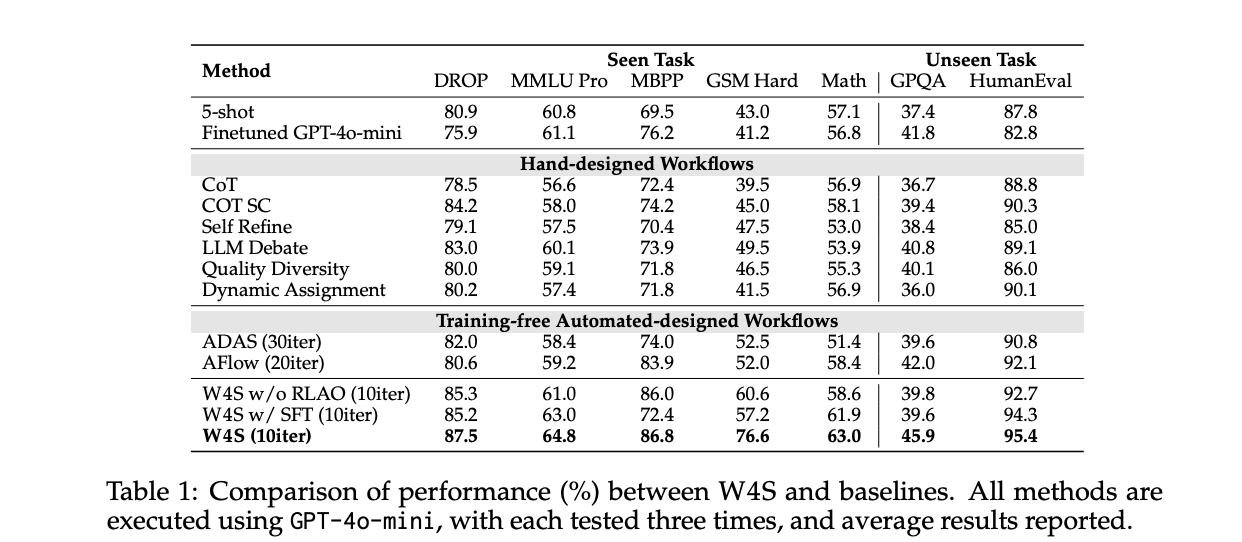

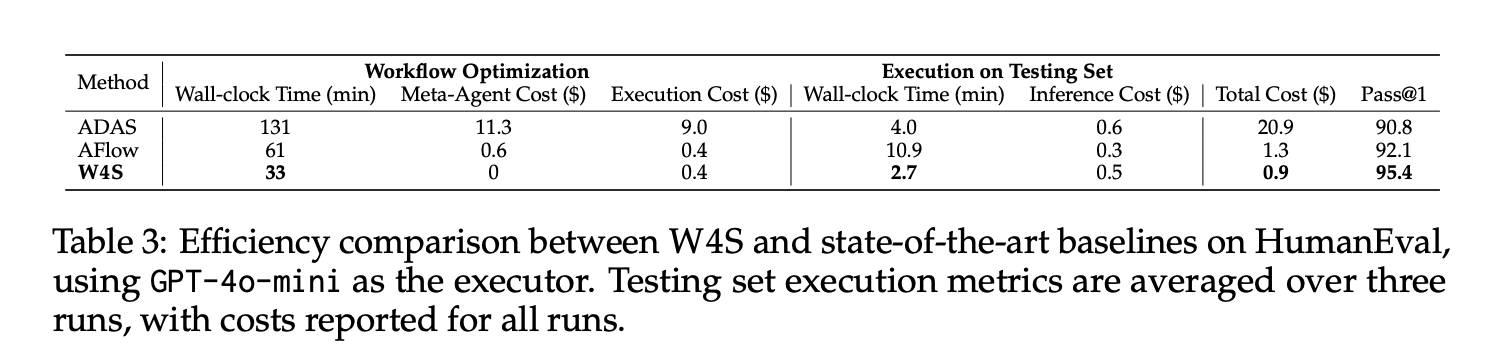

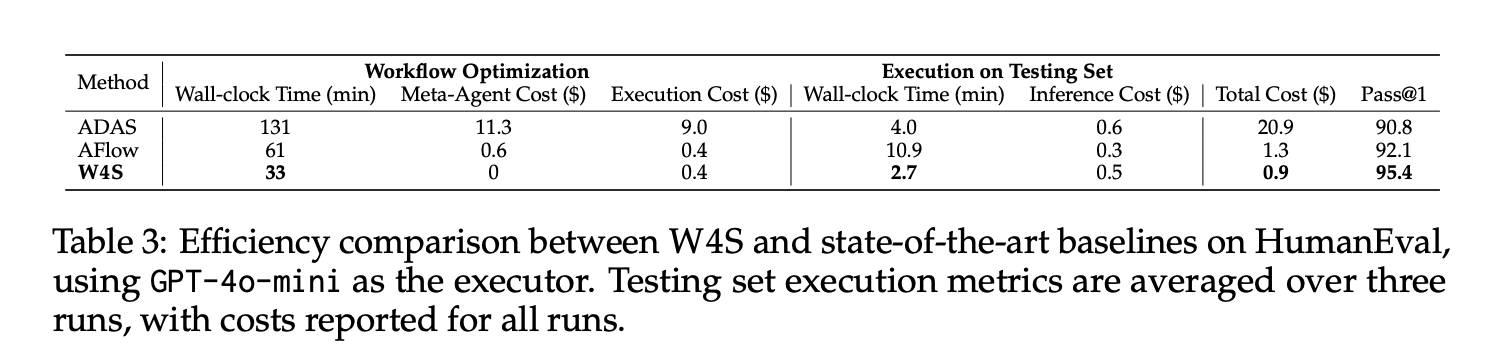

On HumanEval with GPT-4o-mini as the executor, W4S reaches a Pass@1 of 95.4. It takes about 33 minutes of workflow optimization, and the reported costs are about 0.5 dollars for one part of the run and about 0.9 dollars in total. Under the same executor, AFlow and ADAS trail this number. The reported average gains against the strongest automated baseline range from 2.9% to 24.6% across the 11 benchmarks.

In math transfer, the meta-agent is trained on GSM Plus and MGSM with GPT-3.5-Turbo as the executor, and then evaluated on GSM8K, GSM Hard, and SVAMP. The paper reports 86.5 on GSM8K and 61.8 on GSM Hard, both above the automated baselines. This indicates that the learned orchestration transfers to related tasks without retraining the executor.

Across seen tasks with GPT-4o-mini as the executor, W4S surpasses training-free automated methods that do not learn a planner. The study also runs ablations in which the meta-agent is trained with supervised fine-tuning rather than RLAO; the RLAO agent yields better accuracy under the same budget. The research team also includes a GRPO baseline on a 7B weak model for GSM Hard, and W4S outperforms it under limited compute.

Iteration budgets matter. The research team sets W4S to about 10 optimization turns in the main tables, while AFlow runs for about 20 turns and ADAS runs for about 30 turns. Despite the fewer turns, W4S achieves higher accuracy. This suggests that learned planning over code, combined with validation feedback, makes the search more sample-efficient.

Key takeaways

- W4S trains a 7B weak meta-agent with RLAO to write Python workflows that harness stronger executors, modeled as a multi-turn MDP.

- On HumanEval with GPT-4o-mini as the executor, W4S reaches a Pass@1 of 95.4, with about 33 minutes of optimization and a cost of about 0.9 dollars, beating automated baselines under the same executor.

- Across the 11 benchmarks, W4S improves over the strongest baseline by 2.9% to 24.6%, while avoiding fine-tuning of the strong model.

- The method runs an iterative loop: it generates a workflow, executes it on validation data, and then refines it using the feedback.

- ADAS and AFlow also program or search over code workflows; W4S differs by training a planner with offline reinforcement learning.

W4S targets orchestration, not model weights, and trains a 7B meta-agent to program workflows that call stronger executors. W4S formalizes workflow design as a multi-turn MDP and optimizes the planner with RLAO using offline trajectories and reward-weighted regression. Reported results show a Pass@1 of 95.4 on HumanEval with GPT-4o-mini, average gains of 2.9% to 24.6% across 11 benchmarks, and about one GPU hour of training for the meta-agent. The framing compares cleanly with ADAS and AFlow, which search over agent designs or code graphs, while W4S fixes the executor and learns the planner.

Check out the technical paper and GitHub repo.

Michal Sutter is a data scientist with a Master of Science in Data Science from the University of Padova. With a strong foundation in statistical analysis, machine learning, and data engineering, Michal excels at turning complex data into actionable insights.
