Thinking Machines Launches Tinker: A Low-Level Training API that Abstracts Distributed LLM Fine-Tuning without Hiding the Knobs

Thinking Machines has released Tinker, a Python API that lets researchers and developers write training loops locally while the platform executes them on managed distributed GPU clusters. The pitch is narrow and technical: keep full control of data, objectives, and optimization steps; hand off scheduling, fault tolerance, and multi-node orchestration. The service is in private beta with a waitlist and starts free, moving to usage-based pricing "in the coming weeks."

Okay, but what is it?

Tinker exposes low-level primitives rather than high-level "train()" wrappers. The core calls are forward_backward, optim_step, save_state, and sample, giving users direct control over gradient computation, optimizer stepping, checkpointing, and evaluation/inference inside their own loops. A typical workflow: instantiate a LoRA training client against a base model (e.g., Llama-3.2-1B), iterate forward_backward/optim_step, persist state with save_state, then obtain a sampling client to evaluate or export weights.
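To make the primitive-based style concrete, here is a minimal local sketch. `ToyTrainingClient` is hypothetical: it only borrows the four primitive names from the article and fits a tiny linear model with plain SGD. It is not the Tinker SDK, whose actual signatures, loss functions, and return types are not described here. The sketch also assumes that `forward_backward` accumulates gradients until `optim_step` consumes them.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTrainingClient:
    """Hypothetical local stand-in that borrows Tinker's primitive names.

    NOT the Tinker SDK: it fits y = w * x + b with mean-squared error so the
    user-owned loop structure can run anywhere.
    """
    w: float = 0.0
    b: float = 0.0
    grad_w: float = field(default=0.0, init=False)
    grad_b: float = field(default=0.0, init=False)

    def forward_backward(self, batch):
        """Compute MSE loss on (x, y) pairs and accumulate gradients (assumed semantics)."""
        n = len(batch)
        loss = 0.0
        for x, y in batch:
            err = (self.w * x + self.b) - y
            loss += err * err / n
            self.grad_w += 2.0 * err * x / n
            self.grad_b += 2.0 * err / n
        return loss

    def optim_step(self, learning_rate):
        """Apply one SGD update, then clear the accumulated gradients."""
        self.w -= learning_rate * self.grad_w
        self.b -= learning_rate * self.grad_b
        self.grad_w = 0.0
        self.grad_b = 0.0

    def save_state(self):
        """Return a checkpoint of the current parameters."""
        return {"w": self.w, "b": self.b}

    def sample(self, x):
        """Run inference with the current parameters."""
        return self.w * x + self.b


# The loop, data, and objective all stay in user code.
data = [(x, 3.0 * x + 1.0) for x in range(-3, 4)]  # targets from y = 3x + 1
client = ToyTrainingClient()
losses = []
for _ in range(500):
    losses.append(client.forward_backward(data))
    client.optim_step(learning_rate=0.05)
checkpoint = client.save_state()
print(round(client.sample(2.0), 2))  # prints 7.0
```

The design point is that nothing hides the step boundaries: you could swap the loss, add reward shaping, or evaluate between steps without fighting a `train()` wrapper. Per the announcement, in Tinker the equivalent calls execute on the managed cluster rather than locally.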

Important features

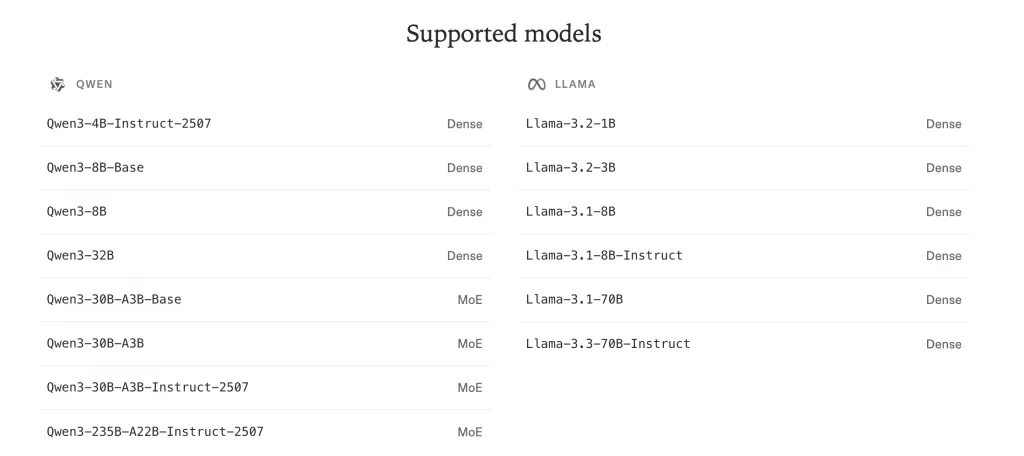

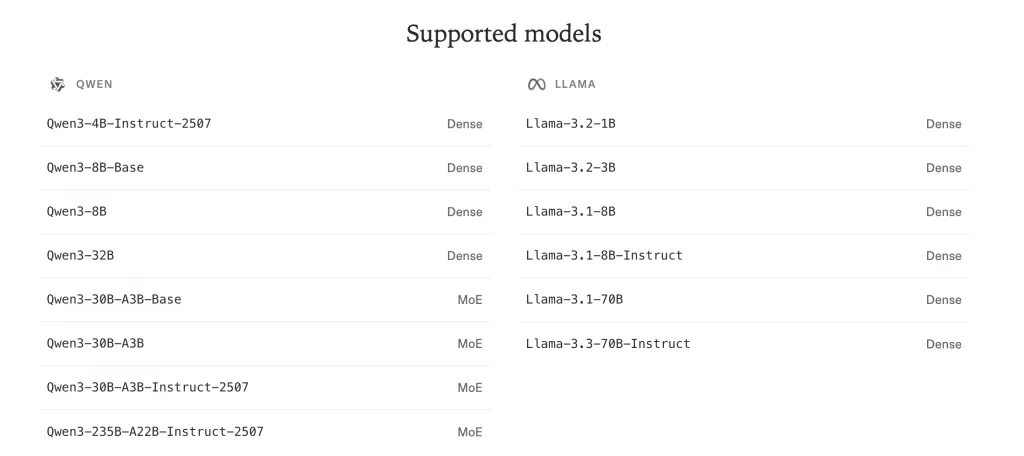

- Open-weights model coverage. Fine-tune families such as Llama and Qwen, including large mixture-of-experts variants (e.g., Qwen3-235B-A22B).

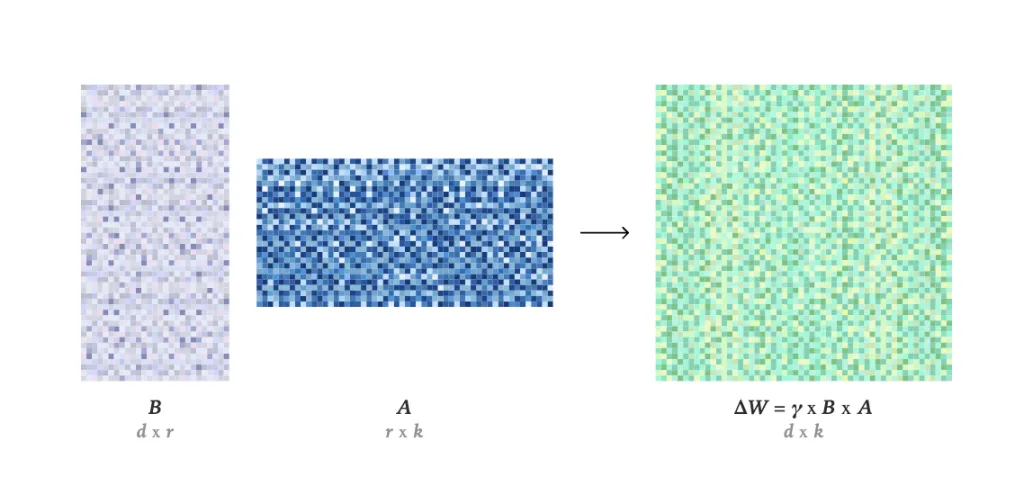

- LoRA-based post-training. Tinker implements Low-Rank Adaptation (LoRA) rather than full fine-tuning; the team's technical note ("LoRA Without Regret") argues that LoRA can match full fine-tuning for many practical workloads under the right settings.

- Portable artifacts. Download trained adapter weights to use outside Tinker (e.g., with your preferred inference stack or provider).
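The LoRA and portability points above rest on simple linear algebra. The sketch below shows the standard LoRA update from the original LoRA formulation (not Tinker-specific code): a frozen weight `W` plus a scaled low-rank product `B A`, and how folding that product into `W` yields an ordinary dense weight any stack can load. The helper names are hypothetical, and the article does not specify Tinker's adapter file format or which layers it adapts.

```python
def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def lora_forward(W, A, B, x, alpha, r):
    """y = W x + (alpha / r) * B (A x). W is frozen; only A and B are trained.

    Shapes: W is d_out x d_in, A is r x d_in, B is d_out x r.
    """
    scale = alpha / r
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    return [b + scale * d for b, d in zip(base, delta)]


def merge_adapter(W, A, B, alpha, r):
    """Fold the adapter into a dense weight W' = W + (alpha / r) * B A."""
    scale = alpha / r
    BA = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, BA)]


def lora_param_fraction(d_out, d_in, r):
    """Trainable LoRA parameters as a fraction of the full d_out x d_in matrix."""
    return r * (d_in + d_out) / (d_out * d_in)


# Standard LoRA initializes B to zero so training starts from the base model;
# a nonzero B is used here only to make the arithmetic visible.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 2.0]]      # rank r = 1
B = [[0.5], [0.0]]
x = [1.0, 1.0]
print(lora_forward(W, A, B, x, alpha=2.0, r=1))                 # prints [4.0, 1.0]
print(matvec(merge_adapter(W, A, B, alpha=2.0, r=1), x))        # prints [4.0, 1.0]
print(lora_param_fraction(4096, 4096, 32))                      # prints 0.015625
```

The merged weight produces the same output as base-plus-adapter, which is why a small downloaded adapter is enough to reproduce the fine-tuned model elsewhere. For a 4096 x 4096 projection at rank 32, the adapter trains about 1.6% of that matrix's parameters.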

What runs on it?

The Thinking Machines team positions Tinker as a managed post-training platform for open-weight models, from small LLMs up to large mixture-of-experts systems, with Qwen3-235B-A22B as an example of a supported model. Switching models is deliberately minimal: change a string identifier and rerun. Under the hood, runs are scheduled on Thinking Machines' internal clusters; the LoRA approach enables shared compute pools and higher utilization.
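The article does not explain how LoRA enables shared compute pools. One plausible mechanism, sketched here as an illustration and not as a description of Thinking Machines' scheduler, is that many jobs can share one frozen copy of the base weights and differ only in small per-job adapters. `SharedBasePool`, `Adapter`, and `stored_params` are hypothetical names.

```python
from dataclasses import dataclass


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


@dataclass
class Adapter:
    A: list       # r x d_in down-projection (trainable)
    B: list       # d_out x r up-projection (trainable)
    scale: float  # alpha / r


class SharedBasePool:
    """Hypothetical pool: one frozen base weight serves many LoRA jobs."""

    def __init__(self, W):
        self.W = W  # loaded once and never modified by any job
        self.adapters = {}

    def register(self, job_id, adapter):
        self.adapters[job_id] = adapter

    def forward(self, job_id, x):
        base = matvec(self.W, x)                # same base computation for every job
        ad = self.adapters[job_id]
        delta = matvec(ad.B, matvec(ad.A, x))   # small per-job correction
        return [b + ad.scale * d for b, d in zip(base, delta)]


def stored_params(d_out, d_in, r, n_jobs):
    """Parameters held for n_jobs: separate full copies vs. shared base + adapters."""
    full = n_jobs * d_out * d_in
    lora = d_out * d_in + n_jobs * r * (d_in + d_out)
    return full, lora


pool = SharedBasePool(W=[[1.0, 0.0], [0.0, 1.0]])
pool.register("job-a", Adapter(A=[[1.0, 0.0]], B=[[1.0], [0.0]], scale=1.0))
pool.register("job-b", Adapter(A=[[0.0, 1.0]], B=[[0.0], [2.0]], scale=1.0))
print(pool.forward("job-a", [1.0, 1.0]))  # prints [2.0, 1.0]
print(pool.forward("job-b", [1.0, 1.0]))  # prints [1.0, 3.0]
print(stored_params(4096, 4096, 16, 8))   # prints (134217728, 17825792)
```

For eight jobs on one 4096 x 4096 matrix at rank 16, the shared layout stores roughly 7.5x fewer parameters than eight full copies, which is the kind of saving that makes pooling many tenants on the same hardware attractive.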


Tinker Cookbook: Reference Training Loops and Post-Training Recipes

To reduce boilerplate while keeping the core API lean, the team published the Tinker Cookbook (Apache-2.0). It contains ready-to-use reference loops for supervised learning and reinforcement learning, plus worked examples for RLHF (three-stage SFT → reward modeling → policy RL), math-reasoning rewards, tool-use / retrieval-augmented tasks, prompt distillation, and multi-agent setups. The repo also ships utilities for LoRA hyperparameter calculation and integrations for evaluation.
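The three-stage RLHF recipe is the most involved item in that list. The announcement does not say which objective the Cookbook's reward-modeling stage uses; a common choice in the RLHF literature is the Bradley-Terry pairwise loss, sketched below in plain Python as an assumption and not as the Cookbook's code. The reward model should score the preferred completion above the rejected one, and the loss is -log sigmoid(r_chosen - r_rejected), averaged over pairs.

```python
import math


def log_sigmoid(z):
    """Numerically stable log(sigmoid(z)) for large positive or negative z."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def pairwise_reward_loss(chosen_scores, rejected_scores):
    """Mean Bradley-Terry loss over (chosen, rejected) reward-score pairs."""
    pairs = list(zip(chosen_scores, rejected_scores))
    return -sum(log_sigmoid(c - r) for c, r in pairs) / len(pairs)


# Hypothetical scores a reward model might assign to two preference pairs.
chosen = [1.2, 0.3]
rejected = [0.2, 0.9]
print(pairwise_reward_loss(chosen, rejected))
```

With equal scores the loss is log 2; it shrinks as the margin in favor of the chosen completion grows, and the stable `log_sigmoid` keeps very negative margins from overflowing. The trained reward model then supplies the reward signal for the policy-RL stage.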

Who is already using it?

Early users include groups at Princeton (Gödel Prover team), Stanford (Rotskoff Chemistry), UC Berkeley (SkyRL, async off-policy multi-agent/tool-use RL), and Redwood Research (RL on Qwen3-32B for control tasks).

Tinker is in private beta right now, with waitlist sign-up open. The service is free to start, with usage-based pricing planned soon; organizations are asked to contact the team directly for onboarding.

I like that Tinker exposes low-level primitives (forward_backward, optim_step, save_state, sample) instead of a monolithic train(): it keeps objective design, reward shaping, and evaluation under my control while multi-node orchestration is handled on their managed clusters. The LoRA-first posture is pragmatic for cost and turnaround, and the team's own evaluation argues it can match full fine-tuning. The Cookbook's RLHF and SL reference loops are useful starting points, but I will judge the platform on how it performs under real workloads, including its data-governance safeguards (PII handling).

Overall, I prefer Tinker's open, flexible API: it lets me customize open LLMs through explicit training-loop primitives while the service handles distributed execution. Compared with closed systems, this preserves algorithmic control (losses, RLHF workflows, data handling) and lowers the barrier for new practitioners to experiment and iterate.

Check out the Technical details and sign up for the waitlist here. If you are a university or organization seeking wide-scale access, contact [email protected].


Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in mathematics, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.
