Meta Superintelligence Labs Introduces REFRAG: Scaling RAG with 16× Longer Contexts and Faster Decoding

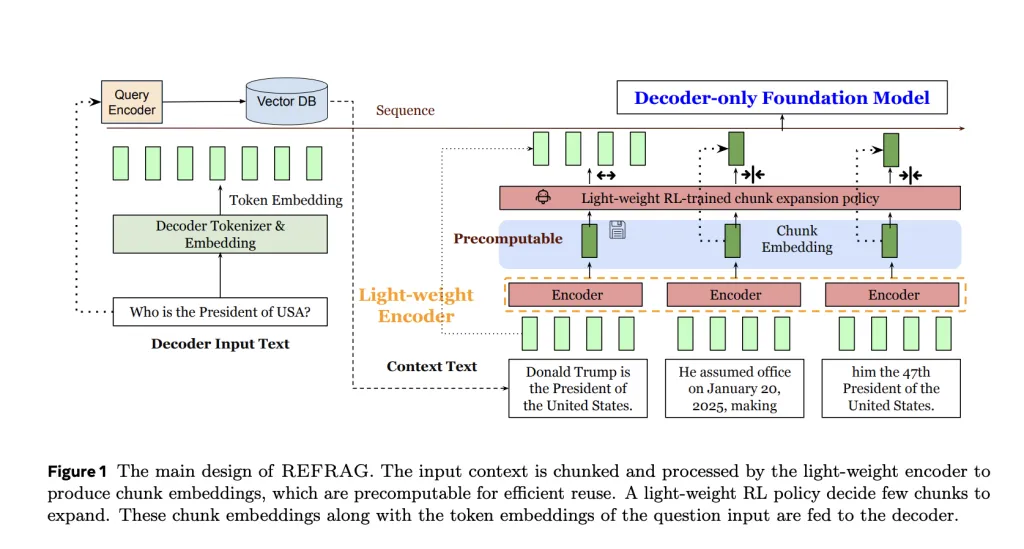

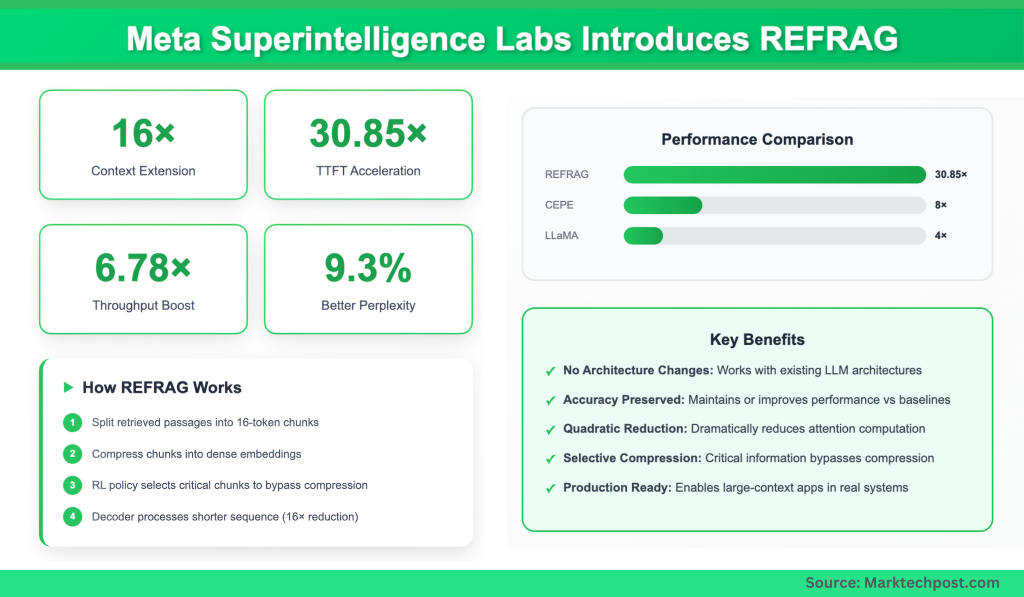

A team of researchers from Meta Superintelligence Labs, the National University of Singapore, and Rice University has unveiled REFRAG (REpresentation For RAG), a decoding framework that rethinks the efficiency of retrieval-augmented generation (RAG). REFRAG extends LLM context windows by 16× and achieves up to a 30.85× acceleration in time-to-first-token (TTFT) without compromising accuracy.

Why is long context such a bottleneck for LLMs?

The attention mechanism in large language models scales quadratically with input length. If a document is twice as long, the attention computation can grow fourfold. This not only slows inference but also increases the size of the key-value (KV) cache, making large-context applications impractical in production systems. In RAG settings, most retrieved passages contribute little to the final answer, but the model still pays the full quadratic price to process them.
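To make the scaling argument concrete, here is a back-of-the-envelope cost model. It is a sketch, not a profiler: it assumes a LLaMA-2-7B-like shape (32 layers, 32 heads, head dimension 128, fp16 values) and counts the entries of a full, unmasked attention matrix. The function names are hypothetical helpers for illustration.

```python
# Toy cost model for one transformer forward pass over n input tokens.
# Assumed shape (LLaMA-2-7B-like): 32 layers, 32 heads, head_dim 128, fp16 values.


def attention_score_entries(seq_len: int) -> int:
    """Query-key dot products per head per layer for a full (unmasked) attention matrix."""
    return seq_len * seq_len


def kv_cache_bytes(seq_len: int, layers: int = 32, heads: int = 32,
                   head_dim: int = 128, bytes_per_value: int = 2) -> int:
    """Keys and values (the factor 2) cached for every layer, head and token."""
    return 2 * layers * heads * head_dim * seq_len * bytes_per_value


for n in (2048, 4096):
    print(f"n={n}: score entries={attention_score_entries(n):,}, "
          f"KV cache={kv_cache_bytes(n) / 2**30:.1f} GiB")
```

Under these assumptions, doubling n from 2,048 to 4,096 quadruples the attention score entries, while the KV cache doubles from 1 GiB to 2 GiB per sequence. The cache grows linearly, but it is paid for every layer and every token, which is why it becomes a memory problem at long context.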

How does REFRAG compress and shorten context?

REFRAG introduces a lightweight encoder that splits retrieved passages into fixed-size chunks (e.g., 16 tokens) and compresses each one into a dense chunk embedding. Instead of feeding thousands of raw tokens, the decoder processes this much shorter sequence of embeddings. The result is a 16× reduction in sequence length, with no change to the LLM architecture.
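The chunk-and-compress step can be sketched as follows. This is a minimal illustration under loud assumptions: REFRAG's trained encoder is replaced here by mean pooling over toy, hash-like token embeddings, and `toy_token_embedding`, `encode_chunk`, and `EMBED_DIM` are hypothetical names. Only the shape of the computation (16 tokens in, one vector out) mirrors the description above.

```python
from typing import List

CHUNK_SIZE = 16  # k: tokens per chunk (the article's example value)
EMBED_DIM = 4    # toy embedding width; real models use far wider vectors


def split_into_chunks(token_ids: List[int], k: int = CHUNK_SIZE) -> List[List[int]]:
    """Split retrieved-passage tokens into fixed-size chunks (the last may be shorter)."""
    return [token_ids[i:i + k] for i in range(0, len(token_ids), k)]


def toy_token_embedding(token_id: int) -> List[float]:
    """Hypothetical stand-in for a learned token embedding table."""
    return [((token_id * (j + 1)) % 97) / 97.0 for j in range(EMBED_DIM)]


def encode_chunk(chunk: List[int]) -> List[float]:
    """Hypothetical lightweight encoder: mean-pool the chunk's token embeddings."""
    vectors = [toy_token_embedding(t) for t in chunk]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def compress_passage(token_ids: List[int], k: int = CHUNK_SIZE) -> List[List[float]]:
    """One embedding per chunk: the decoder sees len(token_ids) / k positions."""
    return [encode_chunk(chunk) for chunk in split_into_chunks(token_ids, k)]


passage = list(range(2048))  # 2,048 retrieved-context token ids
chunk_embeddings = compress_passage(passage)
print(len(passage), "->", len(chunk_embeddings))
```

With k = 16, the 2,048-token passage becomes 128 chunk embeddings, which is the 16× sequence-length reduction described above.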

How is acceleration achieved?

By shortening the decoder's input sequence, REFRAG reduces the quadratic attention computation and shrinks the KV cache. Empirical results show a 16.53× TTFT acceleration at k = 16 and a 30.85× acceleration at k = 32, where k is the number of tokens compressed into each chunk embedding. This far surpasses the prior state-of-the-art CEPE, which achieved only 2-8×. Throughput also improves by up to 6.78× compared to LLaMA baselines.
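A rough way to see why a larger k helps is to compare decoder positions before and after compression. The sketch below is a deliberately crude proxy, not a reproduction of the reported numbers. It keeps only the quadratic attention term and the linear KV-cache term, and ignores MLP cost, the encoder's own cost, and kernel effects, all of which keep measured TTFT gains well below this idealized ratio. The context and question lengths are hypothetical.

```python
def decoder_positions(context_tokens: int, query_tokens: int, k: int = 1) -> float:
    """Context shrinks k-fold after chunk compression; the question stays as raw tokens."""
    return context_tokens / k + query_tokens


def prefill_attention_cost(context_tokens: int, query_tokens: int, k: int = 1) -> float:
    """Quadratic-only proxy for prefill attention: (decoder positions) squared."""
    return decoder_positions(context_tokens, query_tokens, k) ** 2


ctx, q = 16_384, 64  # hypothetical: long retrieved context, short question
for k in (16, 32):
    attention_ratio = prefill_attention_cost(ctx, q) / prefill_attention_cost(ctx, q, k)
    kv_ratio = decoder_positions(ctx, q) / decoder_positions(ctx, q, k)
    print(f"k={k}: attention proxy ~{attention_ratio:.0f}x smaller, "
          f"KV cache ~{kv_ratio:.1f}x smaller")
```

The KV-cache ratio tracks k almost exactly when the question is short, while the attention proxy shrinks roughly with k squared; real end-to-end speedups land in between because the linear parts of the network do not shrink quadratically.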

How does REFRAG preserve accuracy?

A reinforcement learning (RL) policy supervises compression. It identifies the most information-dense chunks and lets them bypass compression, feeding their raw tokens directly to the decoder. This selective strategy ensures that critical details, such as exact numbers or rare entities, are not lost. Across multiple benchmarks, REFRAG maintained or improved perplexity compared to CEPE while operating at far lower latency.
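The effect of selective expansion on the decoder input can be sketched as below. The article describes an RL policy; this sketch swaps it for a plain top-scoring heuristic over hand-picked scores, so `select_chunks_to_expand`, `expand_fraction`, and the scores themselves are hypothetical. The point is only to show how expanded chunks contribute their raw tokens while the rest contribute one embedding slot each.

```python
from typing import List, Set, Tuple, Union

# A decoder slot is either a raw token id or a placeholder for a chunk embedding.
DecoderItem = Union[int, Tuple[str, int]]


def select_chunks_to_expand(scores: List[float], expand_fraction: float) -> Set[int]:
    """Heuristic stand-in for the RL policy: expand the highest-scoring chunks."""
    n_expand = int(len(scores) * expand_fraction)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return set(ranked[:n_expand])


def build_decoder_input(chunks: List[List[int]], scores: List[float],
                        expand_fraction: float = 0.25) -> List[DecoderItem]:
    expand = select_chunks_to_expand(scores, expand_fraction)
    sequence: List[DecoderItem] = []
    for i, chunk in enumerate(chunks):
        if i in expand:
            sequence.extend(chunk)         # raw tokens bypass compression
        else:
            sequence.append(("EMB", i))    # one slot for the compressed chunk
    return sequence


chunks = [list(range(i * 16, (i + 1) * 16)) for i in range(8)]  # 8 chunks x 16 tokens
scores = [0.1, 0.9, 0.2, 0.05, 0.7, 0.3, 0.0, 0.15]            # hypothetical scores
seq = build_decoder_input(chunks, scores)
print(len(seq))
```

Here chunks 1 and 4 are kept verbatim, so the decoder sees 2 × 16 + 6 = 38 positions instead of 128: most of the savings survive while the two "important" chunks keep every token.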

What do the experiments reveal?

REFRAG was pretrained on 20B tokens from the SlimPajama corpus (Books + arXiv) and tested on long-context datasets, RAG benchmarks, and long-document summarization, where it consistently outperformed strong baselines:

- 16× context extension beyond standard LLaMA-2 (4K tokens).

- ~9.3% perplexity improvement over CEPE across four datasets.

- Better accuracy in weak-retriever settings, where irrelevant passages dominate, due to the ability to process more passages under the same latency budget.
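The "more passages under the same budget" point is simple arithmetic. The sketch below treats a fixed number of decoder positions as a stand-in for the latency budget (an assumption: it ignores encoder cost and the non-linear parts of latency) and assumes each passage is chunked separately, so a partial final chunk still costs one slot. The 4,096-position budget is LLaMA-2's native context from the list above; the passage length is hypothetical.

```python
import math


def passages_that_fit(budget_positions: int, passage_tokens: int, k: int = 1) -> int:
    """Passages that fit in a fixed decoder budget when each is compressed k-fold."""
    slots_per_passage = math.ceil(passage_tokens / k)  # partial last chunk still costs a slot
    return budget_positions // slots_per_passage


budget = 4096       # LLaMA-2 native context length (4K tokens)
passage_len = 256   # hypothetical retrieved-passage length in tokens
for k in (1, 16):
    print(f"k={k}: {passages_that_fit(budget, passage_len, k)} passages")
```

Uncompressed, only 16 passages of 256 tokens fit; at k = 16 the same budget holds 256, which is why a weak retriever's few relevant passages are more likely to make it into the context.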

Summary

REFRAG shows that long-context LLMs don't have to be slow or memory-hungry. By compressing retrieved passages into compact embeddings, selectively expanding only the important ones, and rethinking how RAG decoding works, Meta Superintelligence Labs has made it possible to process much larger inputs while running dramatically faster. This makes large-scale applications, such as analyzing entire reports, handling multi-turn conversations, or scaling enterprise RAG systems, not only feasible but efficient, without compromising accuracy.

FAQs

Q1. What is REFRAG?

REFRAG (REpresentation For RAG) is a decoding framework from Meta Superintelligence Labs that compresses retrieved passages into embeddings, enabling faster and longer-context inference in LLMs.

Q2. How much faster is REFRAG compared to existing methods?

REFRAG delivers up to 30.85× faster time-to-first-token (TTFT) and up to 6.78× higher throughput compared to LLaMA baselines, while outperforming CEPE.

Q3. Does compression reduce accuracy?

No. A reinforcement learning policy ensures that critical chunks remain uncompressed, preserving key details. Across benchmarks, REFRAG maintained or improved accuracy relative to prior methods.

Q4. Where will the code be available?

Meta Superintelligence Labs will release REFRAG on GitHub at facebookresearch/refrag.

Check out the Paper here. Feel free to check out our GitHub page for tutorials, code, and notebooks. Also, feel free to follow us, and don't forget to join our 100k+ ML subreddit and subscribe to our newsletter.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.