Google AI Ships TimesFM-2.5: Smaller, Longer-Context Foundation Model That Now Leads GIFT-Eval (Zero-Shot Forecasting)

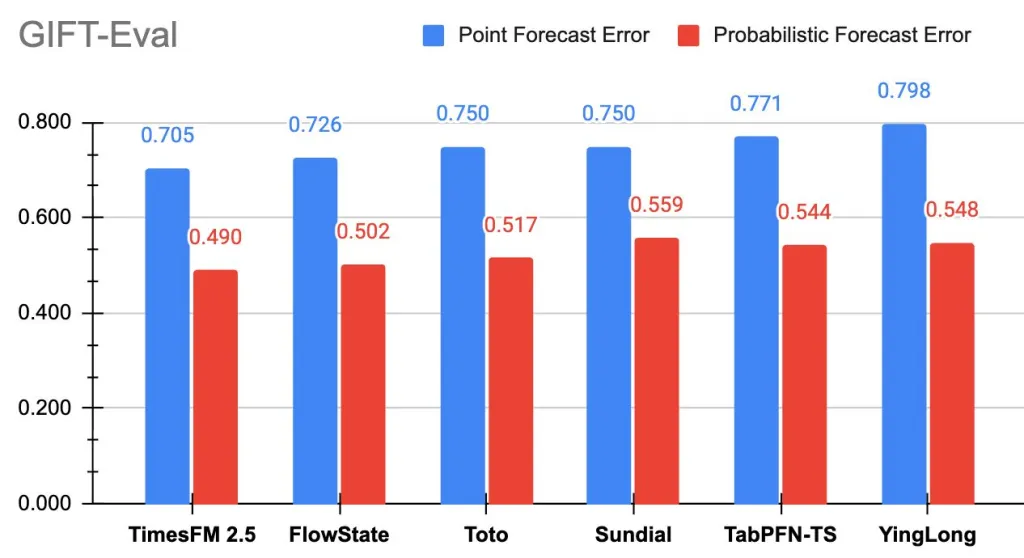

Google Research has released TimesFM-2.5, a 200M-parameter, decoder-only time-series foundation model with a 16K context length and native probabilistic forecasting support. The new checkpoint is live on Hugging Face. On GIFT-Eval, TimesFM-2.5 now tops the leaderboard across accuracy metrics (MASE, CRPS) among zero-shot foundation models.

What is time-series forecasting?

Time-series forecasting is the practice of analyzing sequential data points collected over time to identify patterns and predict future values. It underpins critical applications across industries, including forecasting product demand in retail, monitoring weather and precipitation trends, and optimizing large-scale systems such as supply chains. By capturing temporal dependencies and seasonal variation, time-series forecasting enables data-driven decisions in dynamic environments.
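
To make "capturing seasonal variation" concrete, here is a minimal sketch of a seasonal-naive forecaster, a common baseline that simply repeats the last observed season. It is not how TimesFM works; the function name and the sales figures are hypothetical and chosen only for illustration.

```python
def seasonal_naive_forecast(history, season_length, horizon):
    """Repeat the last full season of `history` for `horizon` future steps."""
    if len(history) < season_length:
        raise ValueError("history must cover at least one full season")
    last_season = history[-season_length:]
    return [last_season[i % season_length] for i in range(horizon)]


# Hypothetical daily sales with a weekly pattern (two weeks of history).
sales = [10, 12, 13, 12, 15, 22, 25,
         11, 13, 14, 13, 16, 23, 26]

forecast = seasonal_naive_forecast(sales, season_length=7, horizon=10)
print(forecast)
```

A learned model is expected to beat this baseline by also picking up trend and interactions between seasonal cycles, which a plain repeat of the last week cannot do.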

What changed in TimesFM-2.5 vs v2.0?

- Parameters: 200M (down from 500M in 2.0).

- Max context: 16,384 points (up from 2,048).

- Quantiles: Optional 30M-parameter quantile head for continuous quantile forecasts up to a 1K horizon.

- Inputs: No “frequency” indicator required; new inference flags (flip-invariance, positivity inference, quantile-crossing fix).

- Roadmap: Upcoming Flax implementation for faster inference; covariates support slated to return; documentation being expanded.
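
The three inference flags (flip-invariance, positivity inference, quantile-crossing fix) are named here but not defined. The sketch below shows one plausible reading of each as a plain post-processing step. Every function name is hypothetical, and none of this is taken from the TimesFM codebase; it only illustrates the kind of guarantee each flag is assumed to provide.

```python
def fix_quantile_crossing(quantiles_per_step):
    """Assumed meaning: for each step, quantile values must be non-decreasing
    in the quantile level. Sorting each step is the simplest repair."""
    return [sorted(step) for step in quantiles_per_step]


def clip_if_positive_history(history, forecast):
    """Assumed meaning: if the input never goes below zero, do not forecast
    negative values."""
    if min(history) >= 0:
        return [max(0.0, value) for value in forecast]
    return list(forecast)


def flip_invariant(forecast_fn):
    """Assumed meaning: forecasting the negated series should give the negated
    forecast. Averaging f(x) and -f(-x) enforces that for any forecaster."""
    def wrapped(history, horizon):
        plus = forecast_fn(history, horizon)
        minus = forecast_fn([-value for value in history], horizon)
        return [(p - m) / 2 for p, m in zip(plus, minus)]
    return wrapped


def toy_forecaster(history, horizon):
    """Hypothetical stand-in model: last value plus one, repeated."""
    return [history[-1] + 1.0] * horizon


symmetric = flip_invariant(toy_forecaster)
print(symmetric([1.0, 2.0, 3.0], 2))
print(symmetric([-1.0, -2.0, -3.0], 2))
print(fix_quantile_crossing([[1.0, 0.8, 1.5]]))
print(clip_if_positive_history([0.0, 2.0, 1.0], [-0.5, 0.3]))
```

The toy forecaster is deliberately asymmetric (it always adds one), so the wrapper's effect is visible: the two printed forecasts are exact negations of each other.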

Why does a longer context matter?

16K historical points allow a single forward pass to capture multi-seasonal structure, regime breaks, and low-frequency components without tiling or hierarchical stitching. In practice, that reduces preprocessing heuristics and improves stability in domains where context >> horizon (e.g., energy load, retail demand). The longer context is a core design change explicitly noted for 2.5.
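
A quick back-of-the-envelope calculation shows what the jump from 2,048 to 16,384 points means in calendar time. The sampling rates below are illustrative assumptions, not values from the release.

```python
OLD_CONTEXT = 2_048    # max context in v2.0
NEW_CONTEXT = 16_384   # max context in v2.5

# Illustrative sampling rates (observations per day).
SAMPLES_PER_DAY = {"15-minute": 96, "hourly": 24, "daily": 1}


def days_covered(context_points, samples_per_day):
    """Calendar days of history that fit into the context window."""
    return context_points / samples_per_day


for name, per_day in SAMPLES_PER_DAY.items():
    old_days = days_covered(OLD_CONTEXT, per_day)
    new_days = days_covered(NEW_CONTEXT, per_day)
    print(f"{name:>9}: {old_days:8.1f} days -> {new_days:8.1f} days")
```

For hourly data, the old window holds about 85 days, less than one quarter, while the new one holds about 683 days, close to two full yearly cycles. That is the difference between seeing only weekly seasonality and seeing annual seasonality in a single pass.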

What is the research context?

TimesFM's core thesis, a single decoder-only foundation model for forecasting, was introduced in the ICML 2024 paper and Google's research blog. GIFT-Eval (Salesforce) emerged to standardize evaluation across domains, frequencies, horizon lengths, and univariate/multivariate regimes, with a public leaderboard hosted on Hugging Face.

Key Takeaways

- Smaller, faster model: TimesFM-2.5 runs with 200M parameters (less than half the size of 2.0) while improving accuracy.

- Longer context: Supports a 16K input length, enabling forecasts with deeper historical coverage.

- Benchmark leader: Now ranks #1 among zero-shot foundation models on GIFT-Eval for both MASE (point accuracy) and CRPS (probabilistic accuracy).

- Production-ready: The efficient design and quantile forecasting support make it suitable for real-world deployments across industries.

- Broad availability: The model is live on Hugging Face.
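
For readers unfamiliar with the two metrics, the sketch below implements MASE and the pinball (quantile) loss in plain Python. CRPS is commonly approximated by averaging pinball loss over a grid of quantile levels; this is a generic illustration, not GIFT-Eval's evaluation code, and the numbers are made up.

```python
def mase(actual, predicted, history, season_length=1):
    """Mean absolute scaled error: forecast MAE divided by the in-sample MAE
    of a seasonal-naive forecast on `history`. Values below 1 beat the baseline."""
    forecast_mae = sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)
    naive_errors = [abs(history[t] - history[t - season_length])
                    for t in range(season_length, len(history))]
    scale = sum(naive_errors) / len(naive_errors)
    return forecast_mae / scale


def pinball_loss(actual, quantile_forecast, level):
    """Average pinball loss of a forecast for one quantile `level` in (0, 1)."""
    total = 0.0
    for a, q in zip(actual, quantile_forecast):
        diff = a - q
        total += level * diff if diff >= 0 else (level - 1) * diff
    return total / len(actual)


# Made-up example: a steadily rising series and a two-step forecast.
history = [1.0, 2.0, 3.0, 4.0, 5.0]
actual = [6.0, 7.0]

point_score = mase(actual, [5.0, 7.0], history)
median_score = pinball_loss(actual, [5.0, 8.0], level=0.5)
print(point_score, median_score)
```

Both metrics are "lower is better". MASE is scale-free, which is what allows a benchmark to aggregate scores across datasets with very different units.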

Summary

TimesFM-2.5 shows that foundation models for forecasting are moving past proof of concept into practical, production-ready tools. By cutting the parameter count by more than half while extending context length and leading GIFT-Eval in both point and probabilistic accuracy, it marks a step change in efficiency and capability. With Hugging Face access already live and BigQuery/Model Garden integration on the way, the model is positioned to accelerate adoption of zero-shot time-series forecasting in real-world pipelines.

Check out the Model Card (HF), Repo, Benchmark, and Paper.

Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.