IBM and ETH Zürich Researchers Unveil Analog Foundation Models to Tackle Noise in In-Memory AI Hardware

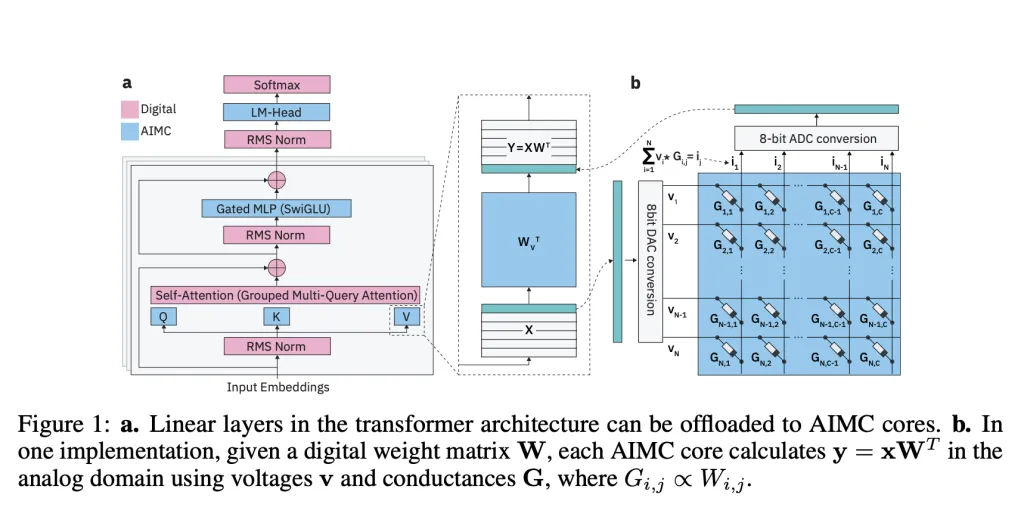

IBM researchers, together with ETH Zürich, have unveiled a new class of Analog Foundation Models (AFMs) designed to bridge the gap between large language models (LLMs) and Analog In-Memory Computing (AIMC) hardware. AIMC has long promised a radical leap in efficiency: running models with a billion parameters in a small footprint, using dense non-volatile memory (NVM) devices that combine storage and computation. But the technology's Achilles' heel has been noise.

Why does analog computing matter for LLMs?

Unlike GPUs or TPUs, which shuttle data between memory and compute units, AIMC performs matrix-vector multiplications directly inside memory arrays. This design removes the von Neumann bottleneck and delivers major improvements in throughput and power efficiency. Prior studies showed that combining AIMC with 3D NVM and Mixture-of-Experts (MoE) architectures could, in principle, support trillion-parameter models on compact accelerators. That could make foundation-scale AI feasible on devices well beyond data centers.
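To make the in-memory idea concrete, here is a minimal, idealized sketch of how a crossbar array could compute a matrix-vector product. It assumes each signed weight is stored as a differential pair of non-negative conductances, inputs are applied as voltages, and each output line sums its device currents (Ohm's law plus Kirchhoff's current law). The constant `G_MAX` and the function names are hypothetical, and noise is deliberately left out.

```python
# Idealized analog crossbar matrix-vector multiply (no noise modeled).
# Assumption: each signed weight is a differential pair of non-negative
# conductances, so the effective weight is proportional to G_plus - G_minus.

G_MAX = 25.0  # hypothetical maximum device conductance (arbitrary units)


def weights_to_conductances(weights, w_max):
    """Map each signed weight to a (G_plus, G_minus) pair scaled into [0, G_MAX]."""
    pairs = []
    for row in weights:
        pair_row = []
        for w in row:
            g = abs(w) / w_max * G_MAX
            pair_row.append((g, 0.0) if w >= 0 else (0.0, g))
        pairs.append(pair_row)
    return pairs


def crossbar_mvm(conductance_pairs, voltages, w_max):
    """Each output line sums the currents I = (G_plus - G_minus) * V of its devices."""
    outputs = []
    for pair_row in conductance_pairs:
        current = sum((gp - gm) * v for (gp, gm), v in zip(pair_row, voltages))
        outputs.append(current * w_max / G_MAX)  # convert current back to weight units
    return outputs


W = [[0.5, -1.0, 0.25],
     [-0.75, 0.0, 1.0]]
x = [1.0, 2.0, -4.0]  # inputs, applied as voltages

w_max = max(abs(w) for row in W for w in row)
analog = crossbar_mvm(weights_to_conductances(W, w_max), x, w_max)
digital = [sum(w * xi for w, xi in zip(row, x)) for row in W]
print(analog)   # [-2.5, -4.75]
print(digital)  # [-2.5, -4.75]
```

The weights stay in the array the whole time; only input voltages go in and summed currents come out, which is why there is no weight traffic between a separate memory and compute unit.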

What makes Analog In-Memory Computing (AIMC) so difficult to use in practice?

The biggest barrier is noise. AIMC computations suffer from device variability, DAC/ADC quantization, and runtime fluctuations that degrade model accuracy. Unlike quantization on GPUs, where errors are deterministic and manageable, analog noise is stochastic and unpredictable. Earlier research found ways to adapt small networks such as CNNs and RNNs (<100M parameters) to tolerate such noise, but LLMs with billions of parameters consistently broke down under AIMC constraints.
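The contrast between deterministic digital quantization and stochastic analog noise can be illustrated with a toy simulator. The noise model below (Gaussian programming noise applied once when weights are written, Gaussian read noise resampled on every call, uniform DAC/ADC quantization) and all parameter values are illustrative assumptions, not a calibrated model of any real AIMC chip.

```python
import random


def quantize(value, v_max, bits):
    """Uniform symmetric quantization with clipping, as a DAC or ADC would apply."""
    levels = 2 ** (bits - 1) - 1
    step = v_max / levels
    clipped = max(-v_max, min(v_max, value))
    return round(clipped / step) * step


def digital_quantized_mvm(W, x, bits=8, x_max=4.0):
    """Digital low-precision inference: the quantization error is fixed for given inputs."""
    xq = [quantize(v, x_max, bits) for v in x]
    return [sum(w * v for w, v in zip(row, xq)) for row in W]


def program_weights(W, rng, prog_noise=0.02):
    """Device variability: each weight lands slightly off target, once, at programming time."""
    return [[w + rng.gauss(0.0, prog_noise) for w in row] for row in W]


def analog_read(W_dev, x, rng, bits=8, x_max=4.0, y_max=8.0, read_noise=0.05):
    """One analog MVM: DAC-quantized inputs, fresh read noise per call, ADC-quantized outputs."""
    xq = [quantize(v, x_max, bits) for v in x]
    out = []
    for row in W_dev:
        acc = sum(w * v for w, v in zip(row, xq))
        acc += rng.gauss(0.0, read_noise)  # runtime fluctuation, resampled on every call
        out.append(quantize(acc, y_max, bits))
    return out


W = [[0.5, -1.0, 0.25], [-0.75, 0.0, 1.0]]
x = [1.0, 2.0, -4.0]
rng = random.Random(0)

W_dev = program_weights(W, rng)
digital_runs = {tuple(digital_quantized_mvm(W, x)) for _ in range(200)}
analog_runs = {tuple(analog_read(W_dev, x, rng)) for _ in range(200)}
print(len(digital_runs), len(analog_runs))
```

Repeating the same digital computation always yields one distinct result, while repeated analog reads of the same inputs scatter across several ADC levels; a network has to be robust to that scatter rather than to a single fixed error.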

How do Analog Foundation Models address the noise problem?

The IBM team introduces Analog Foundation Models, which integrate hardware-aware training to prepare LLMs for analog execution. Their pipeline uses:

- Noise injection during training to simulate AIMC randomness.

- Weight clipping to stabilize weight distributions within device limits.

- Learned input/output quantization ranges aligned with real hardware constraints.

- Distillation from pre-trained LLMs using 20B tokens of synthetic data.
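As a rough illustration of how these four ingredients could fit together, the sketch below runs one forward pass of a linear layer that clips its weights, quantizes inputs and outputs to fixed ranges, and injects Gaussian noise, then scores the result with a KL-divergence distillation loss against a clean teacher. The clipping rule (n standard deviations), the noise scale, the ranges, the choice of KL loss and every function name are hypothetical choices for illustration; this is not the AIHWKIT-Lightning API, and gradient computation (needed to actually learn the ranges) is omitted.

```python
import math
import random


def clip_weights(row, n_sigma=3.0):
    """Illustrative clipping rule: keep weights within +/- n_sigma std of the row."""
    mean = sum(row) / len(row)
    std = math.sqrt(sum((w - mean) ** 2 for w in row) / len(row))
    bound = n_sigma * std
    return [max(-bound, min(bound, w)) for w in row]


def quantize_static(value, v_range, bits=8):
    """Quantize to a fixed range; in training this range would be a learned parameter."""
    levels = 2 ** (bits - 1) - 1
    step = v_range / levels
    return round(max(-v_range, min(v_range, value)) / step) * step


def hw_aware_linear(W, x, rng, in_range=4.0, out_range=8.0, noise_scale=0.02):
    """One forward pass of a linear layer as it might look during hardware-aware training."""
    xq = [quantize_static(v, in_range) for v in x]   # input quantization, static range
    y = []
    for row in W:
        row_c = clip_weights(row)                    # keep weights inside device limits
        w_max = max(abs(w) for w in row_c) or 1.0
        noisy = [w + rng.gauss(0.0, noise_scale * w_max) for w in row_c]  # noise injection
        acc = sum(w * v for w, v in zip(noisy, xq))
        y.append(quantize_static(acc, out_range))    # output quantization, static range
    return y


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def distillation_loss(teacher_logits, student_logits):
    """KL(teacher || student): the noisy student is pushed toward the clean teacher."""
    p = softmax(teacher_logits)
    q = softmax(student_logits)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


rng = random.Random(0)
W = [[0.5, -1.0, 0.25], [-0.75, 0.0, 1.0]]
x = [1.0, 2.0, -4.0]
teacher = [sum(w * v for w, v in zip(row, x)) for row in W]  # clean full-precision output
student = hw_aware_linear(W, x, rng)                           # clipped, noisy, quantized
loss = distillation_loss(teacher, student)  # outputs treated as logits for illustration
print(student, loss)
```

In a real training loop the noise would be resampled at every step, so the student learns weights that work across many noise draws rather than one.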

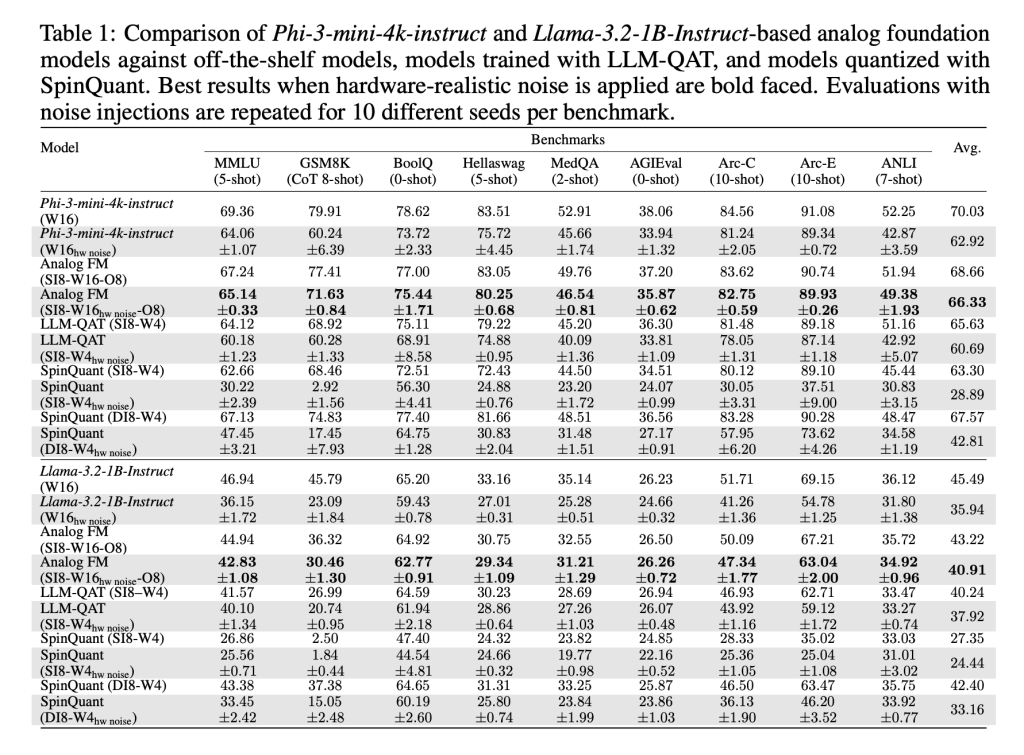

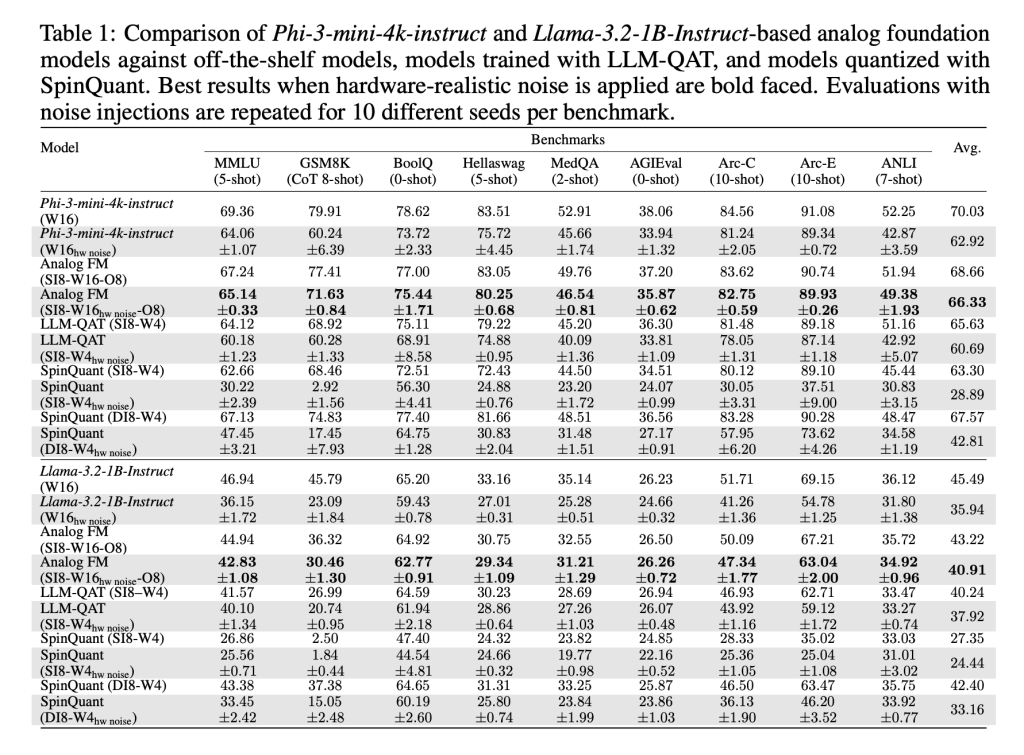

These methods, implemented with AIHWKIT-Lightning, allow models like Phi-3-mini-4k-instruct and Llama-3.2-1B-Instruct to sustain performance comparable to weight-quantized 4-bit / activation 8-bit baselines under analog noise. In evaluations across reasoning and factual benchmarks, AFMs outperformed both quantization-aware training (QAT) and post-training quantization (SpinQuant).

Do these models work only on analog hardware?

No. An unexpected outcome is that AFMs also perform strongly on low-precision digital hardware. Because AFMs are trained to tolerate noise and clipping, they handle simple post-training round-to-nearest (RTN) quantization better than existing methods. This makes them useful not only for AIMC accelerators but also for commodity digital hardware.
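Round-to-nearest (RTN) quantization is the simplest post-training scheme: scale each tensor by its maximum magnitude and round every weight to the nearest grid level. The sketch below, with made-up weight values, shows one plausible reason clipping-aware training helps: a single outlier stretches the 4-bit grid so that small weights collapse to zero, while a row whose range was kept tight is quantized far more finely. The symmetric per-tensor scheme and the numbers are assumptions for illustration.

```python
def rtn_quantize(weights, bits=4):
    """Symmetric per-tensor round-to-nearest: no calibration data, no optimization."""
    levels = 2 ** (bits - 1) - 1                       # 7 positive levels for 4-bit
    scale = (max(abs(w) for w in weights) / levels) or 1.0
    return [round(w / scale) * scale for w in weights], scale


def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Made-up weight rows: one with a large outlier, one whose range was kept
# tight (as training with weight clipping would encourage).
rows = {
    "outlier": [0.12, -0.08, 0.05, -0.15, 0.10, 2.40],
    "clipped": [0.12, -0.08, 0.05, -0.15, 0.10, 0.30],
}

errors = {}
for name, w in rows.items():
    wq, scale = rtn_quantize(w)
    errors[name] = mean_abs_error(w, wq)
    print(f"{name}: step={scale:.4f}, mean |error|={errors[name]:.4f}")
```

In the outlier row the grid step is about 0.34, so every small weight rounds to zero; in the clipped row the step is about 0.043 and the bulk of the weights survives quantization.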

Can performance scale with more compute at inference time?

Yes. The researchers tested test-time compute scaling on the MATH-500 benchmark, generating multiple answers per question and selecting the best one with a reward model. AFMs showed better scaling behavior than QAT models, with accuracy gaps shrinking as more inference compute was allocated. This fits AIMC's strengths: low-power, high-throughput inference rather than training.
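The test-time scaling setup described above (several sampled answers, one reward model picking the best) is commonly called best-of-N sampling. Below is a minimal sketch with toy stand-ins: `toy_generate` and `toy_reward` are hypothetical, and the reward here is an oracle that always recognizes the right answer, which real learned reward models do not.

```python
import random
from typing import Callable, Dict, List


def best_of_n(question: str,
              generate: Callable[[str, random.Random], str],
              reward: Callable[[str, str], float],
              n: int,
              rng: random.Random) -> str:
    """Sample n candidate answers and return the one the reward model scores highest."""
    candidates: List[str] = [generate(question, rng) for _ in range(n)]
    return max(candidates, key=lambda answer: reward(question, answer))


# Hypothetical toy stand-ins: the "model" is right 30% of the time,
# and the "reward model" is an oracle that knows the right answer.
def toy_generate(question: str, rng: random.Random) -> str:
    return "42" if rng.random() < 0.3 else str(rng.randint(0, 99))


def toy_reward(question: str, answer: str) -> float:
    return 1.0 if answer == "42" else 0.0


rng = random.Random(0)
trials = 1000
accuracy: Dict[int, float] = {}
for n in (1, 4, 16):  # larger n means more inference compute per question
    hits = sum(best_of_n("toy question", toy_generate, toy_reward, n, rng) == "42"
               for _ in range(trials))
    accuracy[n] = hits / trials
print(accuracy)
```

With an imperfect reward model, accuracy typically grows more slowly as n increases, so the toy numbers overstate the benefit.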

How does this affect the future of Analog In-Memory Computing (AIMC)?

The research team provides the first systematic demonstration that large language models can be adapted to AIMC hardware without catastrophic loss of accuracy. While training AFMs is resource-heavy and reasoning tasks such as GSM8K still show accuracy gaps, the results are a milestone. The combination of energy efficiency, robustness to noise, and compatibility with digital hardware makes AFMs a promising direction for scaling foundation models beyond GPU limits.

Summary

The introduction of Analog Foundation Models marks an important milestone for scaling LLMs beyond the limits of digital accelerators. By making models robust to the unpredictable noise of analog in-memory computing, the research team shows that AIMC can move from theoretical promise to practical platform. While training costs remain high and reasoning benchmarks still show gaps, this work establishes a path toward energy-efficient large models running on compact hardware, pushing foundation models closer to edge deployment.

Check out the Paper and GitHub Page.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform draws over 2 million monthly views, illustrating its popularity among audiences.
