Datasets for training a language model

A language model is a mathematical model that describes a human language as a probability distribution over its vocabulary. To train a deep learning network to model a language, you need to identify the vocabulary and learn its probability distribution. You cannot create the model from nothing. You need a dataset for your model to learn from.
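To make "a probability distribution over its vocabulary" concrete, here is a minimal sketch that estimates word probabilities by relative frequency. The toy corpus and the `unigram_distribution` helper are invented for illustration. A real language model conditions each word on its context, which this context-free example does not attempt.

```python
from collections import Counter

def unigram_distribution(corpus):
    """Estimate P(word) by relative frequency over a list of sentences."""
    counts = Counter(
        word for sentence in corpus for word in sentence.lower().split()
    )
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}

# Toy corpus, invented for illustration only
toy_corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
]
probs = unigram_distribution(toy_corpus)

# "the" accounts for 4 of the 12 words in the toy corpus
print(f"P(the) = {probs['the']:.3f}")
```

The probabilities sum to one across the vocabulary, which is what makes this a distribution. Everything the model knows comes from the counts in the corpus, which is why the choice of dataset matters.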

In this article, you will learn about the datasets used to train language models and how to obtain common datasets from public repositories.

Let's get started.

Photos by Dan V. All rights reserved.

A good dataset for training a language model

A good language model should learn correct language usage, free of biases and errors. Unlike programming languages, human languages lack a formal grammar and syntax. They evolve continuously, which makes it impossible to catalog every language variation. Therefore, the model should be trained from a dataset instead of crafted from rules.

Setting up a dataset for language modeling is challenging. You need a large, diverse dataset that represents the nuances of the language. At the same time, it must be of high quality, presenting correct language usage. Ideally, the dataset should be manually edited and cleaned to remove noise such as typos, grammatical errors, and non-language content such as symbols or HTML tags.

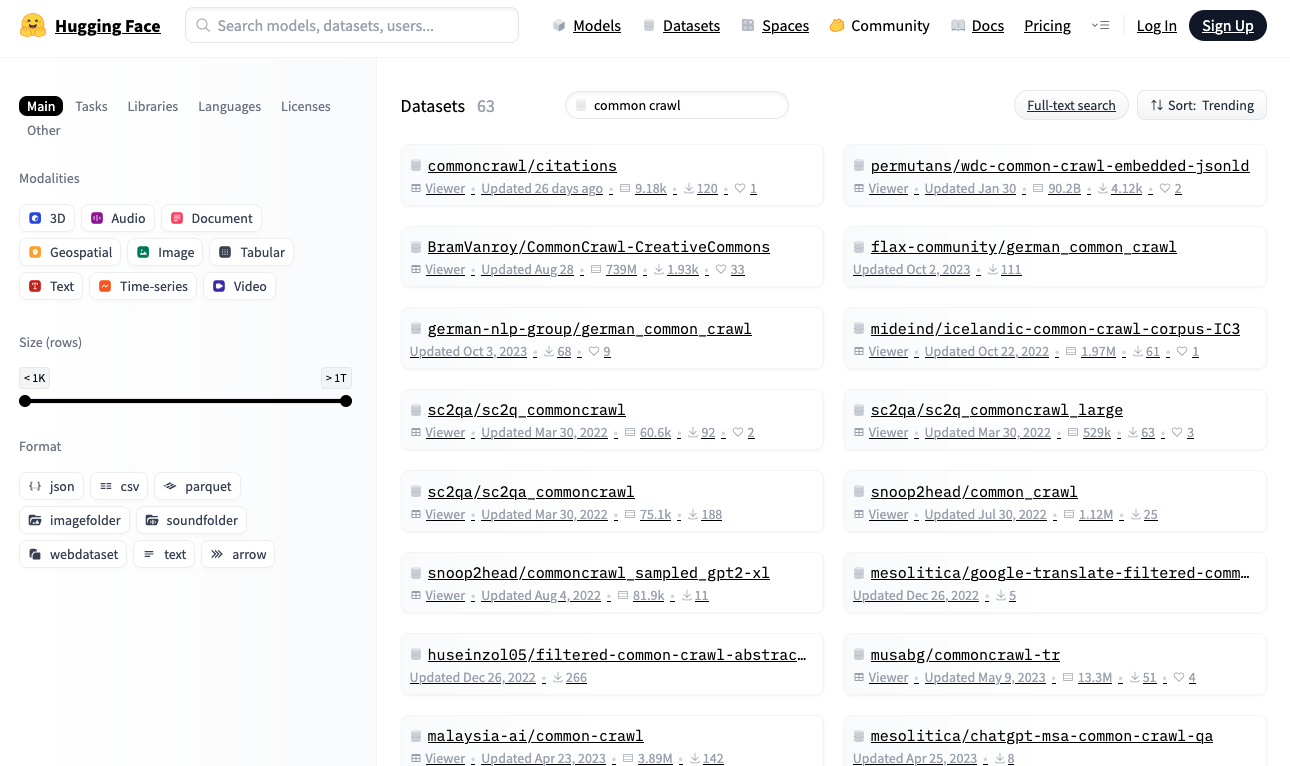

Creating such a dataset from scratch is expensive, but several high-quality datasets are freely available. Common datasets include:

- Common Crawl. A massive, continuously updated dataset of more than 9.5 petabytes with diverse content. It is used by leading models including GPT-3, Llama, and T5. However, since it is sourced from the web, it contains low-quality and duplicate content, as well as biases and offensive material. Rigorous cleaning and filtering are necessary to make it useful.

- C4 (Colossal Clean Crawled Corpus). A 750GB dataset scraped from the web. Unlike Common Crawl, this dataset is pre-cleaned and filtered, making it easier to use. However, expect potential biases and errors. The T5 model was trained on this dataset.

- Wikipedia. The English content alone is around 19GB. It is large but manageable. It is well curated, structured, and edited to Wikipedia standards. While it covers a wide range of general knowledge with high factual accuracy, its encyclopedic style and tone are very specific. Training on this dataset alone may cause a model to overfit to this style.

- WikiText. A dataset derived from verified good and featured Wikipedia articles. Two versions exist: WikiText-2 (2 million words from hundreds of articles) and WikiText-103 (100 million words from 28,000 articles).

- BookCorpus. A few-GB dataset of long-form, content-rich, high-quality book texts. It is useful for learning coherent storytelling and long-range dependencies. However, it has known copyright issues and social biases.

- The Pile. An 825GB dataset curated from multiple sources, including BookCorpus. It mixes different text genres (books, articles, source code, and academic papers), providing broad topical coverage. However, this diversity leads to variable quality, duplicate content, and inconsistent writing styles.
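Several of the datasets above are noted for duplicate content. As a rough sketch of what filtering duplicates can look like, the following removes exact duplicates after light normalization. The `normalize` and `deduplicate` helpers are hypothetical names, and this only catches texts that are identical once case and whitespace are ignored; it does not attempt near-duplicate detection.

```python
import hashlib
import re

def normalize(text):
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()

def deduplicate(documents):
    """Yield each document once, keeping the first occurrence."""
    seen = set()
    for doc in documents:
        # Hash the normalized text so the set stays small for long documents
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            yield doc

# Toy documents, invented for illustration
docs = ["Hello  world.", "hello world.", "A different document."]
unique_docs = list(deduplicate(docs))
print(unique_docs)
```

Storing hashes instead of full texts keeps memory use bounded by the number of distinct documents rather than their total length.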

Getting the datasets

You can search for these datasets online and download them as compressed files. However, you will need to understand each dataset's format and write custom code to read it.
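As an illustration of the custom reading code this involves, here is a minimal sketch for one possible format: a gzip-compressed JSON Lines file with a `"text"` field per line. The format, the field name, and the `read_jsonl_gz` helper are assumptions made for the example; each real dataset documents its own layout.

```python
import gzip
import json
import os
import tempfile

def read_jsonl_gz(path, text_key="text"):
    """Yield the text field from each line of a gzip-compressed JSON Lines file."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines
                yield json.loads(line)[text_key]

# Build a tiny sample file so the sketch runs on its own
sample_path = os.path.join(tempfile.mkdtemp(), "sample.jsonl.gz")
with gzip.open(sample_path, "wt", encoding="utf-8") as f:
    f.write(json.dumps({"text": "First document."}) + "\n")
    f.write(json.dumps({"text": "Second document."}) + "\n")

texts = list(read_jsonl_gz(sample_path))
print(texts)
```

Reading line by line through a generator avoids decompressing the whole file into memory, which matters once files reach many gigabytes.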

Alternatively, search for datasets in the Hugging Face repository. This repository provides a Python library that lets you download and read datasets on demand using a standardized format.

Hugging Face datasets

Let's download the WikiText-2 dataset from Hugging Face, one of the smallest datasets suitable for building a language model:

    import random
    from datasets import load_dataset

    # Load the training split; without split=, load_dataset returns a dict of splits
    dataset = load_dataset("wikitext", "wikitext-2-raw-v1", split="train")
    print(f"Dataset size: {len(dataset)}")

    # Print a few samples
    n = 5
    while n > 0:
        idx = random.randint(0, len(dataset) - 1)
        text = dataset[idx]["text"].strip()
        # Skip empty lines and header lines such as "= Title ="
        if text and not text.startswith("="):
            print(f"{idx}: {text}")
            n -= 1

The output can look like this:

    Dataset size: 36718
    31776: The Missouri 's headwaters above Three Forks extend much farther upstream than …
    29504: Regional variants of the word Allah occur in both pagan and Christian pre @-@ …
    19866: Pokiri ( English : Rogue ) is a 2006 Indian Telugu @-@ language action film , …
    27397: The first flour mill in Minnesota was built in 1823 at Fort Snelling as …
    10523: The music industry took note of Carey 's success . She won two awards at the …

If you haven't already, install the Hugging Face datasets library:

    pip install datasets

When you run this code for the first time, load_dataset() downloads the dataset to your local machine. Make sure you have enough disk space, especially for large datasets. By default, datasets are downloaded to ~/.cache/huggingface/datasets.
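Before downloading a large dataset, it can help to check how much space is free where the cache lives. The sketch below uses only the standard library. The `free_space_gb` helper is a hypothetical name, and reading the `HF_DATASETS_CACHE` environment variable as an override of the default location is an assumption about how your environment is configured.

```python
import os
import shutil

def free_space_gb(path):
    """Return free disk space in GB at the nearest existing parent of path."""
    path = os.path.abspath(os.path.expanduser(path))
    # The cache directory may not exist yet, so walk up to a directory that does
    while not os.path.exists(path):
        path = os.path.dirname(path)
    return shutil.disk_usage(path).free / 1e9

# Assumed override variable, falling back to the default location named above
cache_dir = os.environ.get("HF_DATASETS_CACHE", "~/.cache/huggingface/datasets")
print(f"Free space near {cache_dir}: {free_space_gb(cache_dir):.1f} GB")
```

Compare the result with the dataset sizes listed earlier: WikiText-2 fits almost anywhere, while C4 or The Pile need hundreds of gigabytes.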

All Hugging Face datasets follow a standard format. The dataset object is an iterable, with each item as a dictionary. For training a language model, datasets usually contain text strings. In this dataset, the text is stored under the "text" key.

The code above samples a few items from the dataset. You will see plain text strings of varying lengths.

Post-processing the datasets

Before training a language model, you may want to post-process the dataset to clean the data. This includes reformatting text (clipping long strings, replacing multiple spaces with single spaces), removing non-language content (HTML tags, symbols), and removing unwanted characters (extra spaces around punctuation). The specific processing depends on the dataset and how you want to present the text to the model.
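The cleanup steps listed above can be sketched with a few regular expressions. This is one possible arrangement, not a recommended pipeline: the `clean_text` helper, the order of the steps, and the `max_chars` default are all assumptions chosen for illustration.

```python
import html
import re

def clean_text(text, max_chars=1000):
    """Apply simple cleanup steps to one string of raw text."""
    text = re.sub(r"<[^>]+>", " ", text)          # drop HTML tags
    text = html.unescape(text)                    # decode entities such as &amp;
    text = re.sub(r"\s+", " ", text).strip()      # collapse runs of whitespace
    text = re.sub(r"\s+([,.;:!?])", r"\1", text)  # remove space before punctuation
    return text[:max_chars]                       # clip overly long strings

sample = "<p>Hello   ,  world !</p>  Tom &amp; Jerry   ."
print(clean_text(sample))
# prints: Hello, world! Tom & Jerry.
```

Tags are stripped before entities are decoded so that an escaped `&lt;` in the text is kept as a literal character instead of being mistaken for markup.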

For example, if you are training a small BERT-style model that only handles lowercase letters, you can reduce the size of the vocabulary and simplify the tokenizer. Here is a generator function that provides the post-processed text:

    from datasets import load_dataset

    def wikitext2_dataset():
        """Yield lowercase text lines from the WikiText-2 training split."""
        dataset = load_dataset("wikitext", "wikitext-2-raw-v1", split="train")
        for item in dataset:
            text = item["text"].strip()
            if not text or text.startswith("="):
                continue  # skip empty lines or header lines
            yield text.lower()  # generate the lowercase version of the text
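To see why lowercasing reduces the vocabulary, the sketch below counts distinct tokens with and without it. The toy lines stand in for dataset text, and splitting on whitespace is a simplification of what a real tokenizer does; the `vocabulary` helper is a hypothetical name.

```python
def vocabulary(lines, lowercase=False):
    """Collect the set of whitespace-separated tokens in an iterable of strings."""
    vocab = set()
    for line in lines:
        if lowercase:
            line = line.lower()
        vocab.update(line.split())
    return vocab

# Toy lines standing in for dataset text (invented for illustration)
lines = ["The cat saw the dog", "the Dog saw The cat"]

cased = vocabulary(lines)
uncased = vocabulary(lines, lowercase=True)
print(len(cased), len(uncased))
# prints: 6 4
```

With case preserved, "The" and "the" occupy separate vocabulary entries; lowercasing merges them, so the model has fewer tokens to learn at the cost of losing the case distinction.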

Creating a good post-processing function is an art. It should improve the dataset's signal-to-noise ratio to help the model learn better, while preserving the ability to handle unexpected input formats that a trained model may encounter.

Summary

In this article, you learned about the datasets used to train language models and how to obtain common datasets from public repositories. This is only a starting point for exploring datasets. Consider using existing libraries and tools to optimize data loading speed so that it does not become a bottleneck in your training process.